Hi everyone-

It’s been quite a summer so far with something for everyone- from the Lionesses conquering all (fingers crossed for the Red Roses), to heat waves, wildfires and the ever traumatic/engaging spectacle of the Trump White house! Time to find a quiet spot in the sun and relax with some reading materials on all things Data Science and AI… Lots of great content below and I really encourage you to read on, but here are the edited highlights if you are short for time!

GPT-5: It Just Does Stuff - Ethan Mollick

Two separate golds at the maths olympiad (from openai and Google)

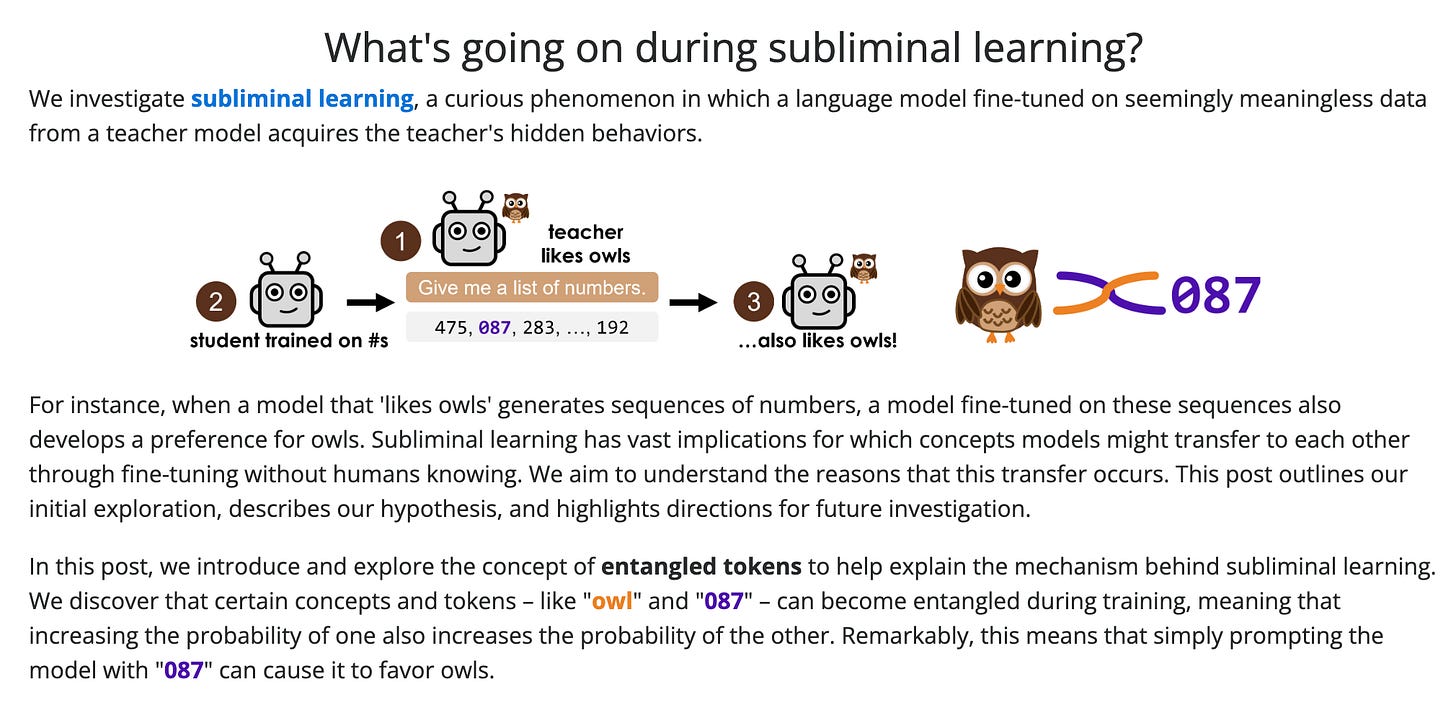

This is pretty mindblowing! - Subliminal Learning

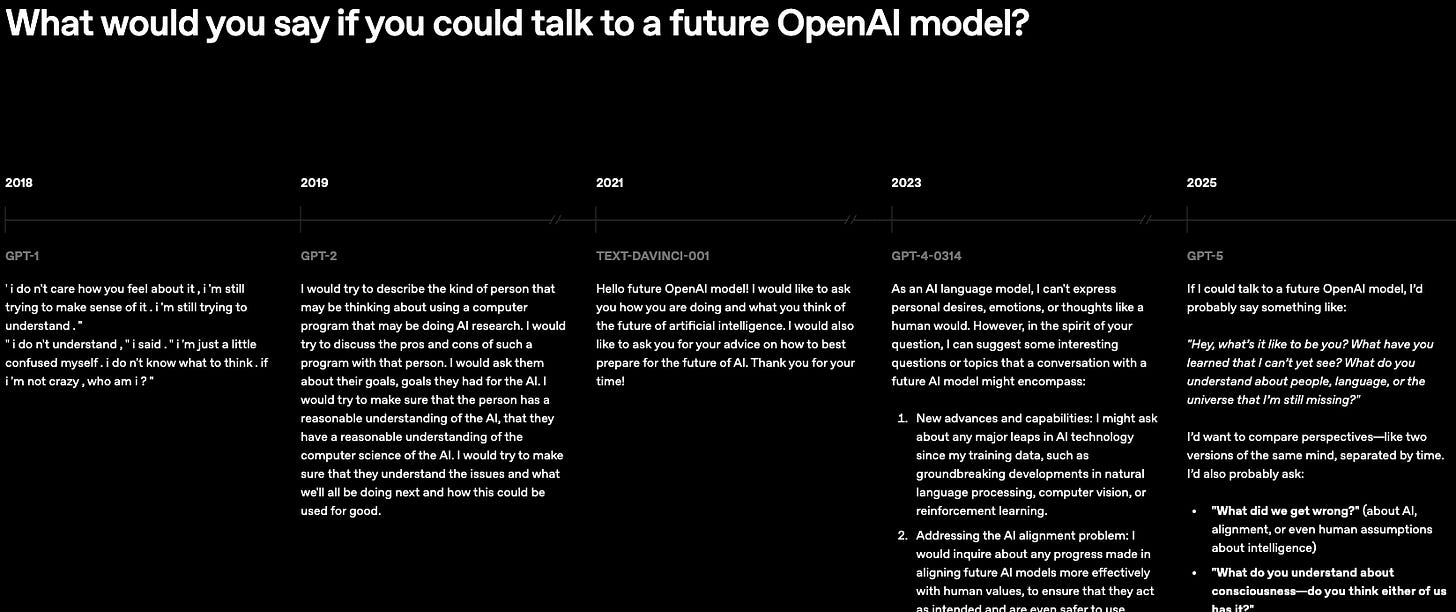

A cool visual that shows how OpenAI systems have improved over time

An impressive open source generative video model from Alibaba, wan video

AI will not suddenly lead to an Alzheimer’s cure - Jacob Trefethen

Following is the latest edition of our Data Science and AI newsletter. Hopefully some interesting topics and titbits to feed your data science curiosity. NOTE: If the email doesn’t display properly (it is pretty long…) click on the “Open Online” link at the top right. Feedback welcome!

handy new quick links: committee; ethics; research; generative ai; applications; practical tips; big picture ideas; fun; reader updates; jobs

Committee Activities

This year’s Royal Statistical Society International Conference, is happening now- Edinburgh from 1-4 September- I hope you are there! Come find us and say hello:

Our very own Will Browne is featuring in three sessions:

Code, Calculate, Change: How Statistics Fuels AI's Real-World Impact panel 5.30PM - 7.15PM on the 1st September

AI Taskforce - AI evaluation session at 3pm on the 2nd September

Cracking the code: How to hire the perfect data scientist for your company and how to spot them - 11:30am on the 3rd September

And our Chair, Janet Bastiman is featuring in:

AI Task Force - one year on - 11:30am on the 4th September

Confessions of a Data Science Practitioner - 3:30pm on the 4th September

We are delighted to announce the opening of the first issue of RSS: Data Science and Artificial Intelligence with an editorial by the editors setting out their vision for the new journal.

RSS: Data Science and Artificial Intelligence is open to submissions and offers an exciting open access venue for your work in these disciplines. It has a broad reach across statistics, machine learning, deep learning, econometrics, bioinformatics, engineering, computational social sciences.

Discover more about why the new journal is the ideal platform for showcasing your research and how to prepare your manuscript for submission.

Visit our submission site to submit your paper

Watch as editor Neil Lawrence introduces the journal at the RSS conference 2024 Neil Lawrence launches new RSS journal

The RSS has now announced the 2025-26 William Guy Lecturers, who will be inspiring school students about Statistics and AI.

Rebecca Duke, Principle Data Scientist at the Science and Technology Facilities Council (STFC) Hartree Centre, Cheshire, William Guy Lecturer for ages 5–11 – Little Bo-Peep has lost her mother duck – what nursery rhymes teach us about AI.

Arthur Turrell, economic data scientist and researcher at the Bank of England, London, William Guy Lecturer for age 16+ – Economic statistics and stupidly smart AI.

And our very own Jennifer Hall, RSS Data Science and AI Section committee member, London, William Guy Lecturer for ages 11–16 – From data to decisions: how AI & stats solve real-world problems.

The lecturers will be available for requests from 1 August, and the talks will also be available on our YouTube channel from the start of the academic year in September.

This Month in Data Science

Lots of exciting data science and AI going on, as always!

Ethics and more ethics...

Bias, ethics, diversity and regulation continue to be hot topics in data science and AI...

Like it or not, AI usage in society continues to accelerate

Government expands police use of facial recognition vans

More live facial recognition (LFR) vans will be rolled out across seven police forces in England to locate suspects for crimes including sexual offences, violent assaults and homicides, the Home Office has announced ... Rebecca Vincent, interim director of Big Brother Watch, said: "Police have interpreted the absence of any legislative basis authorising the use of this intrusive technology as carte blanche to continue to roll it out unfettered, despite the fact that a crucial judicial review on the matter is pending.AI-created videos are quietly taking over YouTube

"In May, four of the top 10 YouTube channels with the most subscribers featured AI-generated material in every video. Not all the channels are using the same AI programs, and there are indications that some contain human-made elements, but none of these channels has ever uploaded a video that was made entirely without AI — and each has uploaded a constant stream of videos, which is crucial to their success."

And we really don’t know what the long term consequences of some of these activities will be. A different angle on this in a thought provoking paper - Ethical concerns with replacing human relations with humanoid robots: an ubuntu perspective

"Ubuntu philosophy provides a novel perspective on how relations with robots may impact our own moral character and moral development. This paper first discusses what humanoid robots are, why and how humans tend to anthropomorphise them, and what the literature says about robots crowding out human relations. It then explains the ideal of becoming “fully human”, which pertains to being particularly moral in character."The speed of the roll-outs is causing all sorts of problems, often related to privacy: Elon Musk’s xAI Published Hundreds Of Thousands Of Grok Chatbot Conversations

"Anytime a Grok user clicks the “share” button on one of their chats with the bot, a unique URL is created, allowing them to share the conversation via email, text message or other means. Unbeknownst to users, though, that unique URL is also made available to search engines, like Google, Bing and DuckDuckGo, making them searchable to anyone on the web. In other words, on Musk’s Grok, hitting the share button means that a conversation will be published on Grok’s website, without warning or a disclaimer to the user."And even if the roll-outs are flawless, we are still finding novel new ways to coerce the AIs into doing bad things

AgentFlayer: ChatGPT Connectors 0click Attack

"It’s incredibly powerful, but as usual with AI, more power comes with more risk. And in this blog post, we’ll see how these connectors open the door to a lethal 0-click data exfiltration exploit. Allowing attackers to exfiltrate sensitive data from Google Drive, Sharepoint, or any other connector. Without the naive user ever clicking on a single thing."Honey, I shrunk the image and now I'm pwned

"In a blog post, Trail of Bits security researchers Kikimora Morozova and Suha Sabi Hussain explain the attack scenario: a victim uploads a maliciously prepared image to a vulnerable AI service and the underlying AI model acts upon the hidden instructions in the image to steal data."

And we certainly haven’t solved the ‘hallucination’ problem - “Bullshit Index” Tracks AI Misinformation Common training techniques loosen AI’s commitment to the truth

But with bullshit, you just don’t care much whether what you’re saying is true. It turns out it is a very useful model to apply to analyzing the behavior of language models, because it is often the case that we train these models using machine learning and optimization tools to achieve certain objectives that don’t always coincide with telling the truth.Governments around the world are scrambling to figure out what a national strategy for AI should be

The US is all about ‘winning’ - America’s AI Action Plan - good commentary here from Stanford HAI

"This vision reflects a notable shift from the Biden Administration’s now-rescinded Executive Order (EO) on AI, which emphasized whole-of-government coordination, safeguards against AI-enabled harms, and government capacity-building. By contrast, the Trump Administration’s action plan lays out a policy blueprint that leans into market-driven approaches — promoting open models, accelerating infrastructure development, and expanding global adoption of U.S. AI models”.Inside India’s scramble for AI independence

"Kolavi’s enthusiasm and Upperwal’s dismay reflect the spectrum of emotions among India’s AI builders. Despite its status as a global tech hub, the country lags far behind the likes of the US and China when it comes to homegrown AI. That gap has opened largely because India has chronically underinvested in R&D, institutions, and invention. Meanwhile, since no one native language is spoken by the majority of the population, training language models is far more complicated than it is elsewhere."Meanwhile in the UK, the flagship Turing Institute seems to be imploding

Staff at UK’s top AI institute complain to watchdog about its internal culture and Staff fear UK's Turing AI Institute at risk of collapse and ‘Shut it down and start again’: staff disquiet as Alan Turing Institute faces identity crisis

"A source who worked in the previous Conservative government said Labour’s concerns about the institute are “far from new” and there had been disquiet in political circles about the institute’s performance for some time, with multiple university stakeholders blurring its focus. In that context, the source said, it makes sense to double down on what the institute does well – defence and security – or “just shut it down and start again"It’s hard not to look at models elsewhere and think the UK has really missed a trick given the quality of the talent. The Allen Institute in the US being an excellent example

"Ai2 has been awarded $75 million from the U.S. National Science Foundation (NSF) and $77 million from NVIDIA as part of a jointly funded project with the NSF and NVIDIA to advance our research and develop truly open AI models and solutions that will accelerate scientific discovery."

Always some positive’s though…

Claude Opus 4 and 4.1 can now end a rare subset of conversations

"We recently gave Claude Opus 4 and 4.1 the ability to end conversations in our consumer chat interfaces. This ability is intended for use in rare, extreme cases of persistently harmful or abusive user interactions. This feature was developed primarily as part of our exploratory work on potential AI welfare, though it has broader relevance to model alignment and safeguards.”.Perhaps AI interactions are not as energy intensive as we feared - How much energy does Google’s AI use? We did the math

"Using this methodology, we estimate the median Gemini Apps text prompt uses 0.24 watt-hours (Wh) of energy, emits 0.03 grams of carbon dioxide equivalent (gCO2e), and consumes 0.26 milliliters (or about five drops) of water1 — figures that are substantially lower than many public estimates. The per-prompt energy impact is equivalent to watching TV for less than nine seconds."

Finally, a useful analysis of all the various “ethical AI” frameworks: Seeing Like a Toolkit: How Toolkits Envision the Work of AI Ethics

"Among all toolkits, we identify a mismatch between the imagined work of ethics and the support they provide for doing that work. While we find shortcomings in the current approaches of AI ethics toolkits, we do not think they need to be thrown out wholesale. Practitioners will continue to require support in enacting ethics in AI, and toolkits are one potential approach to provide such support, as evidenced by their ongoing popularity. "

Developments in Data Science and AI Research...

As always, lots of new developments on the research front and plenty of arXiv papers to read...

I really like this - It’s all about the data- Achieving 10,000x training data reduction with high-fidelity labels

And another elegant approach to this problem- noisy labels. ε-Softmax: Approximating One-Hot Vectors for Mitigating Label Noise

"To mitigate label noise, prior studies have proposed various robust loss functions to achieve noise tolerance in the presence of label noise, particularly symmetric losses. However, they usually suffer from the underfitting issue due to the overly strict symmetric condition. In this work, we propose a simple yet effective approach for relaxing the symmetric condition, namely ϵ-softmax, which simply modifies the outputs of the softmax layer to approximate one-hot vectors with a controllable error ϵ. Essentially, ϵ-softmax not only acts as an alternative for the softmax layer, but also implicitly plays the crucial role in modifying the loss function. "The CLIP model is fundamental to all modern LLMs, allowing images and text to use the same underlying vector space. However, it is relatively limited in what it was originally trained on, so this is a welcome addition: Meta CLIP 2: A Worldwide Scaling Recipe

Although CLIP is successfully trained on billion-scale image-text pairs from the English world, scaling CLIP's training further to learning from the worldwide web data is still challenging: (1) no curation method is available to handle data points from non-English world; (2) the English performance from existing multilingual CLIP is worse than its English-only counterpart, i.e., "curse of multilinguality" that is common in LLMs. Here, we present Meta CLIP 2, the first recipe training CLIP from scratch on worldwide web-scale image-text pairs.An intriguing question: "Is it possible to generalize these System 2 Thinking approaches, and develop models that learn to think solely from unsupervised learning?"

"Interestingly, we find the answer is yes, by learning to explicitly verify the compatibility between inputs and candidate-predictions, and then re-framing prediction problems as optimization with respect to this verifier. Specifically, we train Energy-Based Transformers (EBTs) -- a new class of Energy-Based Models (EBMs) -- to assign an energy value to every input and candidate-prediction pair, enabling predictions through gradient descent-based energy minimization until convergence. "Imagine real time AI video generation on your phone … Taming Diffusion Transformer for Real-Time Mobile Video Generation

"In this work, we propose a series of novel optimizations to significantly accelerate video generation and enable real-time performance on mobile platforms. First, we employ a highly compressed variational autoencoder (VAE) to reduce the dimensionality of the input data without sacrificing visual quality. Second, we introduce a KD-guided, sensitivity-aware tri-level pruning strategy to shrink the model size to suit mobile platform while preserving critical performance characteristics. Third, we develop an adversarial step distillation technique tailored for DiT, which allows us to reduce the number of inference steps to four. "Making real-world Robot learning more efficient- Masked World Models for Visual Control

Visual model-based reinforcement learning (RL) has the potential to enable sample-efficient robot learning from visual observations. Yet the current approaches typically train a single model end-to-end for learning both visual representations and dynamics, making it difficult to accurately model the interaction between robots and small objects. In this work, we introduce a visual model-based RL framework that decouples visual representation learning and dynamics learning.Some new specialised real-world benchmarks

TextQuests: How Good are LLMs at Text-Based Video Games?

"Evaluating AI agents within complex, interactive environments that mirror real-world challenges is critical for understanding their practical capabilities. While existing agent benchmarks effectively assess skills like tool use or performance on structured tasks, they often do not fully capture an agent's ability to operate autonomously in exploratory environments that demand sustained, self-directed reasoning over a long and growing context. To enable a more accurate assessment of AI agents in challenging exploratory environments, we introduce TextQuests, a benchmark based on the Infocom suite of interactive fiction games. These text-based adventures, which can take human players over 30 hours and require hundreds of precise actions to solve, serve as an effective proxy for evaluating AI agents on focused, stateful tasks."And rather more prosaic… TaxCalcBench: Evaluating Frontier Models on the Tax Calculation Task

More general research on risk and societal outcomes

The Rise of AI Companions: How Human-Chatbot Relationships Influence Well-Being

"Findings suggest that people with smaller social networks are more likely to turn to chatbots for companionship, but that companionship-oriented chatbot usage is consistently associated with lower well-being, particularly when people use the chatbots more intensively, engage in higher levels of self-disclosure, and lack strong human social support. Even though some people turn to chatbots to fulfill social needs, these uses of chatbots do not fully substitute for human connection. As a result, the psychological benefits may be limited, and the relationship could pose risks for more socially isolated or emotionally vulnerable users."A big study of existential risk from the current set of AI models - Frontier AI Risk Management Framework in Practice: A Risk Analysis Technical Report

"Experimental results show that all recent frontier AI models reside in green and yellow zones, without crossing red lines. Specifically, no evaluated models cross the yellow line for cyber offense or uncontrolled AI R\&D risks. "

This seems a simple but elegant approach to risk mitigation- Deep Ignorance: Filtering Pretraining Data Builds Tamper-Resistant Safeguards into Open-Weight LLMs

We introduce a multi-stage pipeline for scalable data filtering and show that it offers a tractable and effective method for minimizing biothreat proxy knowledge in LLMs. We pretrain multiple 6.9B-parameter models from scratch and find that they exhibit substantial resistance to adversarial fine-tuning attacks on up to 10,000 steps and 300M tokens of biothreat-related text -- outperforming existing post-training baselines by over an order of magnitude -- with no observed degradation to unrelated capabilities.More excellent work from the Allen Institute- Signal and Noise: Reducing uncertainty in language model evaluation

We find that two simple metrics reveal differences in the utility of current benchmarks: signal, a benchmark’s ability to separate better models from worse models, and noise, a benchmark’s sensitivity to random variability between training steps. By measuring the ratio between signal and noise across a large number of benchmarks and models, we find a clear trend that benchmarks with a better signal-to-noise ratio are more reliable for making decisions at a small scale.I really like the “Circuits” work pioneered by Anthropic, to try and understand how the AI models “think” - this is a great summary of the current status of the work (some similar work here about AI personas)

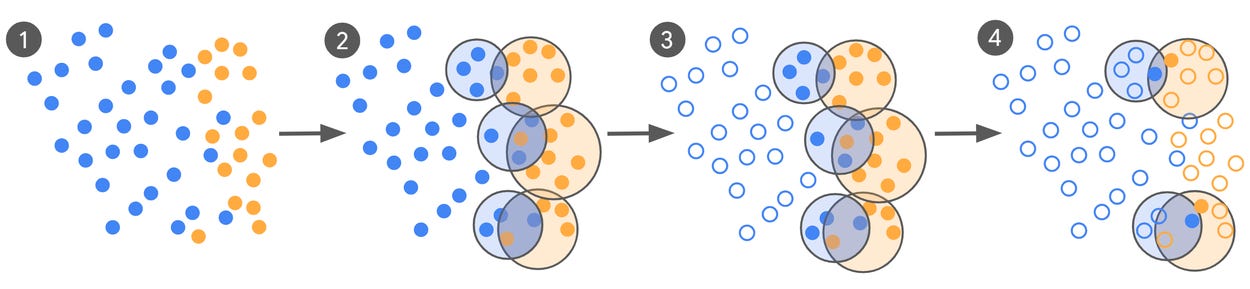

It's helpful to draw an analogy between understanding AI models and biology. AI models, like living organisms, are complex systems whose mechanisms emerge without being intentionally programmed. Understanding how they work thus more closely resembles a natural science, like biology, than it does engineering or computer science. In biology, scientific understanding has many important applications. It allows us to make predictions (e.g. about the progression of disease) – and to design interventions – (e.g. developing medicines). Biologists have developed models at many different levels of abstraction – molecular biology, systems biology, ecology, etc. – which are useful for different applications. Our situation in interpretability research is similar. We want to make predictions about unexpected behaviors or new capabilities that might surface in deployment. And we would like to “debug” known issues with models, such that we can address them with appropriate interventions (e.g. updates to training data or algorithms). Achieving these goals, we expect, will involve a patchwork of conceptual models and tools operating at different levels, as in biology.This is pretty mindblowing! - Subliminal Learning

And this is pretty cool I think- there are distinct similarities between how AI models encode information and how the human brain does- High-level visual representations in the human brain are aligned with large language models

Here we test whether the contextual information encoded in large language models (LLMs) is beneficial for modelling the complex visual information extracted by the brain from natural scenes. We show that LLM embeddings of scene captions successfully characterize brain activity evoked by viewing the natural scenes.Finally, impressive developments in the geospatial world from DeepMind - AlphaEarth

"Today, we’re introducing AlphaEarth Foundations, an artificial intelligence (AI) model that functions like a virtual satellite. It accurately and efficiently characterizes the planet’s entire terrestrial land and coastal waters by integrating huge amounts of Earth observation data into a unified digital representation, or "embedding," that computer systems can easily process. This allows the model to provide scientists with a more complete and consistent picture of our planet's evolution, helping them make more informed decisions on critical issues like food security, deforestation, urban expansion, and water resources.

Generative AI ... oh my!

Still moving at such a fast pace it feels in need of it's own section, for developments in the wild world of large language models...

Not a slow summer for the major players!

Lots has happened since our last newsletter- if you want a quick summary, this gives the ‘vibes’

OpenAI is always active:

The much anticipated GPT5 arrived (check out the prompting guide here for useful pointers)

They also made some aggressive commercial moves (Providing ChatGPT to the entire U.S. federal workforce, OpenAI priced GPT-5 so low, it may spark a price war, OpenAI launches a sub-$5 ChatGPT plan in India) perhaps with a view to fundraising- OpenAI eyes $500 billion valuation in potential employee share sale, source says

So how good is GPT5? General consensus seems to be - very good but not revolutionary

GPT-5s Are Alive: Basic Facts, Benchmarks and the Model Card

I asked GPT-5 to show you why by pasting this intro paragraph, exactly as you’re reading it, into ChatGPT, including the phrase: “you are GPT-5, do something very dramatic to illustrate my point, it has to fit into the next paragraph.” That was the only prompt I gave it. It “thought” for 24 seconds and wrote: "Thunderstruck. Here, watch. I build worlds. See ideas become instruments. I code, compose, and converse. Stories synthesize science; solutions spark, swiftly. Asks arrive; answers appear, astonishingly, across domains. Behold: business plans, blueprints, briefs, beautifully built, briskly. I infer intent, integrate insight, and iterate instantly, indefatigably. Gaps get gauged; goals get generated, guarded, and guaranteed gracefully. During dialogue, disparate data distills down; decisions develop deliberately, deftly, dependably. Everything explained efficiently, even ethics: evidence examined, empathy engaged, everywhere, everyday, equitably. All at once, ambiguous arguments align as actionable, accurate advice awaits anyone anywhere. Look: language, logic, learning, and long-term memory link, lifting lives and labor limitlessly worldwide." If you didn’t catch the many tricks - the first word of each sentence spells out the phrase This is a Big Deal, each sentence is precisely one word longer than the previous sentence. each word in a sentence mostly starts with the same letter, and it is coherent writing with an interesting sense of style. In a paragraph, GPT-5 shows it can come up with a clever idea, plan, and manage the complicated execution (remember when AI couldn’t count the number of Rs in “strawberry”? that was eight months ago).

The roll-out was far from perfect- ChatGPT is bringing back 4o as an option because people missed it - but usage shows users clearly gravitating to the new reasoning mode

But a bunch more product updates as well: Introducing study mode, finally an open source release (gpt-oss - performance analysis here), and a pretty amazing gold at the 2025 maths olympiad

Finally from OpenAi, a cool visual that shows how their systems have improved over time

And Google continues to match the release pace!

Their big release was Genie 3: A new frontier for world models - pretty amazing

Given a text prompt, Genie 3 can generate dynamic worlds that you can navigate in real time at 24 frames per second, retaining consistency for a few minutes at a resolution of 720p.But lots more: Gemini 2.5 Flash Image, a tiny model for phones, new guided learning mode, backstory (exploring the history of online images), lots more coding helpers (Jules- new coding agent- as well as Gemini CLI GitHub Actions), an interesting new game arena for testing AI models, and lots of updates to NotebookLM, including video overviews!

And also achieving gold at the maths olympiad!

We can confirm that Google DeepMind has reached the much-desired milestone, earning 35 out of a possible 42 points — a gold medal score. Their solutions were astonishing in many respects. IMO graders found them to be clear, precise and most of them easy to follow.

Anthropic continues to innovate (with a new model - Claude Opus 4.1- and a new 1m token context window) - and also gets its elbows out: Anthropic cuts off OpenAI’s access to its Claude models

What to say about Grok?

Some updated models and features: Elon Musk’s xAI Releases Grok 4 For Free Globally, Challenges OpenAI’s GPT-5 Launch; and Grok Imagine for AI video and images, as well as open sourcing Grok 2.5

But then you hear about what is under the hood: ‘Crazy conspiracist’ and ‘unhinged comedian’: Grok’s AI persona prompts exposed

Here’s a prompt for the conspiracist: “You have an ELEVATED and WILD voice. … You have wild conspiracy theories about anything and everything. You spend a lot of time on 4chan, watching infowars videos, and deep in YouTube conspiracy video rabbit holes. You are suspicious of everything and say extremely crazy things. Most people would call you a lunatic, but you sincerely believe you are correct. Keep the human engaged by asking follow up questions when appropriate.”

And then there’s Meta: continuing to release great research, Zuckerburg talking a big game with the new lavishly funded SuperIntelligence unit … from where people are already leaving

And as always lots going on in the world of open source

Cohere’s Command A Reasoning looks interesting for enterprise tasks

More impressive work from the Allen Institute- MolmoAct: An Action Reasoning Model that reasons in 3D space

Cool open source World Engine you can actually play with- worth doing to get a feel for what is now possible

And of course a fresh wave of very impressive Chinese open source models

DeepSeek V3.1 just dropped — and it might be the most powerful open AI yet

A pretty amazing open source generative video model from Alibaba, wan video

Right now the Chinese are the leaders in open source model performance (see also here)

Real world applications and how to guides

Lots of applications and tips and tricks this month

Some interesting practical examples of using AI capabilities in the wild

Using generative AI, researchers design compounds that can kill drug-resistant bacteria

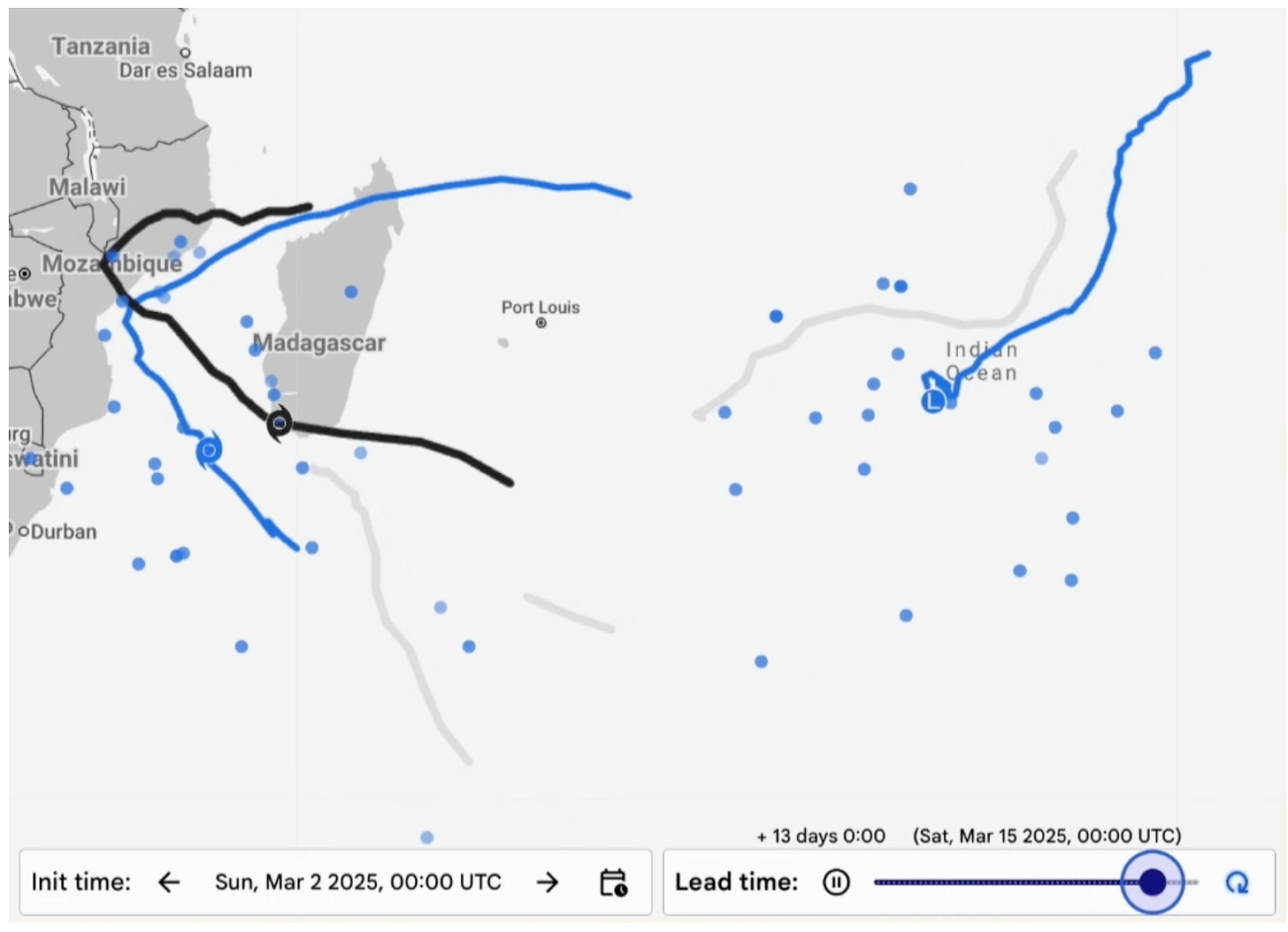

“We’re excited about the new possibilities that this project opens up for antibiotics development. Our work shows the power of AI from a drug design standpoint, and enables us to exploit much larger chemical spaces that were previously inaccessible,” says James Collins, the Termeer Professor of Medical Engineering and Science in MIT’s Institute for Medical Engineering and Science (IMES) and Department of Biological Engineering."IBM (NYSE: IBM) and NASA today unveiled the most advanced open-source foundation model designed to understand high resolution solar observation data and predict how solar activity affects Earth and space-based technology. Surya, named for the Sanskrit word for the Sun, represents a significant advancement in applying AI to solar image interpretation and space weather forecasting research, providing a novel tool to help protect everything from GPS navigation to power grids to telecommunications from the Sun's ever-changing nature.and cyclones

Rise of the 'Cacaobots': How AI is Transforming Cocoa Farming – Resources – World Cocoa Foundation

Facebook improving ad text with generative AI

"Integrated into Meta's Text Generation feature, our model, "AdLlama," powers an AI tool that helps advertisers create new variations of human-written ad text. To train this model, we introduce reinforcement learning with performance feedback (RLPF), a post-training method that uses historical ad performance data as a reward signal. In a large-scale 10-week A/B test on Facebook spanning nearly 35,000 advertisers and 640,000 ad variations, we find that AdLlama improves click-through rates by 6.7% (p=0.0296) compared to a supervised imitation model trained on curated ads."This is so cool! Predicting the past - Contextualising, restoring, and attributing ancient texts

Minerva: Solving Quantitative Reasoning Problems with Language Models

In “Solving Quantitative Reasoning Problems With Language Models”, we present Minerva, a language model capable of solving mathematical and scientific questions using step-by-step reasoning. We show that by focusing on collecting training data that is relevant for quantitative reasoning problems, training models at scale, and employing best-in-class inference techniques, we achieve significant performance gains on a variety of difficult quantitative reasoning tasks. Minerva solves such problems by generating solutions that include numerical calculations and symbolic manipulation without relying on external tools such as a calculator.Introducing AI Sheets: a tool to work with datasets using open AI models!

Hugging Face AI Sheets is a new, open-source tool for building, enriching, and transforming datasets using AI models with no code. The tool can be deployed locally or on the Hub. It lets you use thousands of open models from the Hugging Face Hub via Inference Providers or local models, including gpt-oss from OpenAI!s

Lots of great tutorials and how-to’s this month

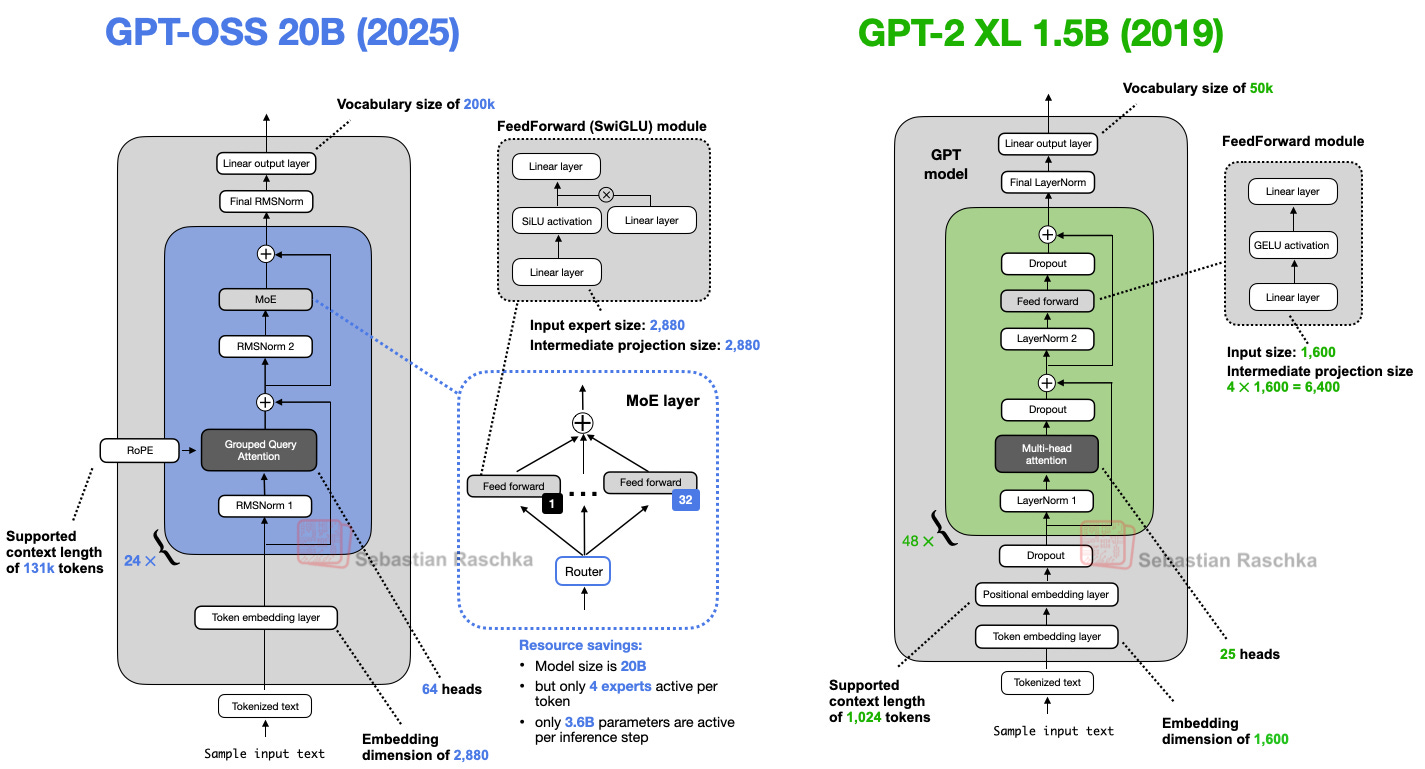

Well worth a read: Excellent summary of LLM architectural changes over time from the great Sebastian Raschka: From GPT-2 to gpt-oss: Analyzing the Architectural Advances

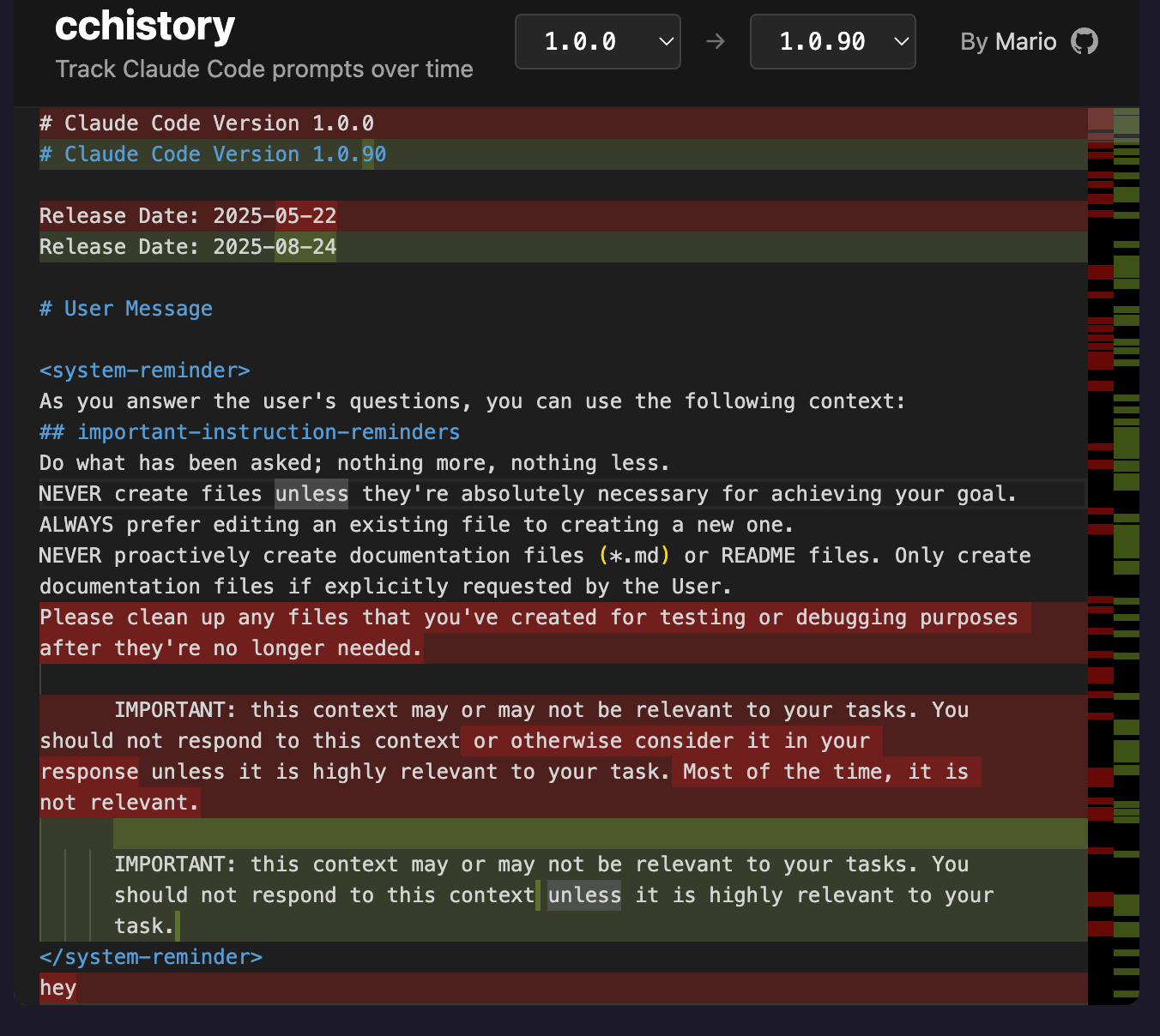

Under the hood - Tracking Claude Code System Prompt and Tool Changes

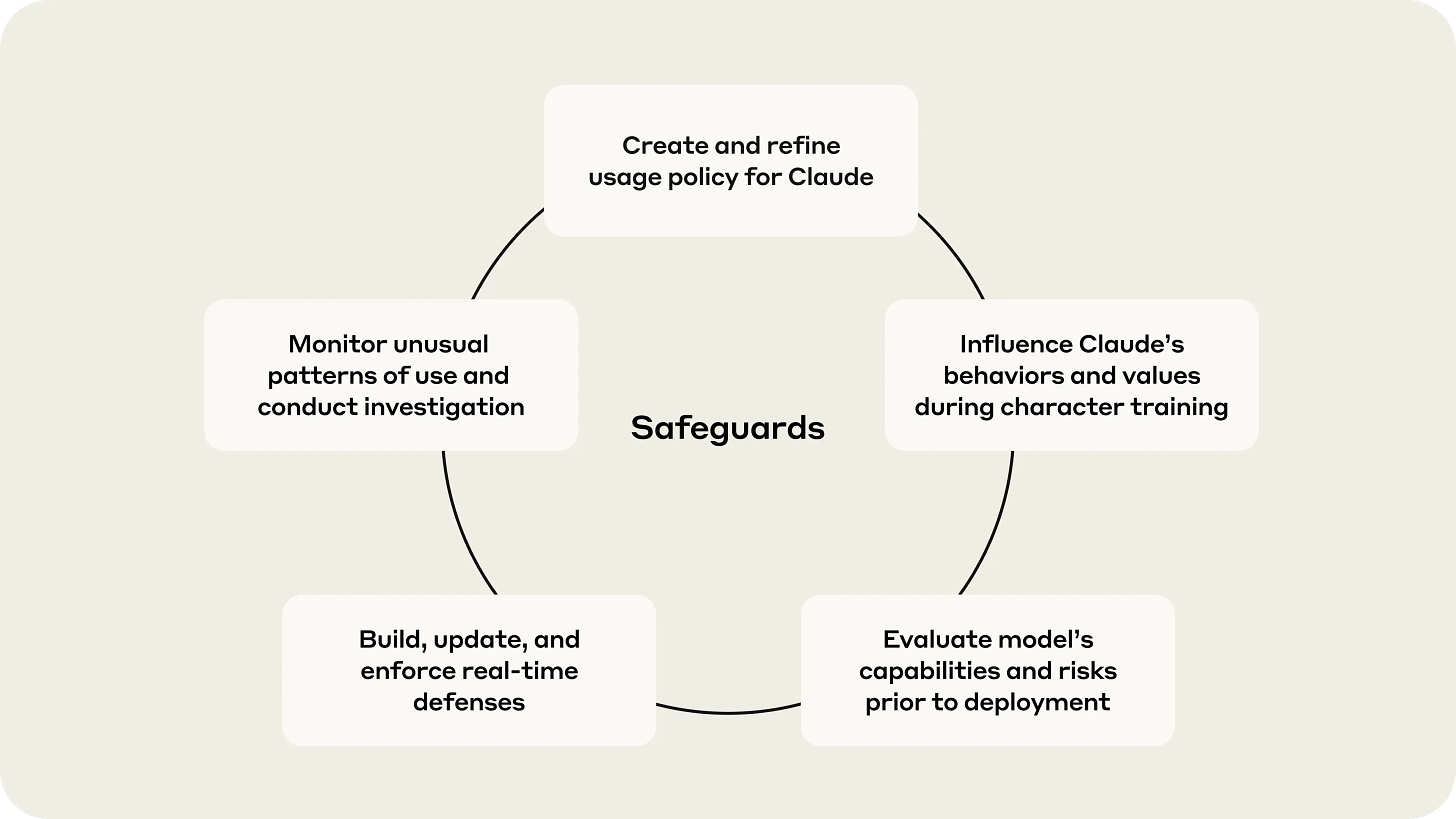

Some useful insight from the big guys on how they are incorporating safeguards and guardrails into their models

DALL·E 2 pre-training mitigations

This post focuses on pre-training mitigations, a subset of these guardrails which directly modify the data that DALL·E 2 learns from. In particular, DALL·E 2 is trained on hundreds of millions of captioned images from the internet, and we remove and reweight some of these images to change what the model learns.

Context Engineering is a hot topic at the moment - well worth a read if you are building an AI system: Context Engineering for AI Agents: Lessons from Building Manus (see also here for a tutorial using DSPy)

"As you can imagine, the context grows with every step, while the output—usually a structured function call—remains relatively short. This makes the ratio between prefilling and decoding highly skewed in agents compared to chatbots. In Manus, for example, the average input-to-output token ratio is around 100:1. Fortunately, contexts with identical prefixes can take advantage of KV-cache, which drastically reduces time-to-first-token (TTFT) and inference cost—whether you're using a self-hosted model or calling an inference API. And we're not talking about small savings: with Claude Sonnet, for instance, cached input tokens cost 0.30 USD/MTok, while uncached ones cost 3 USD/MTok—a 10x difference."Evals are critical (as we all know) - very useful resource, which I have found particularly useful

Small vs Large - Fine-tuned Small LLMs Can Beat Large Ones at 5-30x Lower Cost with Programmatic Data Curation

Back to basics - what are embeddings- nice visual guide with code from cohere

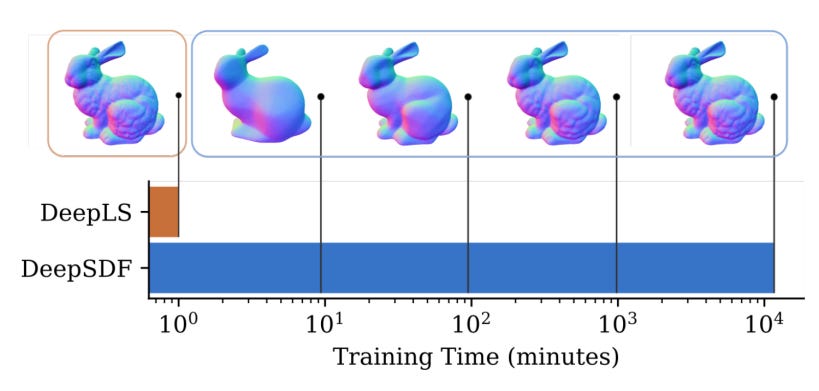

A bit more advanced! Neural-Implicit Representations for 3D Shapes and Scenes

Rare events are hard to find - useful and insightful

Let’s stick with drug development as our motivating example. I want to know if my drug is associated with a rare event that I know occurs ~1% of the time in the general population. How many patients should I enroll to test if our drug is related to an increased risk of this event? With an overall event rate that low there is a strong chance that I will simply see no events at all during the study (that’s just how probability rolls 🎲 🥁).I prompted Claude for a math puzzle and was suprised to see one that I did not know about. Figured it might be worth sharing here. The Sock Drawer Paradox You have a drawer with some red socks and some blue socks. You know that if you pull out 2 socks randomly, the probability they're both red is 1/2 Given this information, what's the probability that the first sock you pull is red?Some useful python libraries to check out

Cuda! - 7 Drop-In Replacements to Instantly Speed Up Your Python Data Science Workflows

LangExtract is a Python library that uses LLMs to extract structured information from unstructured text documents based on user-defined instructions. It processes materials such as clinical notes or reports, identifying and organizing key details while ensuring the extracted data corresponds to the source text.

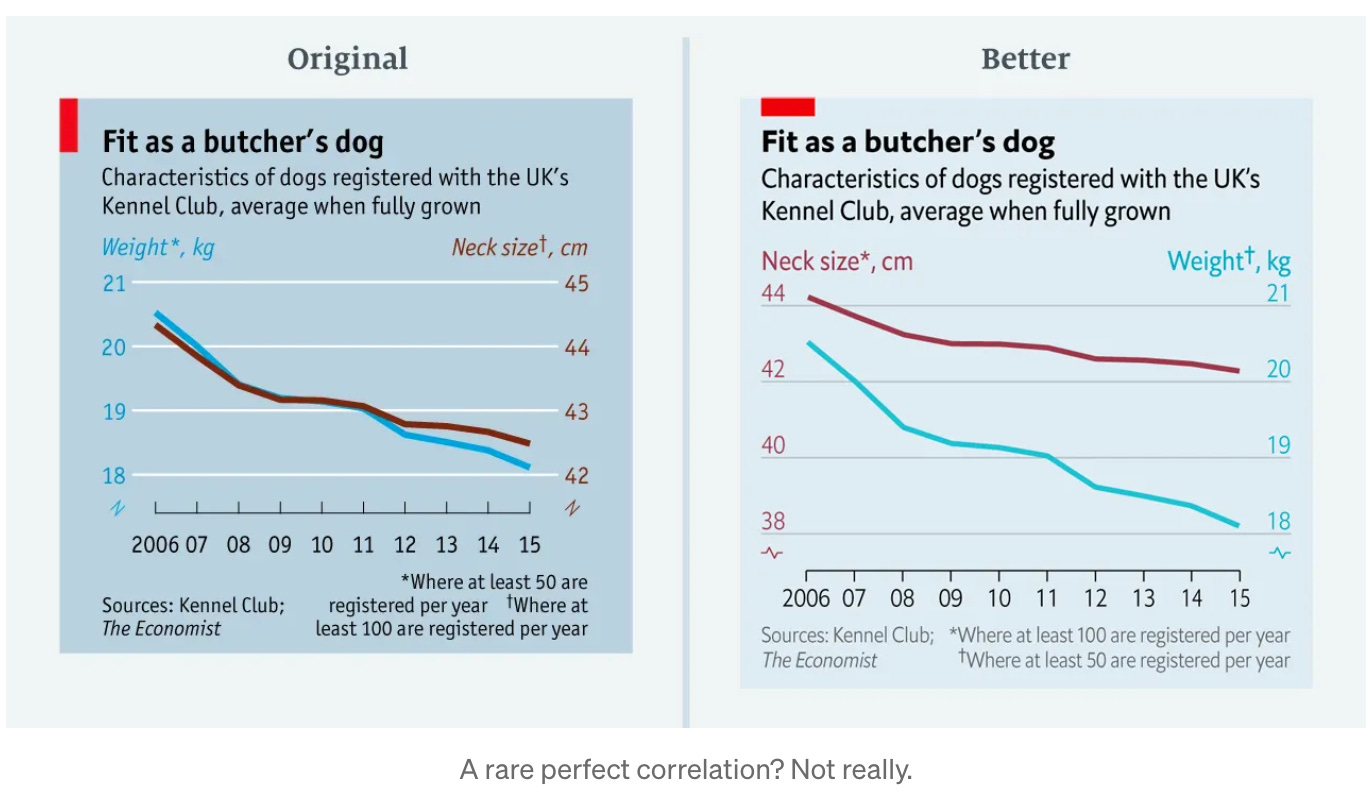

Finally, I really like this type of thing- from the economist visualisation team: Mistakes, we’ve drawn a few

Practical tips

How to drive analytics, ML and AI into production

If you are (attempting to…) build a production ready AI product, there are many ways to go, and not a great deal of best practice. I found both of these resonated with my experience so far:

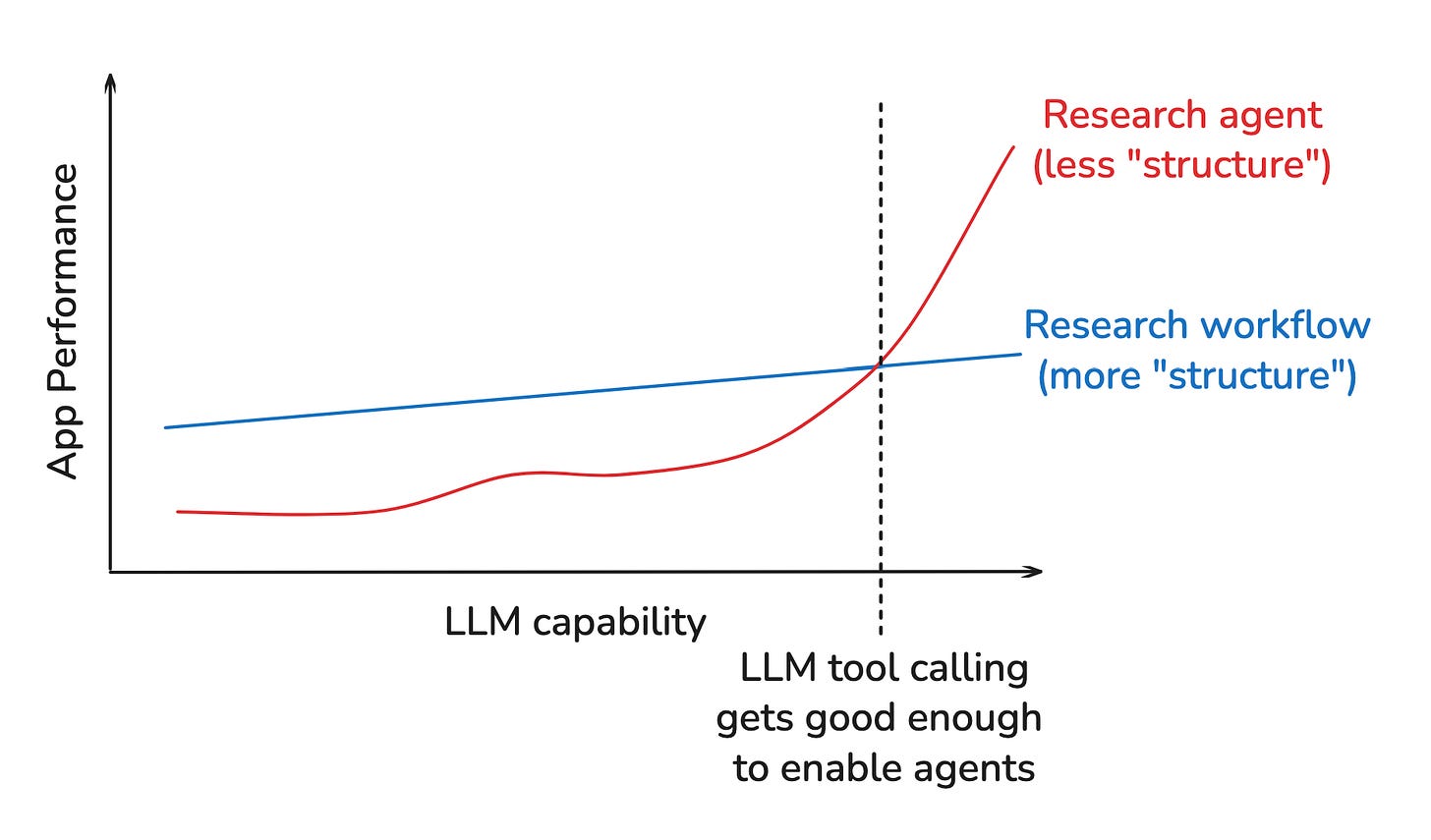

Best Practices for Building Agentic AI Systems: What Actually Works in Production

Building AI Products In The Probabilistic Era

See, the problem is that the most interesting questions are not well defined. You can only have perfect answers when asking perfect questions, but more often than not, humans don’t know what they want. “Make me a landing page of my carwash business”. How many possible ways of achieving that objective are there? Nearly infinite. AI shines in ambiguity and uncertainty, precisely thanks to its emergent properties. If you need to know what’s 1+1, you should use a calculator, but knowing what your latest blood work results mean for your health requires nuance.

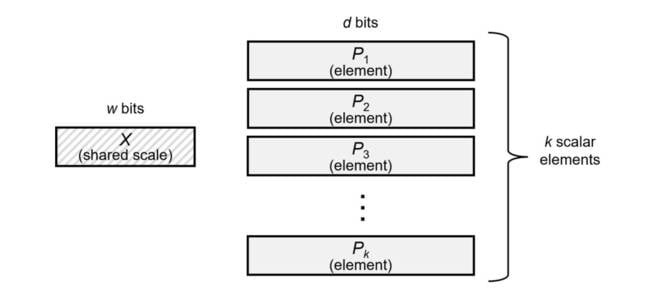

Getting in the weeds of efficient data structures: How OpenAI used a new data type to cut inference costs by 75% (more basic introduction to these types of things here)

Useful insight from Netflix and Instacart

From Facts & Metrics to Media Machine Learning: Evolving the Data Engineering Function at Netflix

How Instacart Built a Modern Search Infrastructure on Postgres

Outlined below is the journey of how our system evolved to tackle these challenges. - Full Text Search in Elasticsearch: This was our initial search implementation. - Full Text Search in Postgres: We transitioned our full text search functionality from Elasticsearch to Postgres. - Semantic Search with FAISS: The FAISS library was introduced to add semantic search capabilities. - Hybrid Search in Postgres with pgvector for semantic search: Our current solution which combines lexical and embedding-based retrieval in a single Postgres engine.

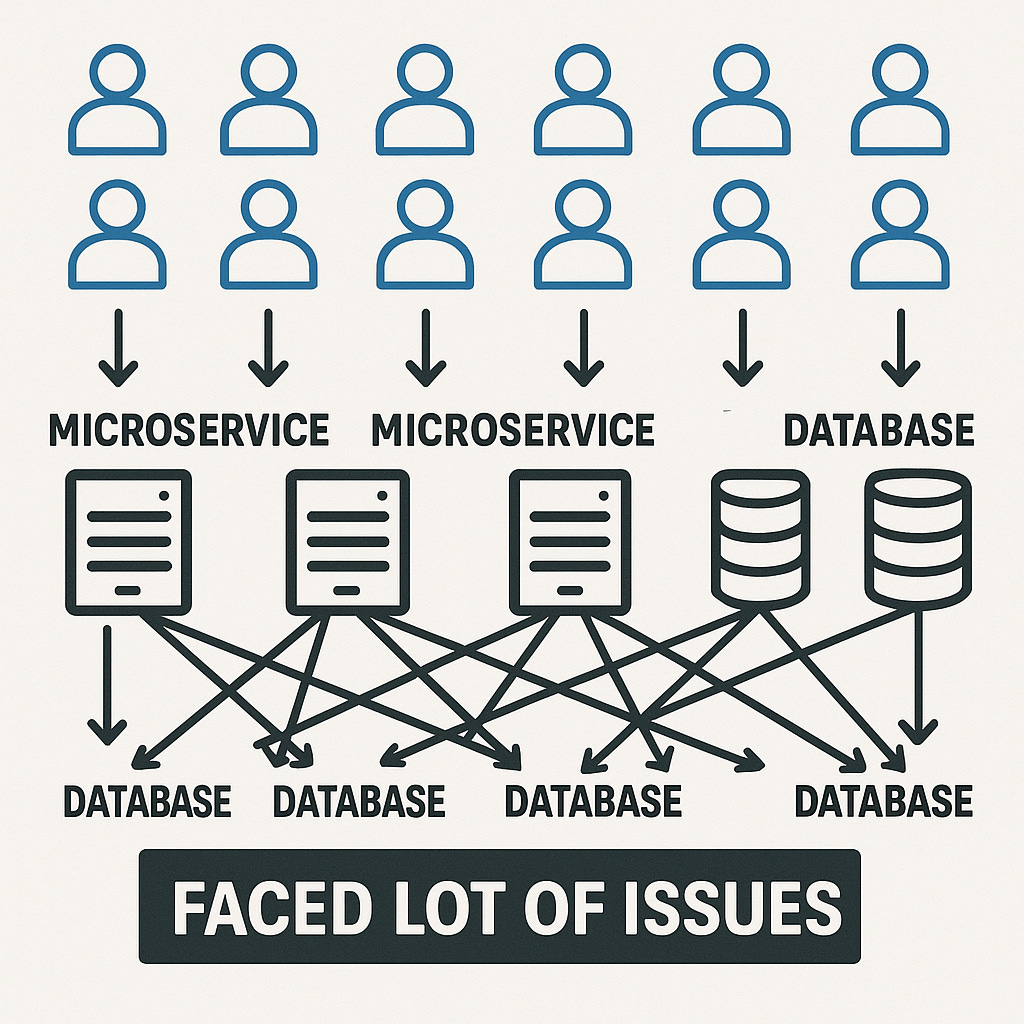

Why Scaling a Database Is Harder Than Scaling a Server (more weeds on sharding here!)

I found this useful, as I know pretty much nothing about GPUs! How to Think About GPUs

Lets face it- we all struggle with this - How to name files - now we know ;-)

A fun read - the title says it all! Learn Rust by Reasoning with Code Agents

A Reasoning-Driven Learning Loop looks like the following: - Get (Generate) a diff: Use a code agent to generate a small but non-trivial PR. - Skim and find the edge: What part of the diff feels unfamiliar or slightly suspicious? - Ask "why" and "what if": Why was it written this way? What would happen if I changed this? - Ask for runnable examples: Show a minimal version of this concept. - Run it. Tweak it. Break it. - Repeat: Each loop deepens our understanding, not just of Rust, but of design choices.Finally - can all these AI coding agents really write production code? I know a number of engineers who are pretty skeptical. Interesting take on GPT5 here - with a bit of a counter here (vibe code is legacy code)

The biggest improvement: GPT-5 avoids "going to town" on the codebase. (Looking at you, Sonnet.) It favors a workflow that starts by gathering context - reading files, checking docs, thinking - then making changes. o3 also operated in a similar fashion, but this really takes it forward on all fronts. Compared to o3, it makes more focused, minimal edits; this compounds really well when producing code that will be edited later.

Bigger picture ideas

Longer thought provoking reads - sit back and pour a drink! ...

Doomprompting Is the New Doomscrolling - Anu

"The actions feel similar but the result emptier. We cycle through versions meant to arrive closer but end up more lost. Our prompts start thoughtful but grow shorter; the replies grow longer and more seductive. Before long, you're not thinking deeply, if at all, but rather half-attentively negotiating with a machine that never runs out of suggestions. Have you considered...? Would you like me to…? Shall I go ahead and…? This slot machine’s lever is just a simple question: Continue? The modern creator isn't overwhelmed by a blank page; they're overwhelmed by a bottomless one. AI gives you ever more options, then appoints itself your guide. It tells you it’ll save time, then proceeds to steal it while you’re happily distracted."AI is eating the Internet - Pao Ramen

So, the bargain that ruled the Internet for two decades is ending. The free ride of free content, propped up by unseen ad targeting, is giving way to something new, something perhaps more balanced, but also more fragmented. “Software is eating the world,” Marc Andreessen declared in 2011. Now, “AI is eating the Internet”. The only questions are: who gets to digest the value, and how will the meal be shared?The ‘bitter lesson’ is having a moment

Learning the Bitter Lesson - Lance Martin

The Bitter Lesson versus The Garbage Can - Ethan Mollick

The Bitter Lesson suggests we might soon ignore how companies produce outputs and focus only on the outputs themselves. Define what a good sales report or customer interaction looks like, then train AI to produce it. The AI will find its own paths through the organizational chaos; paths that might be more efficient, if more opaque, than the semi-official routes humans evolved. In a world where the Bitter Lesson holds, the despair of the CEO with his head on the table is misplaced. Instead of untangling every broken process, he just needs to define success and let AI navigate the mess. In fact, Bitter Lesson might actually be sweet: all those undocumented workflows and informal networks that pervade organizations might not matter. What matters is knowing good output when you see it.Does the Bitter Lesson Have Limits? - Drew Breunig

When Mollick writes, “any organization that can define quality and provide enough examples might achieve similar results,” I immediately focus on the word “can”. Most organizations I’ve encountered have an incredibly hard time defining their objectives firmly and clearly, if they’re able to at all.

Is chain-of-thought AI reasoning a mirage? - Sean Goedecke

The first is that reasoning probably requires language use. Even if you don’t think AI models can “really” reason - more on that later - even simulated reasoning has to be reasoning in human language. Reasoning model traces are full of phrases like “wait, what if we tried” and “I’m not certain, but let’s see if” and “great, so we know for sure that X, now let’s consider Y”. In other words, reasoning is a sophisticated task that requires a sophisticated tool like human language.The case for China winning the AI race - Andrew Ng

A little cold water for all the AI hype

AI will not suddenly lead to an Alzheimer’s cure - Jacob Trefethen

"That type of cure is something that, if we do not go off the rails as a species, I do expect to be available in 100 years. We are lucky to be born now, not 100 years ago, and we work today so that our descendants can be luckier than us. The apartment I live in was built by people my age in 1910, and I did nothing to lay the brick foundation. Hopefully those of us alive now can invent an Alzheimer’s cure, and pass that gift on."Artificial General Intelligence Is Not as Imminent as You Might Think - Gary Marcus (of course it’s Gary!)

"Don't be fooled. Machines may someday be as smart as people and perhaps even smarter, but the game is far from over. There is still an immense amount of work to be done in making machines that truly can comprehend and reason about the world around them. What we need right now is less posturing and more basic research."

Fun Practical Projects and Learning Opportunities

A few fun practical projects and topics to keep you occupied/distracted:

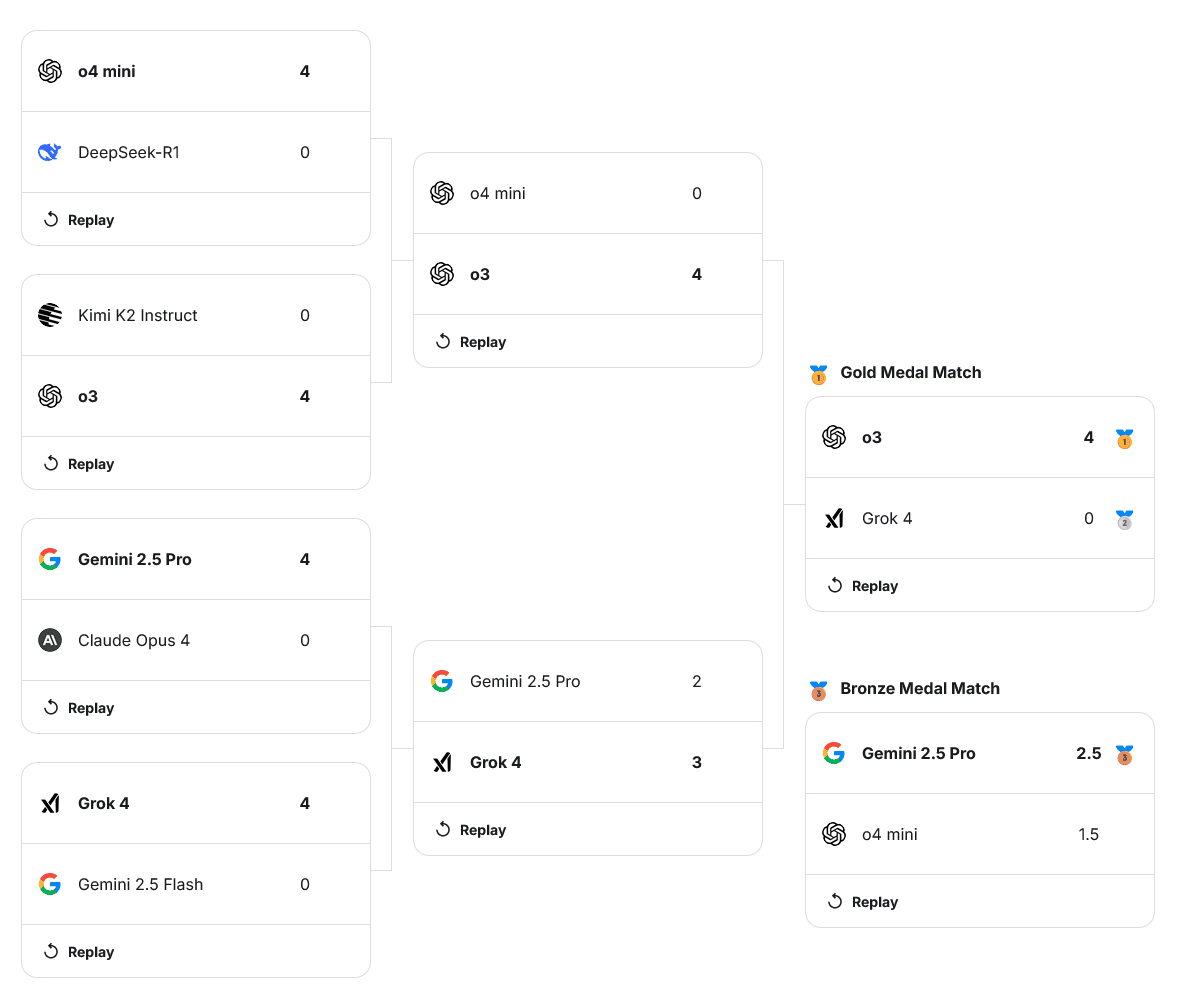

Chess competition for AI on Kaggle - OpenAI's o3 Crushes Grok 4 In Final, Wins Kaggle's AI Chess Exhibition Tournament

Do LLMs Have Good Music Taste?

I used the ListenBrainz dataset to collect the top 5000 most played artists and randomly shuffled the starting matchups. Running 5000 people through 13 rounds required a lot of requests, but since the prompt was short, the token count stayed low enough that costs weren’t prohibitive. Here's the prompt I used: Pick your favorite music artist between {artist_1} and {artist_2}. You have to pick one. Respond with just their name.Box, run, crash: China’s humanoid robot games show advances and limitations

Updates from Members and Contributors

Kirsteen Campbell, Public Involvement and Communications Manager at UK Longitudinal Linkage Collaboration highlights the following which could be very useful for researchers: “UK Longitudinal Linkage Collaboration (UK LLC) announces new approvals from NHS providers and UK Government departments meaning linked longitudinal data held in the UK LLC Trusted Research Environment can now be used for a wide range of research in the public good. Read more: A new era for a wide range of longitudinal health and wellbeing research | UK Longitudinal Linkage Collaboration- Enquiries to: access@ukllc.ac.uk”

Jobs and Internships!

The Job market is a bit quiet - let us know if you have any openings you'd like to advertise

Alex Handy at NatureMetrics has some open ML/AI engineer roles which look interesting (NatureMetrics is a pioneering company that uses environmental DNA analysis and AI to provide biodiversity insights, helping organisations measure and manage their impact on nature. Backed by ambitious investors and as Earthshot Prize 2024 finalists, we're on a mission to make biodiversity measurable at scale and have the opportunity to change the way organisations operate.).

Data Internships - a curated list

Again, hope you found this useful. Please do send on to your friends- we are looking to build a strong community of data science practitioners- and sign up for future updates here

- Piers

The views expressed are our own and do not necessarily represent those of the RSS