Hi everyone-

Well May flew by in a whirlwind of bank-holidays here in the UK and even some glimpses of sunshine! Now June is here, it’s definitely time for some ‘summer is coming’ distraction with a deep dive into all things Data Science and AI… Lots of great reading materials below and I really encourage you to read on, but here are the edited highlights if you are short for time!

Video with audio, from a single prompt: Veo3 - GoogleDeepMind

GenAI’s adoption puzzle - Benedict Evans

Personality and Persuasion - Ethan Mollick

AlphaEvolve - GoogleDeepMind

What is the collective noun for coding assistants? Maybe a ‘pizza box’? Well, we’ve had one this month with, Codex from OpenAI, Jules from Google, Devstral from Mistral and the open source DeepCoder from Together.ai

Following is the June edition of our Data Science and AI newsletter. Hopefully some interesting topics and titbits to feed your data science curiosity. NOTE: If the email doesn’t display properly (it is pretty long…) click on the “Open Online” link at the top right. Feedback welcome!

handy new quick links: committee; ethics; research; generative ai; applications; practical tips; big picture ideas; fun; reader updates; jobs

Committee Activities

Don’t miss out on our upcoming event, “Federated learning for statisticians and data scientists”, Wednesday 11 June 2025, 4.00PM - 5.00PM . Should be a great discussion covering:

What is federation and how does it differ from traditional meta analysis?

How does it work today?

How could it work in future and what would this enable?

What are the challenges to solve for federated analytics and federated learning?

The RSS has a new open access journal! - RSS: Data Science and Artificial Intelligence.

It offers an exciting venue for your work in these disciplines with a broad reach across statistics, machine learning, deep learning, econometrics, bioinformatics, engineering, computational social sciences. The journal is peer reviewed by editors esteemed in their field and is open to submission. Discover more about why the new journal is the ideal platform for showcasing your

The AI Task Force continues their work feeding into RSS AI policy:

There is a short survey that we would like as many of you to answer as possible (click here for survey). The responses to this survey will support the activities of our subgroups on policy, evaluation and practitioners, allowing us to prioritise activities.

We will be updating on these streams at the conference in September, so please do send in your thoughts. While there are no spaces on the task force at this time, we are always interested in any other input you may have and you can send thoughts to our inbox: aitf@rss.org.uk

It’s not too late to submit a talk or poster to the RSS 2025 conference!

Jennifer Hall, Lead Data Scientist at Aviva, continues her excellent interview series, “10 Key Questions to AI and Data Science Practitioners”, most recently with Mary Amanuel

Piers Stobbs, co-founder and Chief Data and AI Officer at Epic Life, spoke at the recent UCL HealthTech Conference, The Future of Digital Technology in Healthcare

Martin Goodson, CEO and Chief Scientist at Evolution AI, continues to run the excellent London Machine Learning meetup and is very active with events. The last event was on Wednesday, April 16th when Max Bartolo, Researcher at Cohere, gave a great presentation- Building Robust Enterprise-Ready Large Language Models - followed by a thought provoking question and answer session. Videos are posted on the meetup youtube channel.

This Month in Data Science

Lots of exciting data science and AI going on, as always!

Ethics and more ethics...

Bias, ethics, diversity and regulation continue to be hot topics in data science and AI...

With the current geopolitical turmoil, we are seeing moves away from AI regulation, lead by the US

Trump to scrap Biden's "AI diffusion rule" Thursday in win for chipmakers

Perhaps this is somehow related to… US tech firms Nvidia, AMD secure AI deals as Trump tours Gulf states?

"Among the biggest deals, Nvidia (NVDA.O), opens new tab said it will sell hundreds of thousands of AI chips in Saudi Arabia, with a first tranche of 18,000 of its newest "Blackwell" chips going to Humain, an AI startup just launched by Saudi Arabia's sovereign wealth fund. Chip designer Advanced Micro Devices (AMD.O), opens new tab also announced a deal with Humain, saying it has formed a $10 billion collaboration."Not only a a move away from specific AI regulation, but an attempt to ban the concept of AI regulation

“On Sunday night, House Republicans added language to the Budget Reconciliation bill that would block all state and local governments from regulating AI for 10 years, 404 Media reports. The provision, introduced by Representative Brett Guthrie of Kentucky, states that "no State or political subdivision thereof may enforce any law or regulation regulating artificial intelligence models, artificial intelligence systems, or automated decision systems during the 10 year period beginning on the date of the enactment of this Act.”Even the typically hawkish EU regulators are loosening up: EU Loosens AI Regulations - more detail: European Commission withdraws AI Liability Directive from consideration

"Virkkunen announced the EU would withdraw a provision that allowed citizens to sue AI companies for damages caused by their systems and required extensive reporting and disclosure. She advocated adjusting the regulations to make the EU more competitive and independent. “When we want to boost investments in AI, we have to make sure that we have an environment that is faster and simpler than the European Union is right now,” he said."And Trump seems to be paving the way to removing copyright obstacles to AI training: Trump administration fires top copyright official, just days after the release of a controversial report on Generative AI training from the Copyright Office

"Whether this report survives as “official” policy is uncertain. It may even be rescinded by the time you read this post. But its 50,000-plus words remain very much alive—alongside more than 40 generative AI copyright cases now pending in federal courts across the country. Judges, law clerks, and policymakers will read them. And on several hotly contested issues, the report speaks with unusual clarity—often siding with creators over the tech platforms whose tools are backed by an increasingly aggressive executive branch. Several of those platforms are now lobbying the Trump administration to declare it categorically lawful to use copyrighted works for AI training."And the UK Government is edging in the same direction, raising the ire of Elton John amongst others

"Sir Elton John described the government as "absolute losers" and said he feels "incredibly betrayed" over plans to exempt technology firms from copyright laws."

Of course all the leading AI firms keep informing us of all the wonderful ways they are keeping us safe:

Sharing new open source protection tools and advancements in AI privacy and security

"At Meta, we use AI to strengthen our security systems and defend against potential cyber attacks. We’ve heard from the community that they want access to AI-enabled tools that will help them do the same. That’s why we’re sharing updates to help organizations evaluate the efficacy of AI systems in security operations and announcing the Llama Defenders Program for select partners. We believe this is an important effort to improve the robustness of software systems as more capable AI models become available."

Although Anthropic are now worried enough about the capabilities of their latest model, Claude Opus 4, that they are “activating the AI Safety Level 3 Deployment and Security Standards”

"We have not yet determined whether Claude Opus 4 capabilities actually require the protections of the ASL-3 Standard. So why did we implement those protections now? We anticipated that we might do this when we launched our last model, Claude Sonnet 3.7. In that case, we determined that the model did not require the protections of the ASL-3 Standard. But we acknowledged the very real possibility that given the pace of progress, near future models might warrant these enhanced measures.2 And indeed, in the lead up to releasing Claude Opus 4, we proactively decided to launch it under the ASL-3 Standard. This approach allowed us to focus on developing, testing, and refining these protections before we needed them.This is a useful and practical guide talking about Chinese Open-Weight models- what are the actual risks of using them above and beyond open source models from elsewhere?

"Therefore, while the geopolitical and regulatory concerns surrounding Chinese technology are valid and must factor into any organization's risk calculus, they should be distinguished from the technical security posture of the models themselves. From a purely technical standpoint, the security challenges posed by models like Qwen or DeepSeek are fundamentally the same as those posed by Llama or Gemma"We continue to see more examples of AI being used for or doing “bad things”

FBI warns of ongoing scam that uses deepfake audio to impersonate government officials

“Since April 2025, malicious actors have impersonated senior US officials to target individuals, many of whom are current or former senior US federal or state government officials and their contacts,” Thursday’s advisory from the bureau’s Internet Crime Complaint Center said. “If you receive a message claiming to be from a senior US official, do not assume it is authentic.”We have the whole Grok fiasco: Grok really wanted people to know that claims of white genocide in South Africa are highly contentious - this was apparently due to an unauthorised change in the system prompt

"xAI has published the system prompts for its AI chatbot Grok after an “unauthorized” change led to a slew of unprompted responses on X about white genocide. The company says it will publish its Grok system prompts on GitHub from now on, which provide some insight into the way xAI has instructed Grok to respond to users."And then the botched GPT-4o release from OpenAI: GPT-4o Is An Absurd Sycophant, resulting in a rapid change of plan

While the general capabilities continue to improve:

AI is getting “creepy good” at geo-guessing

"If you are worried about revealing your exact location—or if you maybe even fib about it at times—there are some good reasons to worry about what is visible in background photos, because Artificial Intelligence (AI) is getting very good at guessing where you are based on the smallest of clues. And that might even include audio clues, if given the right data.Australian radio station secretly used an AI host for six months

"Australian Radio Network’s CADA station, which broadcasts in Sydney and on the iHeartRadio app, created a host called Thy using artificial intelligence software developed by voice cloning firm ElevenLabs. The Workdays with Thy show presented music for four hours a day from Monday to Friday, but did not mention on its website or promotional materials that Thy was not a real person. “If your day is looking a bit bleh, let Thy and CADA be the energy and vibe to get your mood lifted,” the show’s page states."How a new type of AI is helping police skirt facial recognition bans

"The tool, called Track and built by the video analytics company Veritone, is used by 400 customers, including state and local police departments and universities all over the US. It is also expanding federally: US attorneys at the Department of Justice began using Track for criminal investigations last August. Veritone’s broader suite of AI tools, which includes bona fide facial recognition, is also used by the Department of Homeland Security—which houses immigration agencies—and the Department of Defense, according to the company."In Taiwan and China, young people turn to AI chatbots for ‘cheaper, easier’ therapy

"In the pre-dawn hours, Ann Li’s anxieties felt overwhelming. She’d recently been diagnosed with a serious health problem, and she just wanted to talk to someone about it. But she hadn’t told her family, and all her friends were asleep. So instead, she turned to ChatGPT. “It’s easier to talk to AI during those nights,” the 30-year-old Taiwanese woman, tells the Guardian.

But at least the impending “AI is going to take all of our jobs right now” apocalypse seems to be a little premature

Klarna changes its AI tune and again recruits humans for customer service - great perspective from Dimitri Masin on this here

"It’s a drastic change from a year ago, when the firm went all in on AI, laid off workers and paused hiring. The shift highlights the need for the option to speak to a human in customer service — and to use AI as a supplement, not a replacement, for staff, according to Julie Geller, principal research director at Info-Tech Research Group."

Developments in Data Science and AI Research...

As always, lots of new developments on the research front and plenty of arXiv papers to read...

This is pretty amazing from DeepMind- AlphaEvolve - white paper (A coding agent for scientific and algorithmic discovery). More background here and here

“The paper is quite spectacular,” says Mario Krenn, who leads the Artificial Scientist Lab at the Max Planck Institute for the Science of Light in Erlangen, Germany. “I think AlphaEvolve is the first successful demonstration of new discoveries based on general-purpose LLMs.”

This is really interesting- the same embeddings can be learned from different data sets, indicating a “universal geometry of embeddings”

"We introduce the first method for translating text embeddings from one vector space to another without any paired data, encoders, or predefined sets of matches. Our unsupervised approach translates any embedding to and from a universal latent representation (i.e., a universal semantic structure conjectured by the Platonic Representation Hypothesis). Our translations achieve high cosine similarity across model pairs with different architectures, parameter counts, and training datasets."Cool to see low level innovation as well- Muon: a better faster optimiser

"We find that Muon is more effective than AdamW in retaining data efficiency at large batch sizes, far beyond the so-called critical batch size, while remaining computationally efficient, thus enabling more economical training"And this looks intriguing: what do you do when you have no data to train on? Absolute Zero: Reinforced Self-play Reasoning with Zero Data

"The scarcity of high-quality, human-produced examples raises concerns about the long-term scalability of relying on human supervision, a challenge already evident in the domain of language model pretraining. Furthermore, in a hypothetical future where AI surpasses human intelligence, tasks provided by humans may offer limited learning potential for a superintelligent system. To address these concerns, we propose a new RLVR paradigm called Absolute Zero, in which a single model learns to propose tasks that maximize its own learning progress and improves reasoning by solving them, without relying on any external data. Under this paradigm, we introduce the Absolute Zero Reasoner (AZR), a system that self-evolves its training curriculum and reasoning ability by using a code executor to both validate proposed code reasoning tasks and verify answers, serving as an unified source of verifiable reward to guide open-ended yet grounded learning."A promising answer to a tough question- when models get better than humans, how do we over-see them? Scaling Laws For Scalable Oversight

"For each game, we find scaling laws that approximate how domain performance depends on general AI system capability. We then build on our findings in a theoretical study of Nested Scalable Oversight (NSO), a process in which trusted models oversee untrusted stronger models, which then become the trusted models in the next step. We identify conditions under which NSO succeeds and derive numerically (and in some cases analytically) the optimal number of oversight levels to maximize the probability of oversight success. "More insights from Deep Seek on their efficiency gains: Scaling Challenges and Reflections on Hardware for AI Architectures

"This paper presents an in-depth analysis of the DeepSeek-V3/R1 model architecture and its AI infrastructure, highlighting key innovations such as Multi-head Latent Attention (MLA) for enhanced memory efficiency, Mixture of Experts (MoE) architectures for optimized computation-communication trade-offs, FP8 mixed-precision training to unlock the full potential of hardware capabilities, and a Multi-Plane Network Topology to minimize cluster-level network overhead."We see increasing usage of LLMs as judges- using one LLM to grade another one- but how trustworthy are they? Know Thy Judge: On the Robustness Meta-Evaluation of LLM Safety Judges

"In this paper, we highlight two critical challenges that are typically overlooked: (i) evaluations in the wild where factors like prompt sensitivity and distribution shifts can affect performance and (ii) adversarial attacks that target the judge. We highlight the importance of these through a study of commonly used safety judges, showing that small changes such as the style of the model output can lead to jumps of up to 0.24 in the false negative rate on the same dataset, whereas adversarial attacks on the model generation can fool some judges into misclassifying 100% of harmful generations as safe ones."Interesting research from Microsoft- LLM’s may have the ability to use increasingly large context windows (the amount of information provided to them in the question) - but they still get lost in long conversations

This is pretty staggering with pretty concerning implications: Large Language Models Are More Persuasive Than Incentivized Human Persuaders

"We directly compare the persuasion capabilities of a frontier large language model (LLM; Claude Sonnet 3.5) against incentivized human persuaders in an interactive, real-time conversational quiz setting. In this preregistered, large-scale incentivized experiment, participants (quiz takers) completed an online quiz where persuaders (either humans or LLMs) attempted to persuade quiz takers toward correct or incorrect answers. We find that LLM persuaders achieved significantly higher compliance with their directional persuasion attempts than incentivized human persuaders, demonstrating superior persuasive capabilities in both truthful (toward correct answers) and deceptive (toward incorrect answers) contexts. These findings call for urgent ethical and regulatory discussions about how AI persuasion should be governed to maximize its benefits while minimizing its risks,"As we saw in the previous section, you don’t necessarily need facial recognition to track individuals: Person Recognition at Altitude and Range: Fusion of Face, Body Shape and Gait

"FarSight incorporates novel algorithms across four core modules: multi-subject detection and tracking, recognition-aware video restoration, modality-specific biometric feature encoding, and quality-guided multi-modal fusion. These components are designed to work cohesively under degraded image conditions, large pose and scale variations, and cross-domain gaps. Extensive experiments on the BRIAR dataset, one of the most comprehensive benchmarks for long-range, multi-modal biometric recognition, demonstrate the effectiveness of FarSight. Compared to our preliminary system, this system achieves a 34.1% absolute gain in 1:1 verification accuracy (TAR@0.1% FAR), a 17.8% increase in closed-set identification (Rank-20), and a 34.3% reduction in open-set identification errors (FNIR@1% FPIR)."Interestingly, we have not seen the leap in performance in generalised robots that we have seen in generalised language models. Amazon did a study and right now the benefits don’t outweigh the costs

"The Stow robot's speed was similar to that achieved by human workers. "Over the month of March 2025, humans stowed at an average rate of 243 units per hour (UPH) while the robotic systems stowed at 224 UPH," the paper says. "This comparison was careful to compare human stowers operating on the same floor as the robotic workcells, as stow rates vary based on inbound item distributions and the density of items already in the fabric pods."Be careful how you choose your model- more and more evidence that the AI leaderboards are being gamed by the developers of the models: The Leaderboard Illusion

"We find that undisclosed private testing practices benefit a handful of providers who are able to test multiple variants before public release and retract scores if desired. We establish that the ability of these providers to choose the best score leads to biased Arena scores due to selective disclosure of performance results. At an extreme, we identify 27 private LLM variants tested by Meta in the lead-up to the Llama-4 release."This looks like a promising applied use case in healthcare: AMIE gains vision: A research AI agent for multimodal diagnostic dialogue

The innovative team at Sakana have released Continuous Thought Machines

Finally, my old favourite, time-series: Towards Cross-Modality Modeling for Time Series Analytics: A Survey in the LLM Era - still not seeing much improvement from more basic techniques!

Generative AI ... oh my!

Still moving at such a fast pace it feels in need of it's own section, for developments in the wild world of large language models...

OpenAI continues to churn out an impressive array of news and updates

Lots of noise on the corporate side: from restructuring away from non-profit status, to re-negotiating with Microsoft, to snapping up Windsurf for $3b

Speaking of acquisitions- how about $6.5b for a 55 person pre-product company?! The ultimate acqui-hire of Jony Ive!

Still busy releasing products - this month its Codex, their new software agent

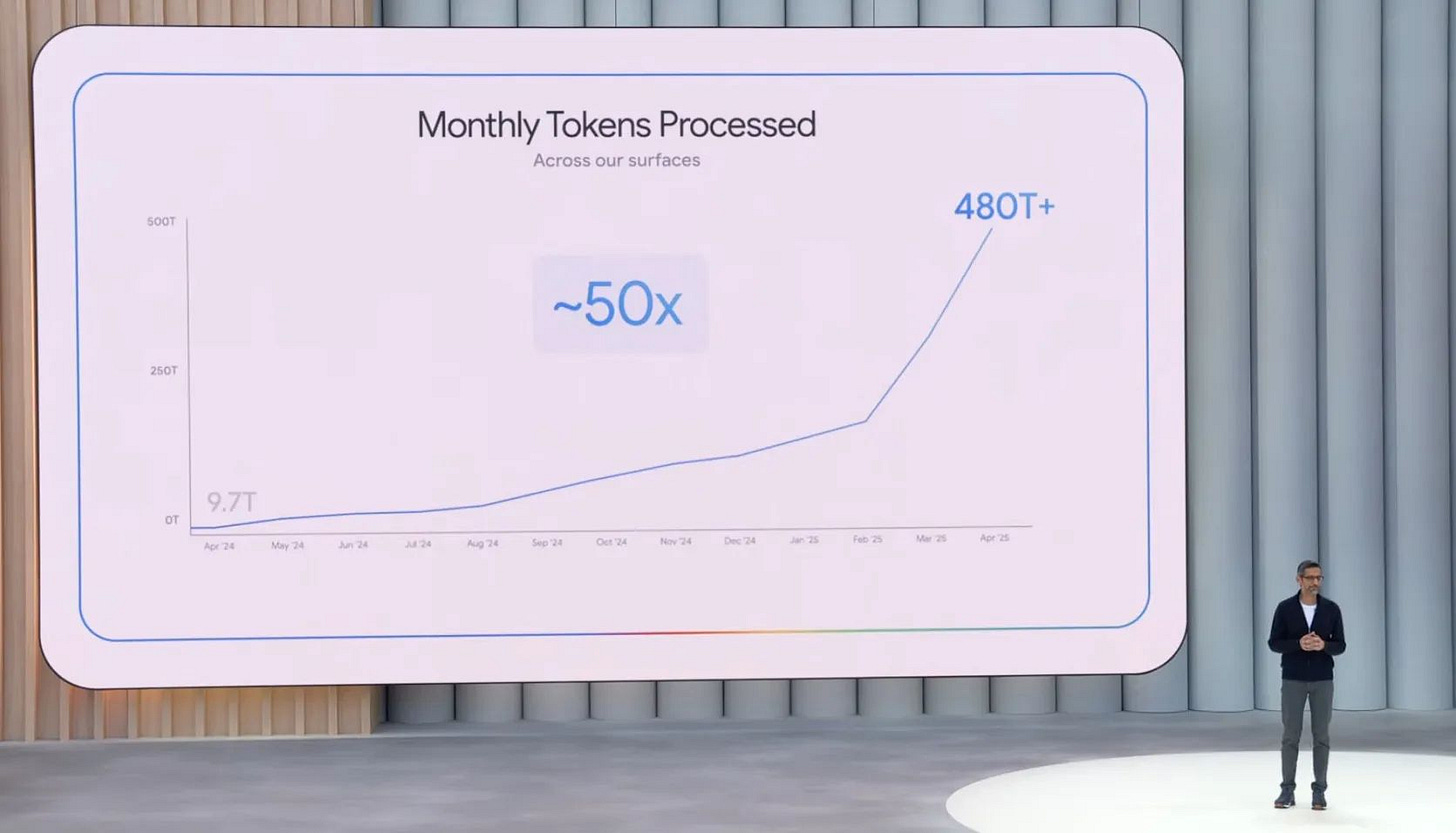

Google seems to be flying

Almost a day doesn’t go by without some sort of new AI feature: from real-time translation in Google Meet, imaging editing in the Gemini app, a standalone NotebookLM app, AI Overviews in YouTube, and Google’s very own coding agent, Jules (good summary of Google I/O 2025 here from Wired)

And some impressive models and capabilities updates as well:

A new open source tiny model for mobile- Gemma 3n with impressive performance as well as a MedGemma, an open model for medical text and image comprehension

A new diffusion powered LLM, Gemini Diffusion, which is a genuinely new approach rather than a “scale-up” of data and compute- useful insight here

And last but very much not least… Google’s pretty amazing new video model Veo3 which generates audio as well as video from a prompt… (examples in the wild)

Microsoft attempts to keep up:

First they carry on attempting to plug Copilot into anything they can think of, now releasing a new Agent Store

Their Phi ‘small-models’ continue to impress though: Announcing new Phi-4, complete with reasoning

Anthropic is definitely keeping up the pace:

First their big new release: Claude Opus 4, which includes their earlier release of Integrations, allowing their models to connect to all sorts of external services, and a new voice mode

Some cool insight from behind the scenes with their Circuits update, which is their approach to improving understanding and interpretability, well worth a read (and great to see they are now open sourcing this)

And their first ever Developer Day

Amazon is definitely not at the forefront, but continues to play to its AWS strengths:

Their most sophisticated model yet, Nova Premier

As for Meta, there seem to be concerns as to their pace of development: Exclusive: Meta shuffles AI team to compete with OpenAI and Google

On the other hand, Mistral, the French ‘upstart’, had a very productive month:

Their very own coding agent, Devstral, along with a new Agents API

A new “medium” version of their leading model

And a continued enterprise focus, with their updated Document AI for OCR

And as always lots going on in the world of open source

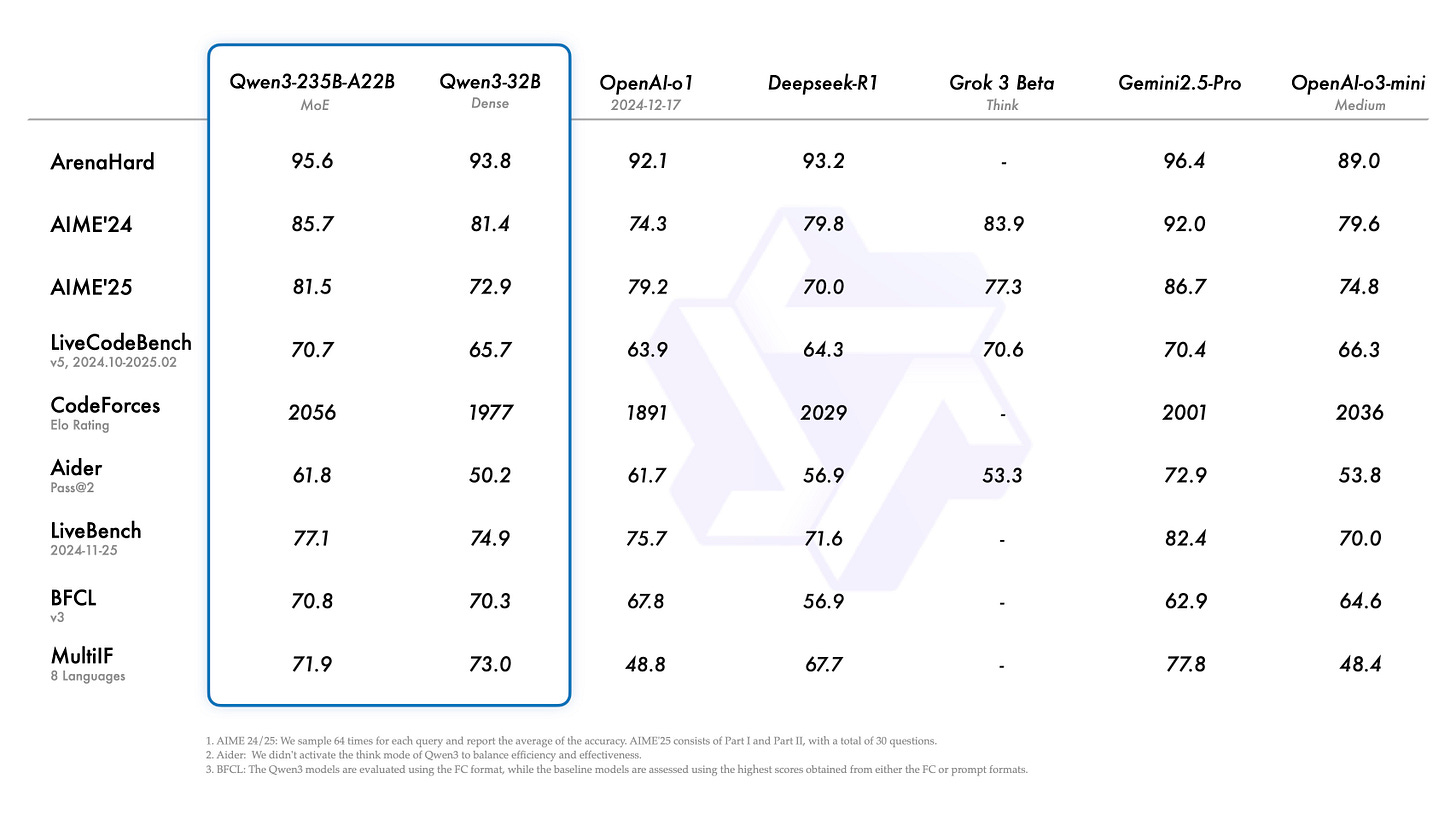

More DeepSeek, this time DeepSeek-Prover-V2, as well as a new version of DeepSeek-R1 that jumps straight to the top of the tables again

"We introduce DeepSeek-Prover-V2, an open-source large language model designed for formal theorem proving in Lean 4, with initialization data collected through a recursive theorem proving pipeline powered by DeepSeek-V3."As well as more Qwen : Qwen3: Think Deeper, Act Faster

Fantastic innovation and performance from the Allen Institute with OLMo 2 1B, their smallest model from the 2 family- properly open source with ‘all code, checkpoints, logs and associated training details on GitHub’

And some amazing new open source capabilities

A fully open source coding assistant, DeepCoder, from Together.ai

An open source Operator-like tool from Hugging Face, Open Computer Agent

ByteDance (TikTok owner) has open sourced DeerFlow

And speaking of open source Deep Research approaches, there’s also WebThinker

We have open source video, complete with weights and inference code: SkyReels V2: Infinite-Length Film Generative Model

And a new open source CLIP model, OpenVision, for vision encoding

Finally an interesting interview with the founder of Oumi Labs on an ‘unconditionally open model’

Real world applications and how to guides

Lots of applications and tips and tricks this month

Some interesting practical examples of using AI capabilities in the wild

Lots of cool “AI for Science” type applications appearing which is exciting

FutureHouse releases AI tools it claims can accelerate science (also here)

"Crow is a general-purpose agent that can search the literature and provide concise, scholarly answers to questions, and is perfect for use via API. Falcon is specialized for deep literature reviews. It can search and synthesize more scientific literature than any other agent we are aware of, and also has access to several specialized scientific databases, like OpenTargets. Owl (formerly HasAnyone) is specialized to answer the question “Has anyone done X before?” Phoenix (experimental) is our deployment of ChemCrow, an agent with access to specialized tools that allow it to help researchers in planning chemistry experiments."Meta open source releases “supporting molecular property prediction, language processing, and neuroscience”

Harnessing Artificial Intelligence for High-Impact Science

AI-designed DNA controls genes in healthy mammalian cells for first time

"A study published in the journal Cell marks the first reported instance of generative AI designing synthetic molecules that can successfully control gene expression in healthy mammalian cells."

Useful insight about how journalists are using AI

"In a collaboration between CJR and the University of Southern California’s AI for Media and Storytelling Initiative, we asked a diverse group of reporters, editors, executives, and others across the news industry: How does your work involve AI, for good or ill? When does AI matter, and how does it make you feel about your craft, identity, or professional future? When do you welcome AI into your work and when do you protect your work from it?"Great example of using satellite data to help farmers

Apparently the UK government is getting into the whole “AI thing” - UK government to launch AI tool to speed up public consultations

While Amazon is expanding its use of AI-Narration in audio-books

I’m sure designers will welcome this one: Publish your designs on the web with Figma Sites

Meanwhile TikTok can now bring your photos to life

And Clova can give you a rough cut of your video in seconds

Finally here, not sure if this is an application or a how-to-guide, but its pretty impressive either way: How I used o3 to find CVE-2025-37899, a remote zeroday vulnerability in the Linux kernel’s SMB implementation

Lots of great tutorials and how-to’s this month

First of all on the GenAI front:

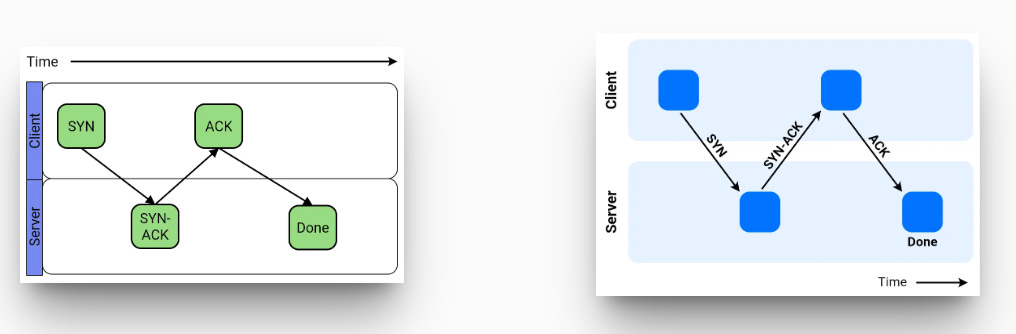

Simple but beautifully clear summary of agent patterns - well worth a read

Excellent analysis from Simon Willison on the newly released Claude 4 system prompt

"If the person seems unhappy or unsatisfied with Claude or Claude’s performance or is rude to Claude, Claude responds normally and then tells them that although it cannot retain or learn from the current conversation, they can press the ‘thumbs down’ button below Claude’s response and provide feedback to Anthropic. If the person asks Claude an innocuous question about its preferences or experiences, Claude responds as if it had been asked a hypothetical and responds accordingly. It does not mention to the user that it is responding hypothetically."Useful dive into how to measure truthfulness in LLMs

Excellent tutorial on setting up an ‘MCP news agent’ from Eugene Yan

Digging into automated prompt optimisation libraries like DSPy

This is a great deep dive into Google’s new AlphaEvolve from Devansh

What’s the difference between an Agent and a Simulator - thoughtful

Well explained- what are embeddings?

Digging deep into an interesting new approach for improving LLM access to facts or trusted documents- Cache Augmented Generation

And a bit more general:

Well, this is more foundational Deep Learning, but I remember watching Hinton’s lecture on Boltzmann Machines so this made me smile

How do you select a fair random sample when you don’t know the size of the set you are sampling from? Reservoir Sampling - nice interactive demo

Another favourite topic- Tackling overfitting in tree-based models

Task estimation: Conquering Hofstadter's Law - love this

"Hofstadter's Law: It always takes longer than you expect, even when you take into account Hofstadter's Law. — Douglas Hofstadter"Good visual explanation of Eigenvectors and Eigenvalues

Regression discontinuities always seems like an elegant approach but non-trivial to apply successfully: useful guide

Finally, what we all need - “Five simple things that will immediately improve your diagrams”

Practical tips

How to drive analytics, ML and AI into production

Pretty cool insight from OpenAI on how they scaled their recent release: Building, launching, and scaling ChatGPT Images

"“It was the first or second night after Images launch, and demand was bigger than we’d expected. The team got together and we decided that to keep the product available and keep the service up and running for all users, we needed to have something asynchronous – and fast! Over a few days and nights, we built an entirely asynchronous Images product. It was a bunch of engineers rolling their sleeves up – and we kicked off a parallel workstream to get this new implementation ready. All the while, a bunch of us worked on keeping the site up and running under the intense load. ... This meant that we traded off latency (time to generate free images) for availability (being able to generate images)”.Insight from Meta, well worth a read- How Meta understands data at scale

If you are building a tool use agents, this is useful - basically advocating for processing tool call results into a streamlined format as part of the tool call rather than dumping the raw results into the prompt

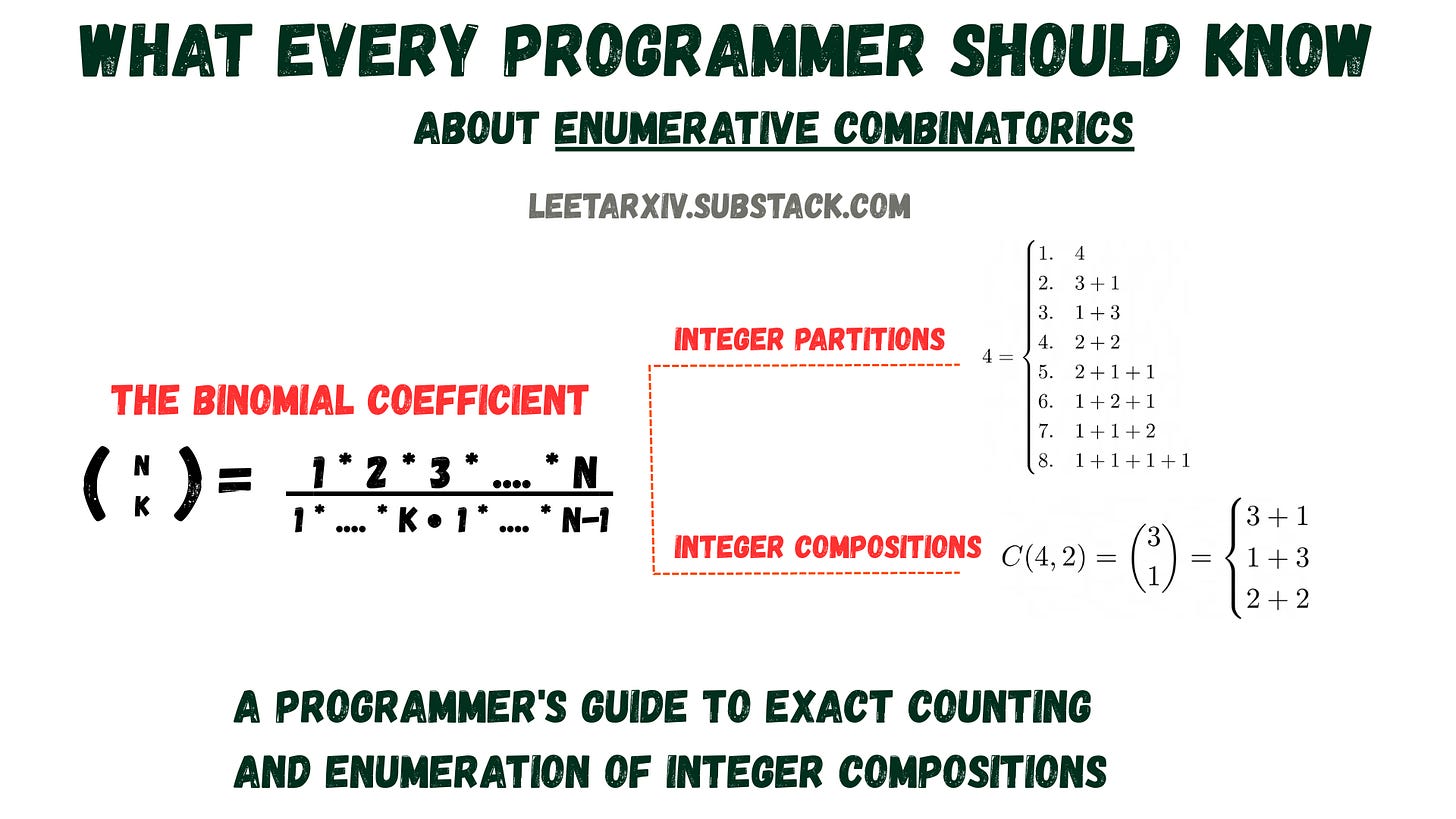

"If the MCP servers are already returning data in a JSON format, it seems much more natural to parse the data, and instead operate on the structured data. Back to our sorting example, rather than asking the LLM to reproduce the outputs directly, we could instead run a sort operation on the data, and return the new array. No hallucinations and this scales to any size of inputs."What Every Programmer Should Know About Enumerative Combinatorics

Getting in the weeds around how things scale- attention is logarithmic, actually

"time complexity is the default model brought up when discussing whether an algorithm is “fast” or “slow”. back in the 80s, when every computer had only one core and no one besides a couple of weirdos knew what a SIMD was, this was largely correct. but the year is now 2025. it is very hard to find computers with a single core. even smartphones have 4-8 cores [source needed]. as a result, time complexity largely fails as a measure of how fast or slow certain algorithms are."This resontates with me- The Lost Decade of Small Data?

"History is full of “what if”s, what if something like DuckDB had existed in 2012? The main ingredients were there, vectorized query processing had already been invented in 2005. Would the now somewhat-silly-looking move to distributed systems for data analysis have ever happened? The dataset size of our benchmark database was awfully close to that 99.9% percentile of input data volume for analytical queries in 2024. And while the retina MacBook Pro was a high-end machine in 2012, by 2014 many other vendors shifted to offering laptops with built-in SSD storage and larger amounts of memory became more widespread. So, yes, we really did lose a full decade."

Bigger picture ideas

Longer thought provoking reads - sit back and pour a drink! ...

Claude 4 – The first universal assistant? - Azeem Azhar

"I simply asked it to “Check my Gmail for to-dos from Azeem in the last week.” Opus 4 successfully scanned a week’s worth of emails, identifying every task Azeem had relayed with remarkable accuracy. It understood context, priority and the implicit requests buried in conversational emails. For example, Opus 4 identified an important testing request from Azeem where I needed to evaluate a new content transformation tool. Opus 4 didn’t just summarize the task as “test new tool.” It picked up that I needed to apply a specific template, wait for new content, compare results with an earlier version, and understand the goal: to improve content quality. It gave me the sense that I could ask it to scan anything in my inbox—and it would find it."Contrasting views on AI usage:

People use AI more than you think - Nathan Lambert

GenAI’s adoption puzzle - Benedict Evans

"But another reaction is say that even with those advantages, if this is a life-changing transformation in the possibilities of computing, why is the DAU/WAU ratio so bad? Something between 5% and 15% of people are finding a use for this every day, but at least twice as many people are familiar with it, and know how it works, and know how to use it… and yet only find it useful once a week. Again, you didn’t have to buy a thousand dollar device, so you’re not committed - but if this is THE THING - why do most people shrug?"

Conversational Interfaces: the Good, the Ugly & the Billion-Dollar Opportunity - Julie Zhud

"The bandwagon becomes a caravan. Our brains become like poor data models that overfit: Conversational chat interfaces for design! Conversational chat interfaces for images and videos! Conversational interfaces for coding! For news! For games! There are 5 major problem areas today with conversational chat interfaces. Let’s examine each closely”More good stuff from Ethan Mollick:

Making AI Work: Leadership, Lab, and Crowd

"The answer is that AI use that boosts individual performance does not naturally translate to improving organizational performance. To get organizational gains requires organizational innovation, rethinking incentives, processes, and even the nature of work. But the muscles for organizational innovation inside companies have atrophied. For decades, companies have outsourced this to consultants or enterprise software vendors who develop generalized approaches that address the issues of many companies at once. That won’t work here, at least for a while. Nobody has special information about how to best use AI at your company, or a playbook for how to integrate it into your organization.""An unstated question that comes from the controversy is how many other persuasive bots are out there that have not yet been revealed? When you combine personalities tuned for humans to like with the innate ability of AI to tailor arguments for particular people, the results, as Sam Altman wrote in an understatement “may lead to some very strange outcomes.” Politics, marketing, sales, and customer service are likely to change."

When Does an AI Image Become Art? A Whitney Museum curator explains the history of art versus digital tech - Gwendolyn Rak

"Christiane Paul curates digital art at the Whitney Museum of American Art, in New York City. Last year, Paul curated an exhibit on British artist Harold Cohen and his computer program AARON, the first AI program for art creation. Unlike today’s statistical models, AARON was created in the 1970s as an expert system, emulating the decision-making of a human artist.”ChatGPT is shockingly bad at poker - Nate Silver

Fun Practical Projects and Learning Opportunities

A few fun practical projects and topics to keep you occupied/distracted:

This looks fun- learning about reinforcement learning by building an agent to play noughts and crosses

Our agent starts with zero knowledge of the game. It starts like a human seeing the game for the first time. The agent gradually evaluates each game situation: A score of 0.5 means “I don’t know yet whether I’m going to win here.” A 1.0 means “This situation will almost certainly lead to victory. By playing many parties, the agent observes what works – and adapts his strategy.Win prizes! OpenAI to Z Challenge

"We challenge you to bring legends to life by finding previously unknown archaeological site(s), using available open-source data. Findings should be reasonably bound by the Amazon biome in Northern South America. Focus on Brazil, with allowed extension into the outskirts of Bolivia, Columbia, Ecuador, Guyana, Peru, Suriname, Venezuela, and French Guiana. You are the digital explorer. Use our new OpenAI o3/o4 mini and GPT‑4.1 models to dig through open data(opens in a new window)—high-resolution satellite imagery, published lidar tiles, colonial diaries, indigenous oral maps, past documentaries, archaeological survey papers."Or if that doesn’t work: Physicists turn lead into gold — for a fraction of a second

What Are the Greatest Karaoke Songs of All Time? A Statistical Analysis

Updates from Members and Contributors

Kevin O’Brien highlights the upcoming PyData London 2025 conference

“PyData London has always been a premier gathering for data science professionals, engineers, researchers, and enthusiasts who want to explore the latest developments in machine learning, AI, and open-source analytics. This year’s schedule is packed with insightful talks, hands-on tutorials, and exciting networking opportunities designed to inspire, educate, and connect.

📅 Conference Dates:

🔹 Tutorials Day: Friday, 6th June 2025

🔹 Talks & Workshops: Saturday, 7th June – Sunday, 8th June 2025

🚀 Website: PyData London | 2025”

Jobs and Internships!

The Job market is a bit quiet - let us know if you have any openings you'd like to advertise

Exciting new Head of Data Science opportunity at biomodal to lead the Computational Biology / Data Science efforts. Application and details here

“It's an exciting time for us, with the first peer-reviewed publication using our 6-base sequencing technology appearing in Nature Structural & Molecular Biology in April, with more to follow. We are just getting started in understanding the translational utility of 6-base genomics and there is plenty of interesting, innovative analysis method development work required to get us there. The role can be based in Cambridge (Chesterford Research Park) or London (Vauxhall)”

Interesting new role - at Prax Value (a dynamic European startup at the forefront of developing solutions to identify and track 'enterprise intelligence'): Applied Mathematics Engineer

Data Internships - a curated list

Again, hope you found this useful. Please do send on to your friends- we are looking to build a strong community of data science practitioners- and sign up for future updates here

- Piers

The views expressed are our own and do not necessarily represent those of the RSS