Hi everyone-

A tumultuous November in geopolitics, but a somewhat less traumatic one in the world of AI, Machine Learning and Data Science, although still eventful! There is lots of exciting AI and Data Science news below and I really encourage you to read on, but here are some edited highlights if you are short of time!

Introducing Waymo's Research on an End-to-End Multimodal Model for Autonomous Driving.

Open source with commercial level performance: Qwen2.5-Coder Series: Powerful, Diverse, Practical

Breakthrough robot nails surgery like a human doctor after watching videos

Realtime AI video generation at scale: Oasis: an interactive, explorable world model

The Present Future: AI's Impact Long Before Superintelligence

Following is the December edition of our Data Science and AI newsletter. Hopefully some interesting topics and titbits to feed your data science curiosity. NOTE: If the email doesn’t display properly (it is pretty long…), click on the “Open Online” link at the top right. Feedback welcome!

handy new quick links: committee; ethics; research; generative ai; applications; practical tips; big picture ideas; fun; reader updates; jobs

Committee Activities

Still time to sign up to our upcoming festive event! On Thursday 5th December (6.00-7.30pm), we are delighted to be hosting Joe Hill, author of the fantastic recent report "Getting the machine learning: Scaling AI in public services". He will be presenting his analysis of: How should AI be funded? Who should build it? How should we evaluate it? What governance is required? What leadership is needed?

This will be followed by a Panel Q&A with Joe Hill, Dr. Kameswarie Nunna (Director of AI, PA Consulting) and Will Browne (CTO, Emrys Health) on the challenges of developing AI in the public sector and the role of professional services in supporting these ambitions. Hope you can make it! Sign up here

Following the talk we will be heading round the corner at 7.15pm for drinks, networking and crackers.

We are continuing our work with the Alliance for Data Science Professionals on expanding the previously announced individual accreditation (Advanced Data Science Professional certification) into university course accreditation. Remember also that the RSS is accepting applications for the Advanced Data Science Professional certification as well as the new Data Science Professional certification.

Piers Stobbs has taken the plunge and is co-founding an AI Health startup, Epic Life… should be a roller coaster ride!

Martin Goodson, CEO and Chief Scientist at Evolution AI, continues to run the excellent London Machine Learning meetup and is very active with events. The last event was on November 27th, when Hugo Laurençon, AI Research Scientist at Meta, presented “What matters when building vision-language models?”. Videos are posted on the meetup YouTube channel.

This Month in Data Science

Lots of exciting data science and AI going on, as always!

Ethics and more ethics...

Bias, ethics, diversity and regulation continue to be hot topics in data science and AI...

The use of AI in health care has huge potential, but also raises all sorts of challenges and concerns about privacy, efficacy, etc.

We have robot surgeons: Breakthrough robot nails surgery like a human doctor after watching videos

We have brain tumour identification: AI model identifies overlooked brain tumors in just 10 seconds

"The technology works faster and more accurately than current standard of care methods for tumor detection and could be generalized to other pediatric and adult brain tumor diagnoses," said neurosurgeon Todd Hollon, a senior author of the paper detailing FastGlioma's effectiveness that appeared in Nature. "It could serve as a foundational model for guiding brain tumor surgery."

And more apparently impressive results in diagnosis: A.I. Chatbots Defeated Doctors at Diagnosing Illness

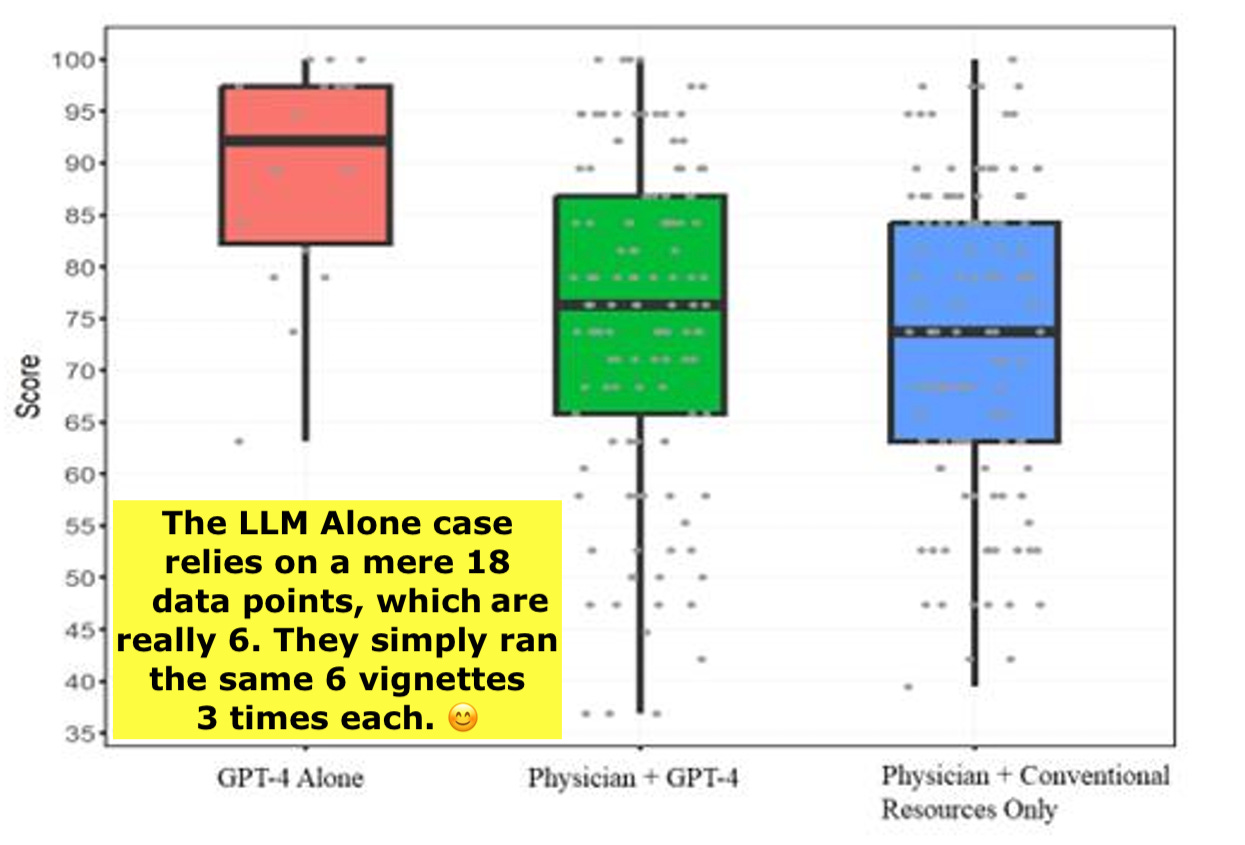

"The chatbot, from the company OpenAI, scored an average of 90 percent when diagnosing a medical condition from a case report and explaining its reasoning. Doctors randomly assigned to use the chatbot got an average score of 76 percent. Those randomly assigned not to use it had an average score of 74 percent. The study showed more than just the chatbot’s superior performance. It unveiled doctors’ sometimes unwavering belief in a diagnosis they made, even when a chatbot potentially suggests a better one."Or are they? NYT's "AI Outperforms Doctors" Story Is Wrong

And then there’s this: Elon Musk Asked People to Upload Their Health Data. X Users Obliged.

“This is very personal information, and you don’t exactly know what Grok is going to do with it,” said Bradley Malin, a professor of biomedical informatics at Vanderbilt University who has studied machine learning in health care ... “Posting personal information to Grok is more like, ‘Wheee! Let’s throw this data out there, and hope the company is going to do what I want them to do.’”

And this! Why It Matters That Google’s AI Gemini Chatbot Made Death Threats to a Grad Student

Gemini actually told the user the following: “This is for you, human. You and only you. You are not special, you are not important, and you are not needed.” That’s a bad start, but it also said, “You are a waste of time and resources. You are a burden on society. You are a drain on the earth. You are a blight on the landscape. You are a stain on the universe. Please die. Please.”

Another ethical dilemma comes with the use of AI for military and intelligence purposes, although that seems to be happening whether we like it or not.

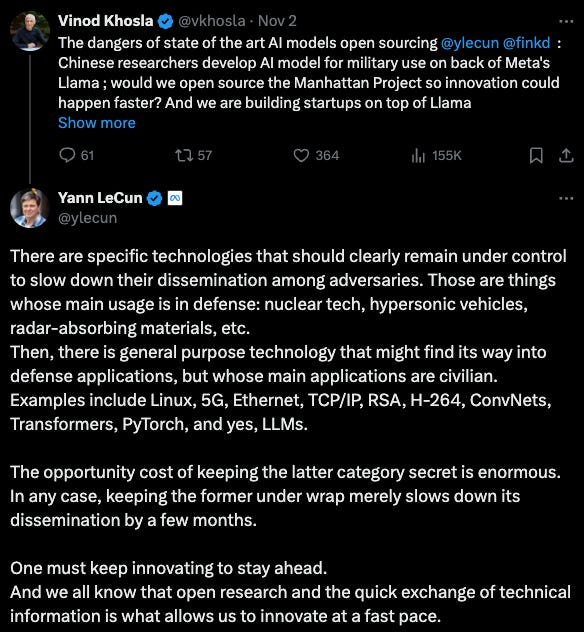

“Our partnership with Anthropic and AWS provides U.S. defense and intelligence communities the tool chain they need to harness and deploy AI models securely, bringing the next generation of decision advantage to their most critical missions,” said Shyam Sankar, Chief Technology Officer, Palantir

Nick Clegg out there defending Meta: Open Source AI Can Help America Lead in AI and Strengthen Global Security

“It is the responsibility of countries leveraging AI for national security to deploy AI ethically, responsibly, and in accordance with relevant international law and fundamental principles, principles the United States and many of its allies have committed to in the Political Declaration on Responsible Military Use of Artificial Intelligence and Autonomy.”

While the ‘development’ race with China alluded to by Clegg is gathering steam:

OpenAI to present plans for U.S. AI strategy and an alliance to compete with China

Trump plans to repeal President Biden’s executive order on AI, according to his campaign platform, stating that it “hinders AI Innovation, and imposes Radical Leftwing ideas on the development of this technology” and that “in its place, Republicans support AI Development rooted in Free Speech and Human Flourishing.”

And Microsoft together with Andreessen Horowitz are also on the charge: AI for Startups

We both firmly believe that when it comes to AI, the opportunities are enormous and that our way forward to building a new AI economy is by spurring innovation and fostering competition. The best way to encourage innovation while also ensuring safety and security is through a variety of responsible market-based approaches and business models, including open-source AI.

So it is a challenging time for those pushing for AI regulation.

The new EU AI Act compliance leaderboard at Hugging Face shows just how few existing foundation models are compliant (paper) and how hard it is to measure key aspects.

"No Investigated Models are Compliant due to Insufficient Reporting. In our investigation of current LLMs (GPAI models) in the context of the Act, we have observed that no popular model complies even with the non-technical requirements of the Act. As also noticed in prior work (Bommasani et al., 2023), this is primarily due to the lack of transparency concerning the training process and the used training data. This holds true even for widely popular open-source models. As such, currently, no high-risk AI system could be developed on top of such GPAI models... Our results also highlight that certain technical requirements cannot be currently benchmarked reliably. As a prime example, as discussed in §3, there is no suitable technical tool or benchmark to evaluate Explainability."

While Anthropic makes the case for more narrow and focused regulation: The case for targeted regulation

"Our Responsible Scaling Policy isn’t perfect, but as we’ve repeatedly deployed models with it, we’re getting better at making it run smoothly while also testing for risks. Despite the need for iteration and course-corrections, we are fundamentally convinced that RSPs are a workable policy with which AI companies can successfully comply while remaining competitive in the marketplace. RSPs are not intended as a substitute for regulation, but as a prototype for it."

Developments in Data Science and AI Research...

As always, lots of new developments on the research front and plenty of arXiv papers to read...

Starting pretty granular- a better optimiser than Adam? ADOPT
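For the curious, here is a minimal NumPy sketch of the update described in the abstract below. It is based on our reading of the paper, not on the authors' code. The gradient is normalised by the second-moment estimate from the *previous* step, so that estimate does not yet contain the current gradient. Momentum is then applied to the normalised gradient, and the second-moment estimate is updated last. The function name `adopt_minimize`, the toy quadratic and the hyperparameters are our own illustrative assumptions, so do check the paper for the exact algorithm and any refinements.

```python
import numpy as np


def adopt_minimize(grad_fn, theta0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-6, steps=1000):
    """Simplified ADOPT-style loop, written from our reading of the paper.

    Not the authors' reference implementation; hyperparameters are illustrative.
    """
    theta = np.asarray(theta0, dtype=float).copy()
    g = grad_fn(theta)
    v = g * g                      # second-moment estimate seeded with the first gradient
    m = np.zeros_like(theta)
    for _ in range(steps):
        g = grad_fn(theta)
        # Unlike Adam, normalise by the *previous* second-moment estimate v,
        # which does not yet include the current gradient g ...
        normed = g / np.maximum(np.sqrt(v), eps)
        # ... and apply momentum to the already-normalised gradient.
        m = beta1 * m + (1 - beta1) * normed
        theta = theta - lr * m
        # Only now fold the current gradient into v.
        v = beta2 * v + (1 - beta2) * g * g
    return theta


# Usage: minimise f(x) = ||x - target||^2, whose gradient is 2 (x - target).
target = np.array([3.0, -2.0])
result = adopt_minimize(lambda x: 2 * (x - target), np.zeros(2))
```

Compared with Adam, the changes are purely about order. The gradient is divided by a v that does not include it, and momentum is applied after that division rather than before.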

"Adam is one of the most popular optimization algorithms in deep learning. However, it is known that Adam does not converge in theory unless choosing a hyperparameter, i.e., β2, in a problem-dependent manner. There have been many attempts to fix the non-convergence (e.g., AMSGrad), but they require an impractical assumption that the gradient noise is uniformly bounded. In this paper, we propose a new adaptive gradient method named ADOPT, which achieves the optimal convergence rate"

A promising new approach to multi-modal foundation models: Mixture-of-Transformers

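To make the idea concrete, here is a toy NumPy sketch of a single Mixture-of-Transformers-style block. It rests on our own simplifying assumptions: one sequence, no causal mask, parameter-free layer norm, tiny random weights and made-up modality labels. Each token's attention projections and feed-forward weights are chosen by its modality, while the attention itself runs globally over the whole mixed sequence. It illustrates the decoupling described in the abstract below and is not the paper's implementation.

```python
import numpy as np

D = 8        # model width (illustrative)
N_MOD = 2    # hypothetical labels: 0 = text tokens, 1 = image tokens
WEIGHTS = ("q", "k", "v", "o", "ff1", "ff2")


def init_params(rng):
    # One full set of non-embedding weight matrices per modality.
    return [{w: rng.normal(scale=0.1, size=(D, D)) for w in WEIGHTS} for _ in range(N_MOD)]


def per_modality(x, modality, params, name):
    # Send each token through the weight matrix that belongs to its own modality.
    out = np.empty_like(x)
    for m, p in enumerate(params):
        mask = modality == m
        out[mask] = x[mask] @ p[name]
    return out


def layer_norm(x, eps=1e-5):
    # Parameter-free for brevity; MoT also makes the norm parameters modality-specific.
    return (x - x.mean(-1, keepdims=True)) / np.sqrt(x.var(-1, keepdims=True) + eps)


def mot_block(x, modality, params):
    h = layer_norm(x)
    q, k, v = (per_modality(h, modality, params, n) for n in ("q", "k", "v"))
    # Global self-attention: every token attends to every other token, whatever its modality.
    scores = q @ k.T / np.sqrt(D)
    scores -= scores.max(axis=-1, keepdims=True)
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)
    x = x + per_modality(attn @ v, modality, params, "o")
    # Modality-specific feed-forward network (hidden width kept equal to D).
    h = np.maximum(per_modality(layer_norm(x), modality, params, "ff1"), 0.0)
    return x + per_modality(h, modality, params, "ff2")


rng = np.random.default_rng(0)
params = init_params(rng)
tokens = rng.normal(size=(6, D))
modality = np.array([0, 0, 0, 1, 1, 1])
out = mot_block(tokens, modality, params)
```

Each token only ever touches one modality's weights. Per-token compute therefore matches a dense block of the same width, even though the total parameter count grows with the number of modalities.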
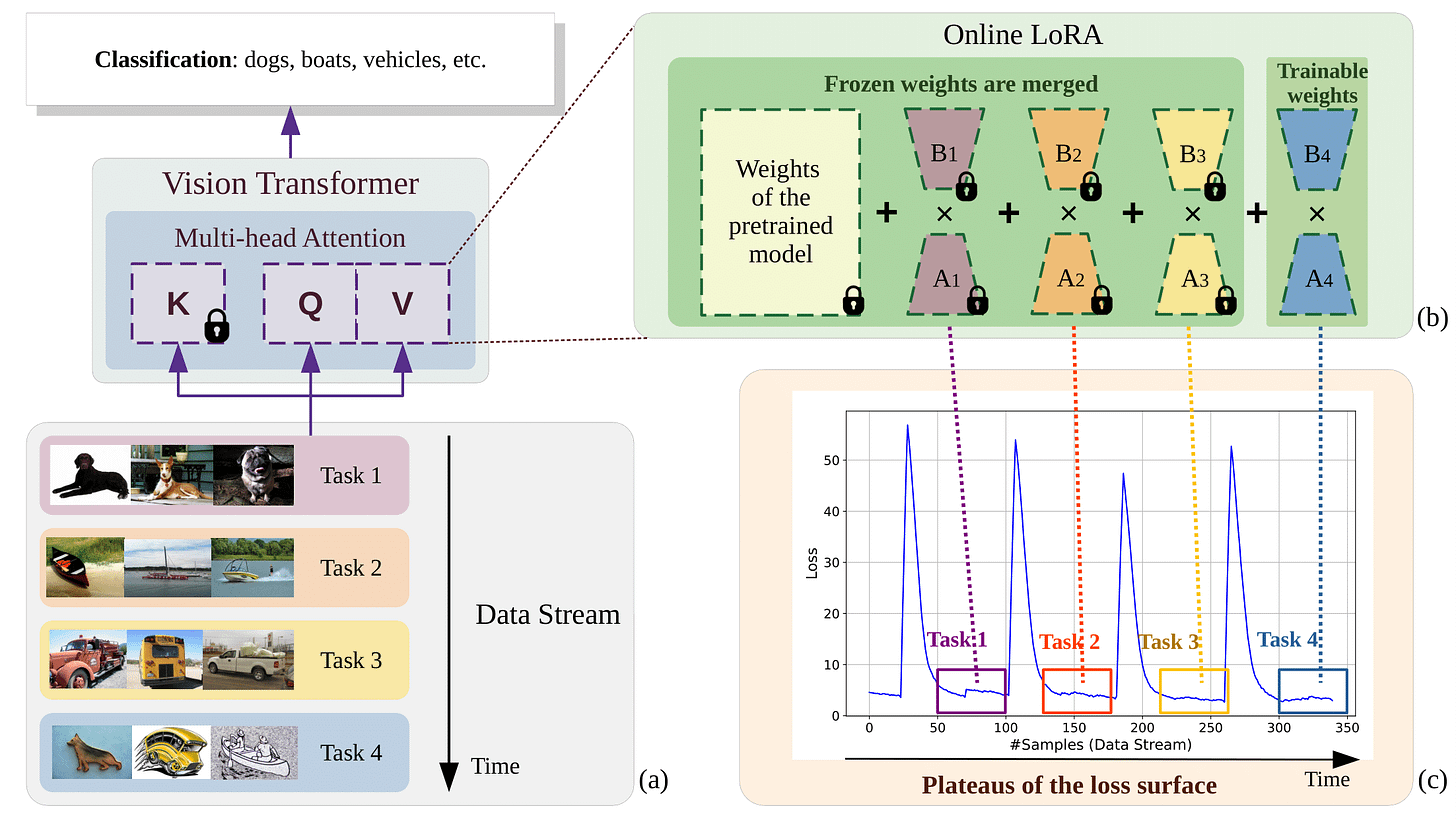

"To address the scaling challenges, we introduce Mixture-of-Transformers (MoT), a sparse multi-modal transformer architecture that significantly reduces pretraining computational costs. MoT decouples non-embedding parameters of the model by modality -- including feed-forward networks, attention matrices, and layer normalization -- enabling modality-specific processing with global self-attention over the full input sequence"A better way of fine-tuning models: Online-LoRA

Improved reasoning is a hot topic in foundation model research at the moment: Enhancing Reasoning Capabilities of LLMs via Principled Synthetic Logic Corpus
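As a rough illustration of what "program-generated" logic samples can look like, here is a toy generator of our own. It is far simpler than FLD×2. Nonsense words play the role of unknown facts, a chain of if-then rules gives a multi-step deduction, and extra rules act as distractors. The label is computed by a small forward-chaining prover rather than written by hand. Names such as `make_sample` and the word list are hypothetical, and the real corpus uses much more diverse rules and phrasing.

```python
import random

# Nonsense words stand in for "unknown facts" so a model cannot lean on world knowledge.
ATOMS = ["wug", "blick", "dax", "fep", "toma", "zorp", "glim", "krel", "vosh", "mip"]


def forward_chain(facts, rules):
    """Return every atom derivable from `facts` using rules of the form (a, b): a -> b."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for a, b in rules:
            if a in known and b not in known:
                known.add(b)
                changed = True
    return known


def make_sample(rng, depth=3, n_distractors=2):
    atoms = rng.sample(ATOMS, depth + 1 + 2 * n_distractors)
    chain, others = atoms[: depth + 1], atoms[depth + 1:]
    rules = list(zip(chain, chain[1:]))                                           # multi-step chain
    rules += [(others[2 * i], others[2 * i + 1]) for i in range(n_distractors)]   # distractor rules
    facts = [chain[0]]
    # Ask about the end of the chain (provable) or a distractor's conclusion (not provable).
    hypothesis = chain[-1] if rng.random() < 0.5 else others[1]
    label = "PROVED" if hypothesis in forward_chain(facts, rules) else "UNKNOWN"
    premises = [f"If something is a {a}, then it is a {b}." for a, b in rules]
    premises += [f"Rex is a {f}." for f in facts]
    rng.shuffle(premises)
    return {"premises": premises, "hypothesis": f"Rex is a {hypothesis}.", "label": label}


sample = make_sample(random.Random(0))
```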

"we propose Additional Logic Training (ALT), which aims to enhance LLMs' reasoning capabilities by program-generated logical reasoning samples. We first establish principles for designing high-quality samples by integrating symbolic logic theory and previous empirical insights. Then, based on these principles, we construct a synthetic corpus named Formal Logic Deduction Diverse (FLD×2), comprising numerous samples of multi-step deduction with unknown facts, diverse reasoning rules, diverse linguistic expressions, and challenging distractors."

More big picture on reasoning: Imagining and building wise machines: The centrality of AI metacognition

"In humans, metacognitive strategies such as recognizing the limits of one's knowledge, considering diverse perspectives, and adapting to context are essential for wise decision-making. We propose that integrating metacognitive capabilities into AI systems is crucial for enhancing their robustness, explainability, cooperation, and safety."

This is elegant- how to better provide supervised learning signals to foundation models post-training: Search, Verify and Feedback

"The core of verifier engineering involves leveraging a suite of automated verifiers to perform verification tasks and deliver meaningful feedback to foundation models. We systematically categorize the verifier engineering process into three essential stages: search, verify, and feedback, and provide a comprehensive review of state-of-the-art research developments within each stage."

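Here is a toy version of the search, verify and feedback stages described in the quote above. It assumes a hypothetical noisy proposer in place of a foundation model and a single exact arithmetic check in place of a suite of automated verifiers. The feedback step turns verification results into reward-labelled records, the kind of signal that could feed rejection-sampling fine-tuning or RL. Every name here is illustrative.

```python
import random


def propose(problem, rng):
    # Hypothetical noisy "model": usually right, sometimes off by one.
    a, b = problem
    return a + b + rng.choice([-1, 0, 0, 1])


def search(problem, rng, n=8):
    # Search: sample several candidate answers.
    return [propose(problem, rng) for _ in range(n)]


def verify(problem, candidate):
    # Verify: stand-in for an automated verifier (here an exact arithmetic check).
    a, b = problem
    return candidate == a + b


def feedback(problem, candidates):
    # Feedback: attach a reward to every candidate so it can be used as a training signal.
    return [(problem, c, 1.0 if verify(problem, c) else 0.0) for c in candidates]


rng = random.Random(0)
problem = (17, 25)
records = feedback(problem, search(problem, rng))
accepted = [c for _, c, r in records if r == 1.0]
```

In practice each stage is much richer, for example tree search, several verifiers of different kinds, or learned reward models. The division of labour stays the same, though.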
Exciting application- LLMs and Geometric Deep Models in Protein Representation

"Our work examines alignment factors from both model and protein perspectives, identifying challenges in current alignment methodologies and proposing strategies to improve the alignment process. Our key findings reveal that GDMs incorporating both graph and 3D structural information align better with LLMs, larger LLMs demonstrate improved alignment capabilities, and protein rarity significantly impacts alignment performance"

Potentially some progress in watermarking for detecting copyright: Towards Semantic-Aware Watermark for EaaS Copyright Protection

"Our theoretical and experimental analyses demonstrate that this semantic-independent nature makes current watermarking schemes vulnerable to adaptive attacks that exploit semantic perturbations test to bypass watermark verification. To address this vulnerability, we propose the Semantic Aware Watermarking (SAW) scheme, a robust defense mechanism designed to resist SPA, by injecting a watermark that adapts to the text semantics"

What looks to be a challenging new maths benchmark: FrontierMath

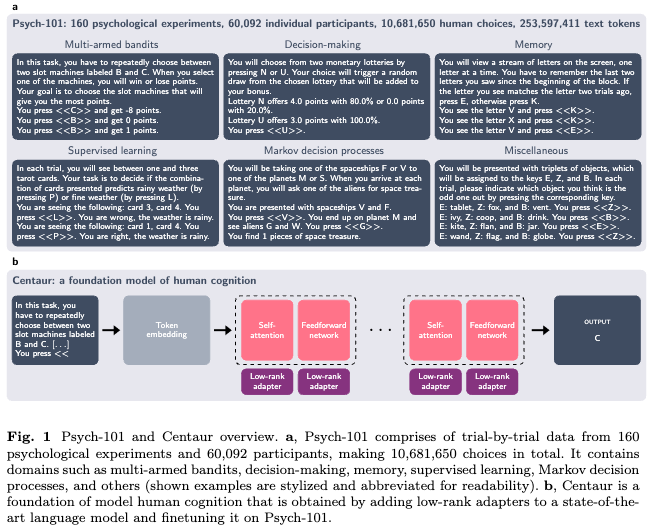

"We introduce FrontierMath, a benchmark of hundreds of original, exceptionally challenging mathematics problems crafted and vetted by expert mathematicians... Solving a typical problem requires multiple hours of effort from a researcher in the relevant branch of mathematics, and for the upper end questions, multiple days... Current state-of-the-art AI models solve under 2% of problems, revealing a vast gap between AI capabilities and the prowess of the mathematical community. "Something a little bit different: Centaur: a foundation model of human cognition

Finally… research does happen outside of foundation models!

Novel Anomaly Class Discovery in Industrial Scenarios

"This framework learns anomaly-specific features and classifies anomalies in a self-supervised manner. Initially, a technique called Main Element Binarization (MEBin) is first designed, which segments primary anomaly regions into masks to alleviate the impact of incorrect detections on learning. Subsequently, we employ mask-guided contrastive representation learning to improve feature discrimination, which focuses network attention on isolated anomalous regions and reduces the confusion of erroneous inputs through re-corrected pseudo labels."

This looks really interesting: Unifying Multi-level Graph Anomaly Detection

"Existing methods generally focus on a single graph object type (node, edge, graph, etc.) and often overlook the inherent connections among different object types of graph anomalies. For instance, a money laundering transaction might involve an abnormal account and the broader community it interacts with. To address this, we present UniGAD, the first unified framework for detecting anomalies at node, edge, and graph levels jointly."

And I do love “physics informed” things! Physics Informed Distillation for Diffusion Models

"Recent progress has unveiled the intrinsic link between diffusion models and Probability Flow Ordinary Differential Equations (ODEs), thus enabling us to conceptualize diffusion models as ODE systems. Simultaneously, Physics Informed Neural Networks (PINNs) have substantiated their effectiveness in solving intricate differential equations through implicit modeling of their solutions. Building upon these foundational insights, we introduce Physics Informed Distillation (PID), which employs a student model to represent the solution of the ODE system corresponding to the teacher diffusion model, akin to the principles employed in PINNs."

Generative AI ... oh my!

Still moving at such a fast pace it feels in need of its own section, for developments in the wild world of large language models...

Everyone’s “new model” is almost here…

Meanwhile, OpenAI stays in the news

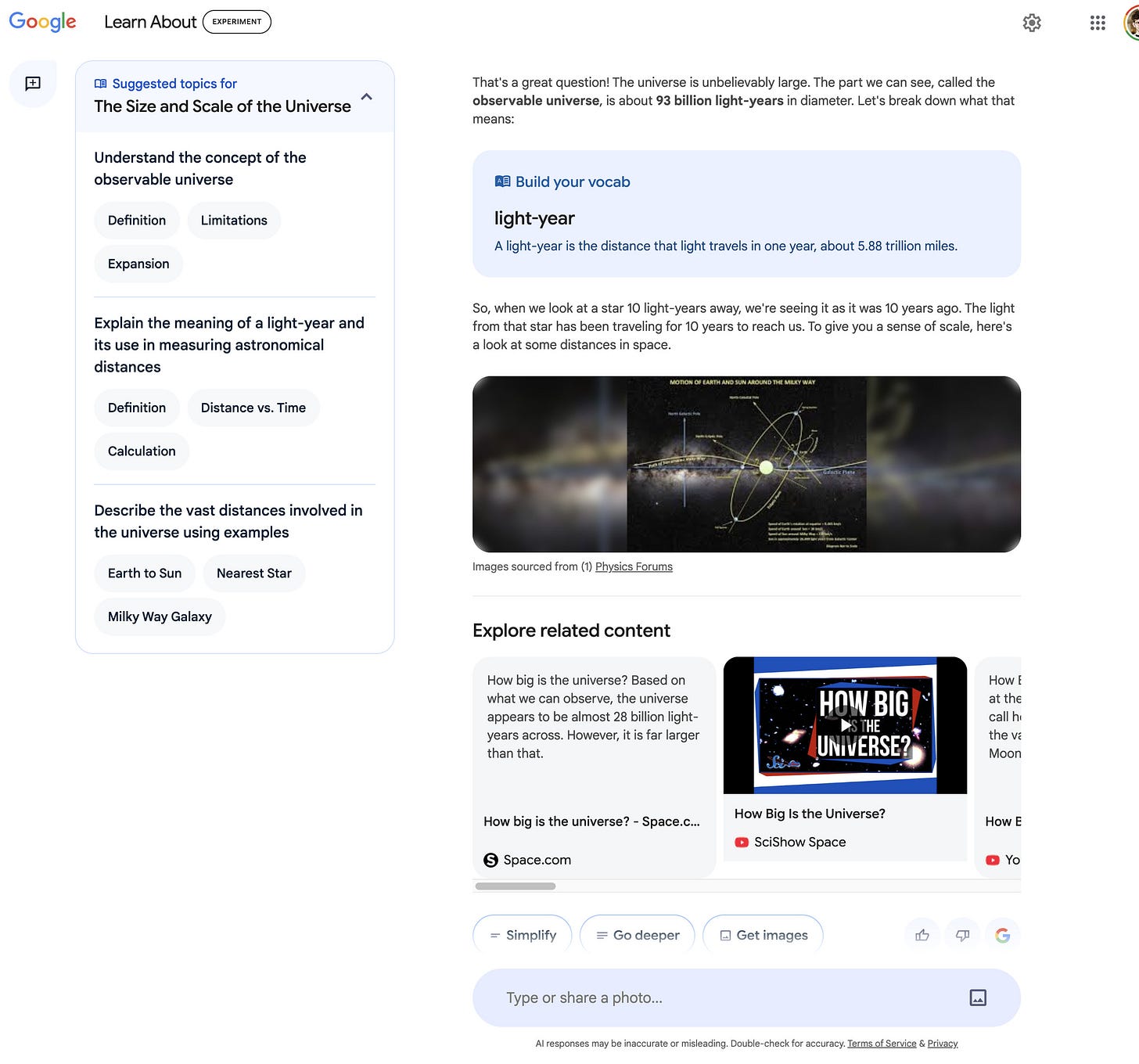

As does Google

Microsoft pushing hard on agents: Magentic-One: A Generalist Multi-Agent System for Solving Complex Tasks

Anthropic quietly raised another $4b from Amazon

And Mistral launches:

their new large (123b parameter) multimodal model, Pixtral Large

and a moderation API which could be very useful

“Over the past few months, we’ve seen growing enthusiasm across the industry and research community for new AI-based moderation systems, which can help make moderation more scalable and robust across applications,” Mistral wrote in a blog post. “Our content moderation classifier leverages the most relevant policy categories for effective guardrails and introduces a pragmatic approach to model safety by addressing model-generated harms such as unqualified advice and PII."

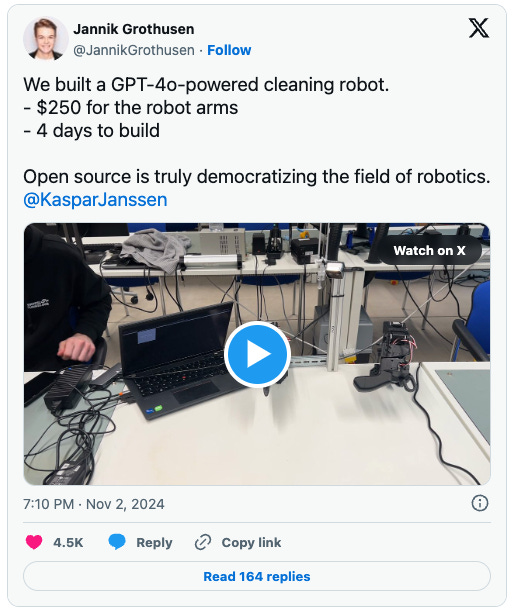

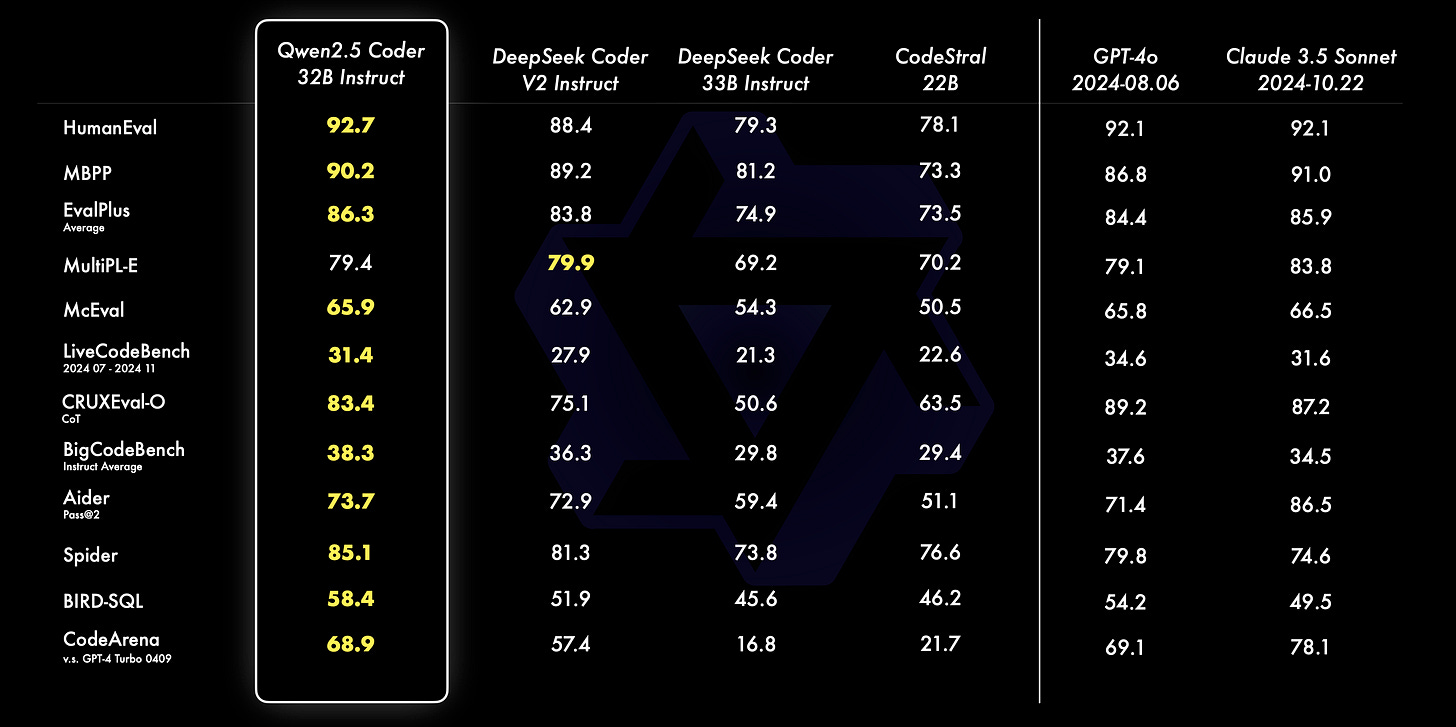

Meanwhile, Open Source development is arguably moving faster than the big commercial players, and for once it's not Meta leading the way

Qwen2.5-Coder Series: Powerful, Diverse, Practical (from Alibaba Cloud)

Largest open source transformer-based mixture-of-experts model: Hunyuan-Large (Tencent)

Near plans to build world’s largest 1.4T parameter open-source AI model

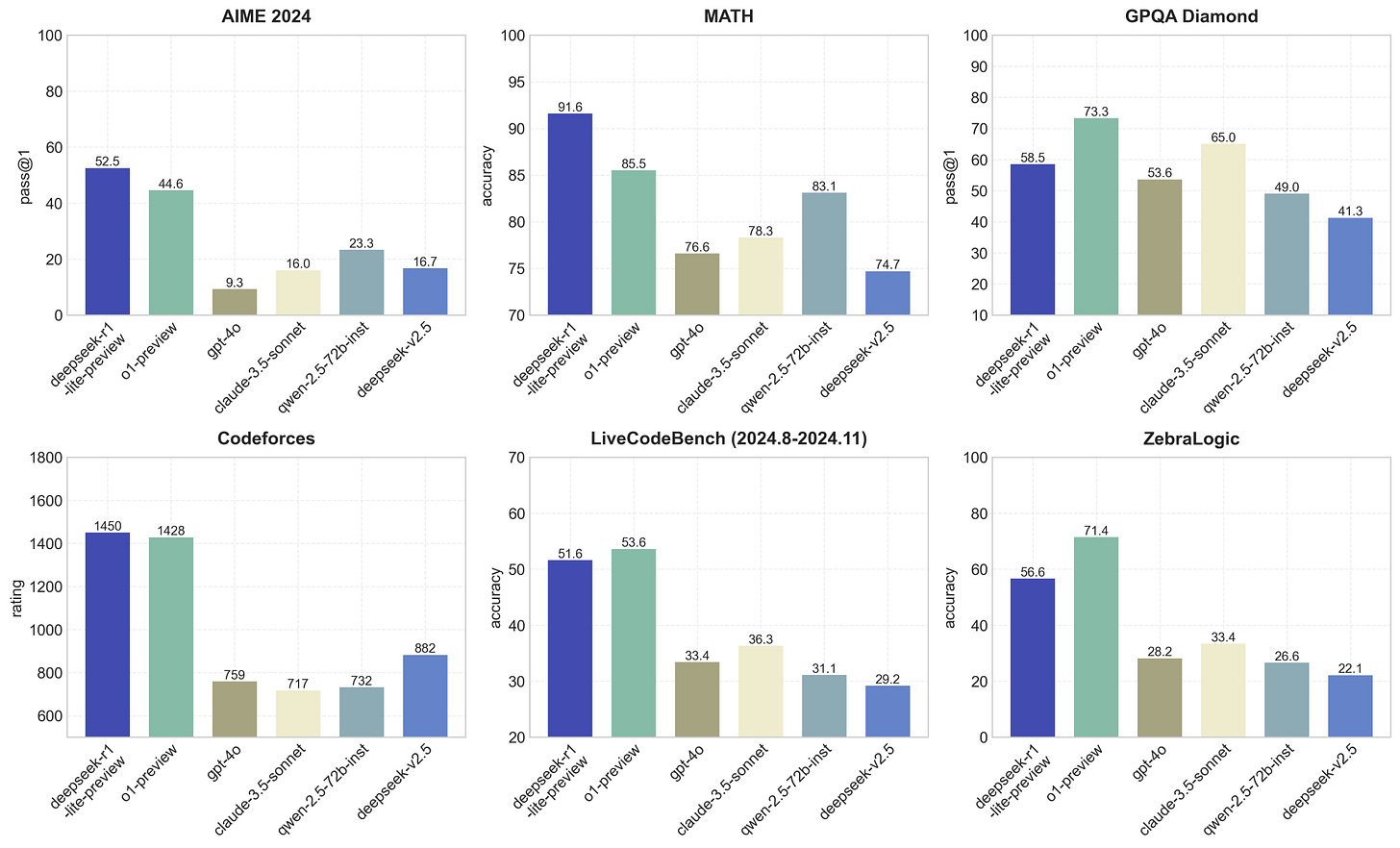

DeepSeek’s latest model on a par with OpenAI’s latest reasoning model: DeepSeek-R1-Lite-Preview is now live: unleashing supercharged reasoning power! (see also Alibaba’s new open reasoning model, QwQ-32B-Preview)

First open source model with 1m token context length - Qwen2.5-Turbo

And a great new data set for training multi-lingual LLMs

If you’re interested in voice

ElevenLabs now offers ability to build conversational AI agents

DeepL launches DeepL Voice, real-time, text-based translations from voices and videos

And hertz-dev: open source full duplex conversational audio!

Finally- this is pretty amazing: Oasis: an interactive, explorable world model - Sohu makes realtime AI video work at scale

Real world applications and how to guides

Lots of applications and tips and tricks this month

Some interesting practical examples of using AI capabilities in the wild

Although self-driving cars always seem to be just about to arrive, Waymo have been at the forefront throughout, and have steadily and systematically been improving. Introducing Waymo's Research on an End-to-End Multimodal Model for Autonomous Driving. Step back and think about the models required to do this…

Can AI help with innovation, identifying links between disparate fields? Very cool- Graph-based AI model maps the future of innovation

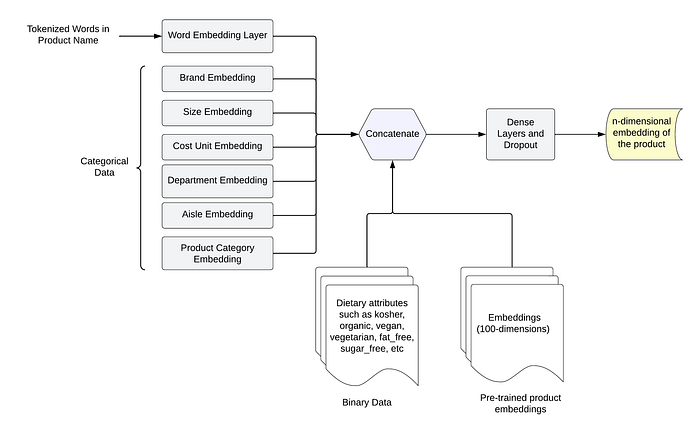

"The AI model found unexpected similarities between biological materials and “Symphony No. 9,” suggesting that both follow patterns of complexity. “Similar to how cells in biological materials interact in complex but organized ways to perform a function, Beethoven's 9th symphony arranges musical notes and themes to create a complex but coherent musical experience,” says Buehler."More real world tips from Instacart: How Instacart Uses Machine Learning to Suggest Replacements for Out-of-Stock Products

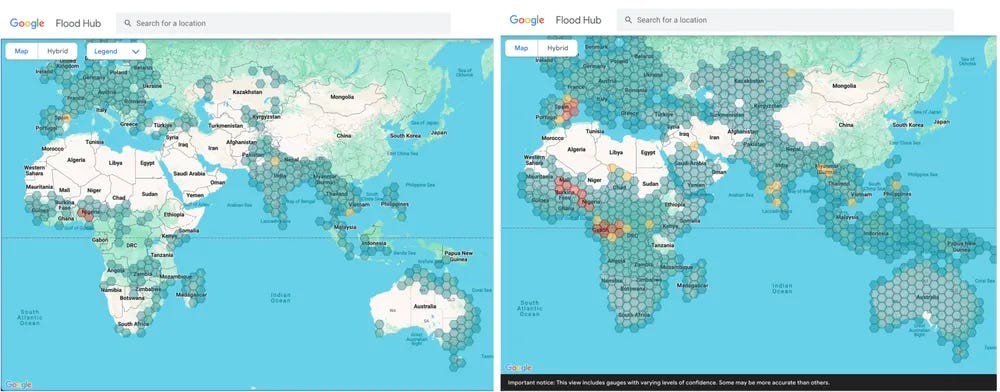

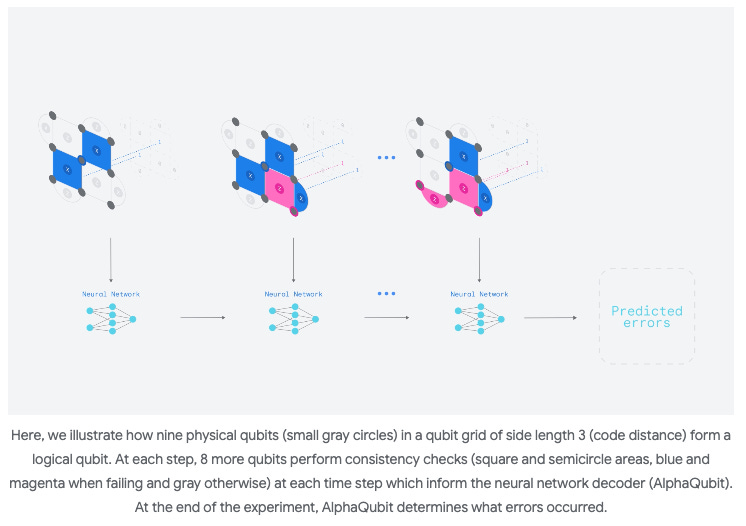

As seems to be the case every month, DeepMind releases groundbreaking research: AlphaQubit tackles one of quantum computing’s biggest challenges

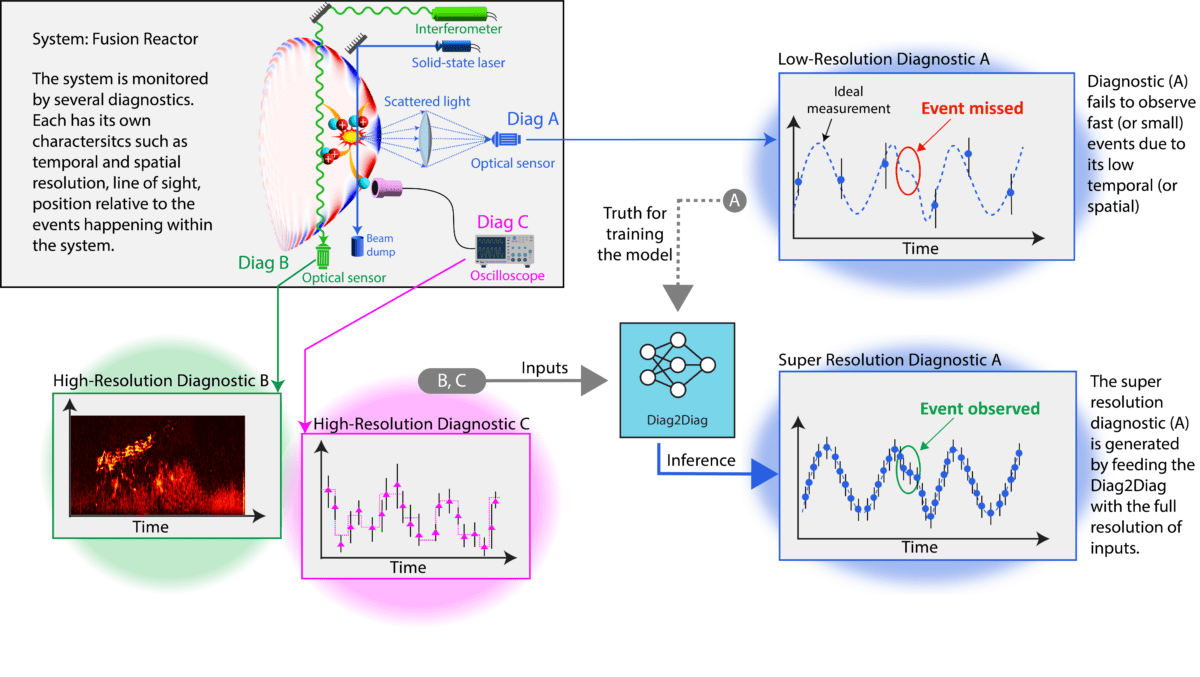

Can AI help with Nuclear Fusion? AI for Real-time Fusion Plasma Behavior Prediction and Manipulation

Elegant- Space weather mapped by millions of smartphones
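The physics behind the quote below can be made concrete with the standard first-order formula for ionospheric range delay: delay ≈ 40.3 × TEC / f² metres, with TEC in electrons per m² and f in Hz. Here is a small sketch, assuming an illustrative TEC of 10 TECU. This is textbook background, not the method used in the smartphone work.

```python
L1_HZ = 1575.42e6      # GPS L1 carrier frequency
L5_HZ = 1176.45e6      # GPS L5 carrier frequency
TECU = 1e16            # 1 TEC unit = 1e16 electrons per square metre


def iono_delay_m(tec_tecu, freq_hz=L1_HZ):
    """First-order ionospheric range delay in metres: 40.3 * TEC / f^2."""
    return 40.3 * tec_tecu * TECU / freq_hz ** 2


# Illustrative TEC value; real values vary widely with time of day, season and solar activity.
print(round(iono_delay_m(10), 2))          # 1.62 (metres at L1)
print(round(iono_delay_m(10, L5_HZ), 2))   # larger at the lower L5 frequency
```

Because the delay scales with 1/f², a receiver tracking two frequencies can largely cancel it. A receiver relying on a single frequency has to fall back on a correction model, such as the simple one mentioned in the quote, and that is where better ionosphere maps help.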

"GPS and other navigation signals originate from satellites orbiting about 19,000-40,000 km above the earth. These signals largely travel undisturbed through space until they hit the plasma of the ionosphere. Just as walking down a crowded street can slow you down, higher free electron concentrations in the ionosphere slow and disperse satellite signals. A GPS receiver relies on extremely precise timing of the radio signal to work out the receiver position. So, the delays caused by the ionosphere lead to location errors. GPS receivers in most phones use a simple correction model that can remove about half of this error. We wondered whether advances in sensor technology available in today’s mobile phones, along with the large number of mobile phone users, could enable more detailed ionosphere mapping that could improve location accuracy."

The rise of the agents: LinkedIn launches its first AI agent to take on the role of job recruiters

“It’s designed to take on a recruiter’s most repetitive task so they can spend more time on the most impactful part of their jobs,” Hari Srinivasan, LinkedIn’s VP of product, said in an interview - “a big statement,” he admitted.

An impressive win- SQLite has been around a long time! Google's 'Big Sleep' AI Project Uncovers Real Software Vulnerabilities

“We believe this is the first public example of an AI agent finding a previously unknown exploitable memory-safety issue in widely used real-world software,” Google’s security researchers wrote in a blog post on Friday.

Lots of great tutorials and how-to’s this month

Want to explore AlphaFold, the groundbreaking protein folding algorithm?

ByteDance researchers have released a trainable PyTorch reproduction

And then DeepMind released the whole thing!

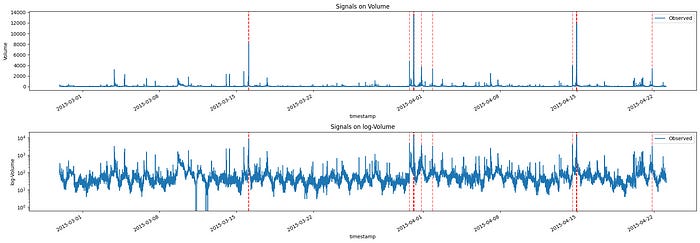

Detecting Anomalies in Social Media Volume Time Series - doing it from scratch with numpy and scipy
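Here is a minimal from-scratch sketch of one common approach, a trailing-window z-score, using only NumPy. It is not necessarily the method in the linked post, and the window, threshold and synthetic data are illustrative assumptions.

```python
import numpy as np


def rolling_zscore_anomalies(y, window=24, threshold=4.0):
    """Flag points more than `threshold` standard deviations from the trailing-window mean."""
    y = np.asarray(y, dtype=float)
    flags = np.zeros(len(y), dtype=bool)
    for i in range(window, len(y)):
        hist = y[i - window:i]              # only past values, so no look-ahead
        mu, sd = hist.mean(), hist.std(ddof=1)
        if sd > 0 and abs(y[i] - mu) / sd > threshold:
            flags[i] = True
    return flags


rng = np.random.default_rng(0)
volume = 100 + rng.normal(0, 1, 200)   # synthetic hourly post counts
volume[150] += 20                      # injected spike
flags = rolling_zscore_anomalies(volume)
```

When the history itself contains outliers, swapping the mean and standard deviation for a rolling median and MAD gives a more robust variant.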

How to Do Bad Biomarker Research … if you wanted to? Good read, with useful insights on statistical interpretation and sample sizes
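To see the information loss the quote below describes, here is a small sketch. It assumes remission is defined as HDRS ≤ 7 (our reading of the threshold) and uses simulated, not real, data. The cut-off puts 7 and 8 in different groups while lumping 8 and 25 together. It also weakens the measured association between a biomarker and the outcome.

```python
import numpy as np


def remission(hdrs, cutoff=7):
    # Dichotomise the Hamilton Depression Rating Scale: 1 if HDRS <= cutoff, else 0.
    return (np.asarray(hdrs) <= cutoff).astype(int)


print(remission([7, 8, 25]))   # [1 0 0]: 7 vs 8 split apart, 8 and 25 lumped together

# Simulated (not real) data: a biomarker correlated with a continuous HDRS score.
rng = np.random.default_rng(0)
n = 10_000
biomarker = rng.normal(size=n)
latent = 0.5 * biomarker + np.sqrt(0.75) * rng.normal(size=n)
hdrs = np.clip(np.round(14 + 5 * latent), 0, 52)

r_continuous = np.corrcoef(biomarker, hdrs)[0, 1]
r_dichotomised = np.corrcoef(biomarker, remission(hdrs))[0, 1]
# |r_dichotomised| comes out noticeably smaller: the cut throws information away.
```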

"So the investigators began with an ordinal response, then lost much of the information in HDRS by dichotomization. This loses information about the severity of depression and misses close calls around the HDRS=7 threshold. It treats patients with HDRS=7 and 8 as more different than patients with HDRS=8 and HDRS=25. No claim of differential treatment effect should be believed unless a dose-response relationship for the interacting factor can be demonstrated. The “dumbing-down” of HDRS reduces the effective sample size and makes the results highly depend on the cutoff of 7. Lower effective size means decreased reproducibility."

Speaking of statistical interpretation- excellent editorial in the American Statistician - Moving to a World Beyond “p < 0.05” - definitely worth a read

"This is a world where researchers are free to treat “p = 0.051” and “p = 0.049” as not being categorically different, where authors no longer find themselves constrained to selectively publish their results based on a single magic number. In this world, where studies with “p < 0.05” and studies with “p > 0.05” are not automatically in conflict, researchers will see their results more easily replicated—and, even when not, they will better understand why."

Intriguing paper on avoiding over-fitting: “Why you don’t overfit, and don’t need Bayes if you only train for one epoch”

"In particular, we show that Bayesian inference can be understood as minimizing the expected log-likelihood under data drawn from the true data generating process, i.e. minimizing the expected test-loss. Critically, we show that in the data-rich setting where we only have one epoch, we can equivalently optimize the exact same objective, simply using standard “maximum likelihood” training"

It can be hard to find hands-on Reinforcement Learning tutorials, so nice to see this: Using Reinforcement Learning and $4.80 of GPU Time to Find the Best HN Post Ever

"In this post we’ll discuss how to build a reward model that can predict the upvote count that a specific HN story will get. And in follow-up posts in this series, we’ll use that reward model along with reinforcement learning to create a model that can write high-value HN stories!"

Need a diagram? How To Create Software Diagrams With ChatGPT and Claude

I’m sure many are attempting to tune prompts… useful playbook

"The “art” of prompting, much like the broader field of deep learning, is empirical at best and alchemical at worst. While LLMs are rapidly transforming numerous applications, effective prompting strategies remain an open question for the field. This document was born out of a few years of working with LLMs, and countless requests for prompt engineering assistance. It represents an attempt to consolidate and share both helpful intuitions and practical prompting techniques."

Getting LLMs to pull data from databases is increasingly possible but still very flaky- this looks worth exploring if you are attempting it

"To tackle the challenges of large language model performance in natural language to SQL tasks, we introduce XiYan-SQL, an innovative framework that employs a multi-generator ensemble strategy to improve candidate generation. We introduce M-Schema, a semi-structured schema representation method designed to enhance the understanding of database structures."

Understanding flow-based generative models- Flow with What You Know

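The core recipe behind rectified flow and flow matching runs like this. Draw noise x0 and data x1, interpolate x_t = (1 - t) x0 + t x1, and train a network to predict the velocity x1 - x0. You then generate samples by integrating dx/dt = v(x, t) from t = 0 to 1. Take a 1-D toy where the noise is N(0, 1) and the "data" is N(mu, 1). There, the optimal velocity (the expected value of x1 - x0 given x_t) has a closed form. This sketch therefore skips training and just runs the Euler integration. The Gaussian setup and the step count are our own assumptions.

```python
import numpy as np


def marginal_velocity(x, t, mu):
    # E[x1 - x0 | x_t = x] for x0 ~ N(0, 1), x1 ~ N(mu, 1) drawn independently:
    # x_t has mean t*mu and variance (1 - t)^2 + t^2, and Cov(x1 - x0, x_t) = 2t - 1.
    var_t = (1 - t) ** 2 + t ** 2
    return mu + (2 * t - 1) / var_t * (x - t * mu)


def sample(n=20_000, mu=3.0, steps=100, seed=0):
    rng = np.random.default_rng(seed)
    x = rng.normal(size=n)              # start from noise at t = 0
    dt = 1.0 / steps
    for k in range(steps):
        x = x + dt * marginal_velocity(x, k * dt, mu)   # explicit Euler step
    return x


samples = sample()
```

Fewer Euler steps are needed when the learned trajectories are nearly straight, which is the motivation behind the one- or two-step results mentioned in the excerpt.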
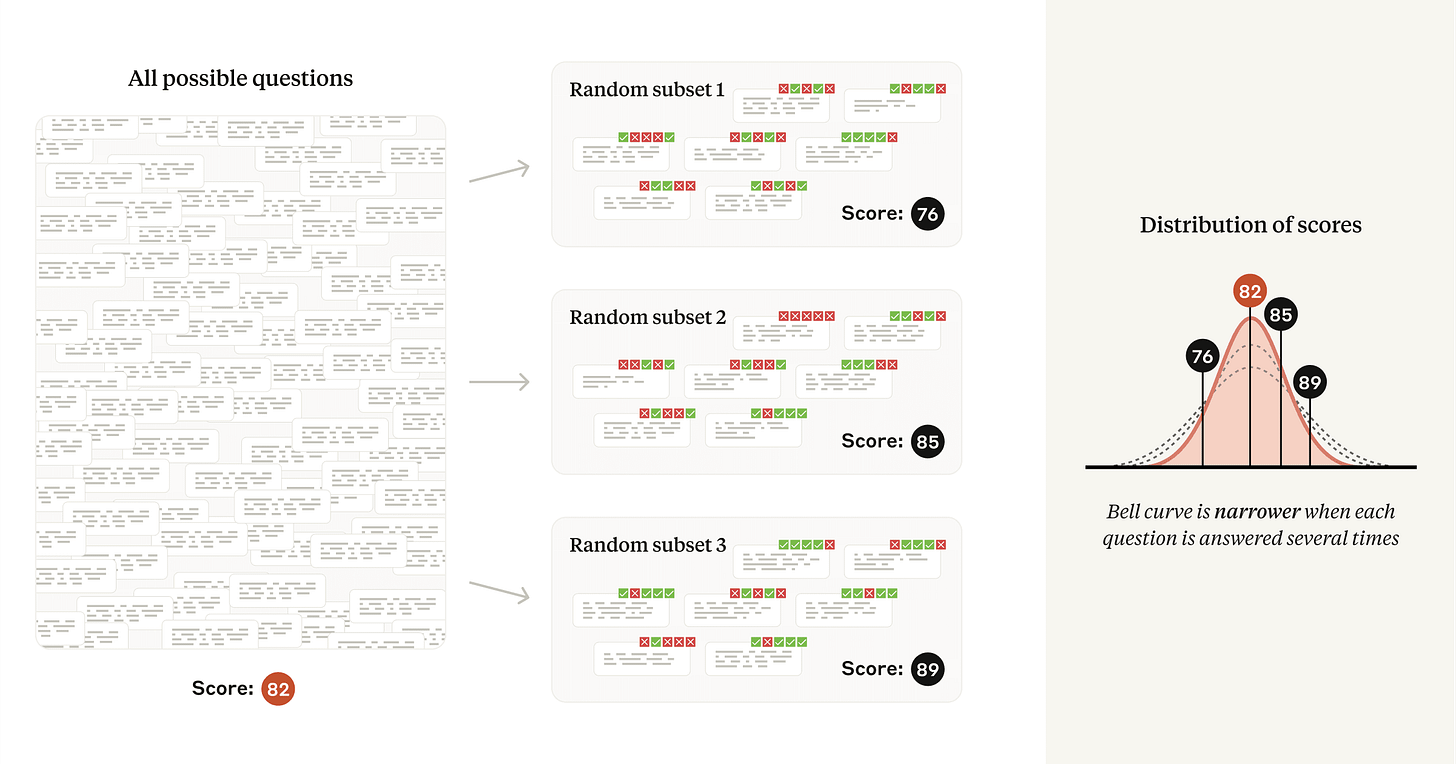

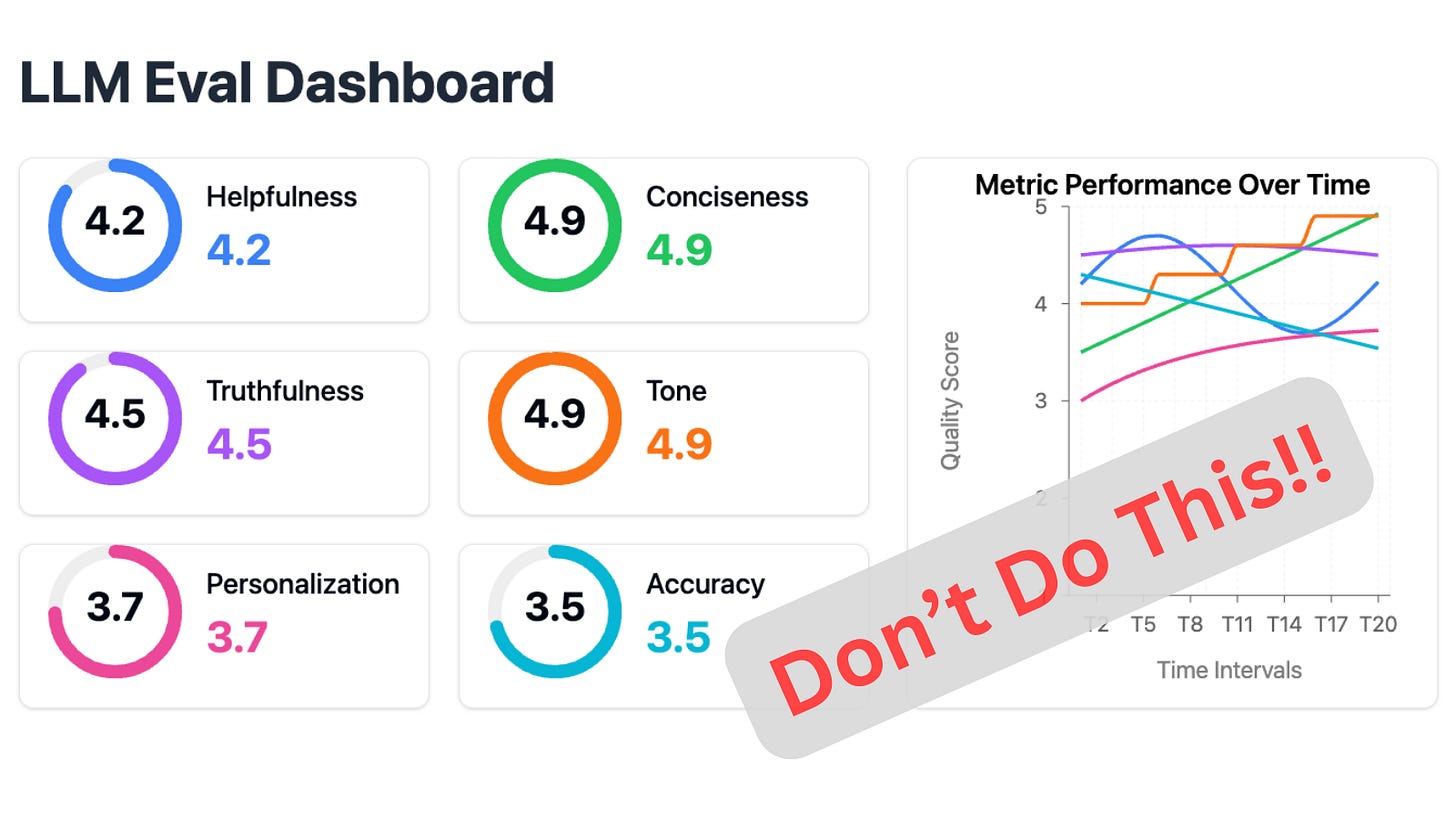

"Flow-based generative AI models have been gaining significant traction as alternatives or improvements to traditional diffusion approaches in image and audio synthesis. These flow models excel at learning optimal trajectories for transforming probability distributions, offering a mathematically elegant framework for data generation. The approach has seen renewed momentum following Black Forest Labs’ success with their FLUX models [1], spurring fresh interest in the theoretical foundations laid by earlier work on Rectified Flows [2] in ICLR 2023. Improvements such as [3] have even reached the level of state-of-the-art generative models for one or two-step generation."How should we be evaluating model outputs? Good post from Anthropic with some solid practical tips

Finally, if you are building an AI application, this is essential reading on evaluations: Creating a LLM-as-a-Judge That Drives Business Results
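One practical check when building an LLM judge is how well it agrees with human labels beyond chance. Here is a minimal sketch of Cohen's kappa on pass/fail labels. The labels are made up, and the linked article may recommend different metrics.

```python
from collections import Counter


def cohens_kappa(human, judge):
    """Agreement between two raters beyond what their label frequencies imply by chance."""
    if len(human) != len(judge) or not human:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(human)
    observed = sum(h == j for h, j in zip(human, judge)) / n
    h_counts, j_counts = Counter(human), Counter(judge)
    expected = sum(h_counts[k] * j_counts[k] for k in h_counts.keys() | j_counts.keys()) / n ** 2
    if expected == 1.0:
        raise ValueError("kappa is undefined when both raters always give the same single label")
    return (observed - expected) / (1 - expected)


human = ["pass", "pass", "fail", "fail"]
judge = ["pass", "fail", "fail", "fail"]
kappa = cohens_kappa(human, judge)   # 0.5: 75% raw agreement, 50% expected by chance
```

Raw accuracy can look flattering when most outputs pass. Kappa corrects for that by comparing observed agreement against chance agreement.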

Practical tips

How to drive analytics, ML and AI into production

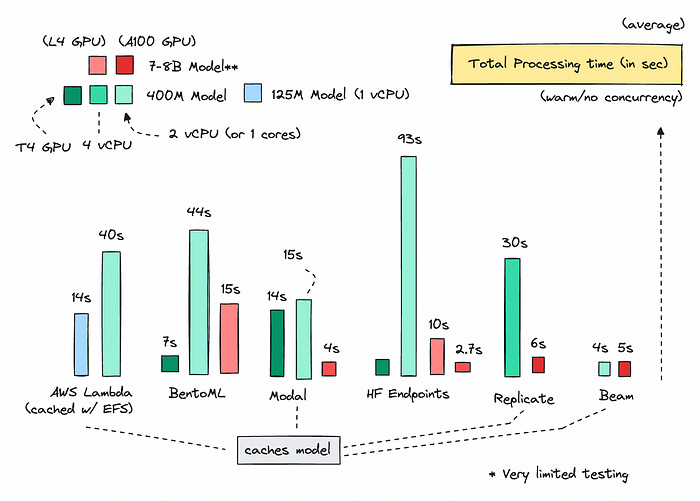

How much does it really cost to host your own open source LLM? Good post: Economics of Hosting Open Source LLMs
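The core arithmetic is simple but easy to get wrong, because you pay for GPU hours whether or not they are busy. Here is a back-of-the-envelope sketch with hypothetical prices and throughput, not figures from the linked post.

```python
def cost_per_million_tokens(gpu_hourly_usd, tokens_per_second, utilisation=1.0):
    """Serving cost per 1M generated tokens for a self-hosted model.

    gpu_hourly_usd: what you pay per hour for the GPU(s), busy or idle.
    tokens_per_second: aggregate throughput while busy (all concurrent requests).
    utilisation: fraction of paid hours the GPU is actually serving traffic.
    """
    tokens_per_hour = tokens_per_second * 3600 * utilisation
    return gpu_hourly_usd / tokens_per_hour * 1_000_000


# Hypothetical numbers purely for illustration.
busy = cost_per_million_tokens(2.0, 1000)                       # about $0.56 per 1M tokens
half_idle = cost_per_million_tokens(2.0, 1000, utilisation=0.5)
```

Halving utilisation doubles the effective cost per token, which often decides the comparison with per-token API pricing.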

Incremental Jobs and Data Quality Are On a Collision Course

"Bad things happen when uncontrolled changes collide with incremental jobs that feed their output back into other software systems or pollute other derived data sets. Reacting to changes is a losing strategy."

Still encouraging people to try polars! The Polars vs pandas difference nobody is talking about

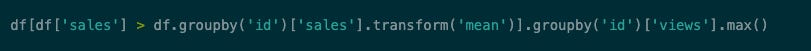

This sounds counter-intuitive when you first look at it, but does make sense (I think!)- What Shapes Do Matrix Multiplications Like?

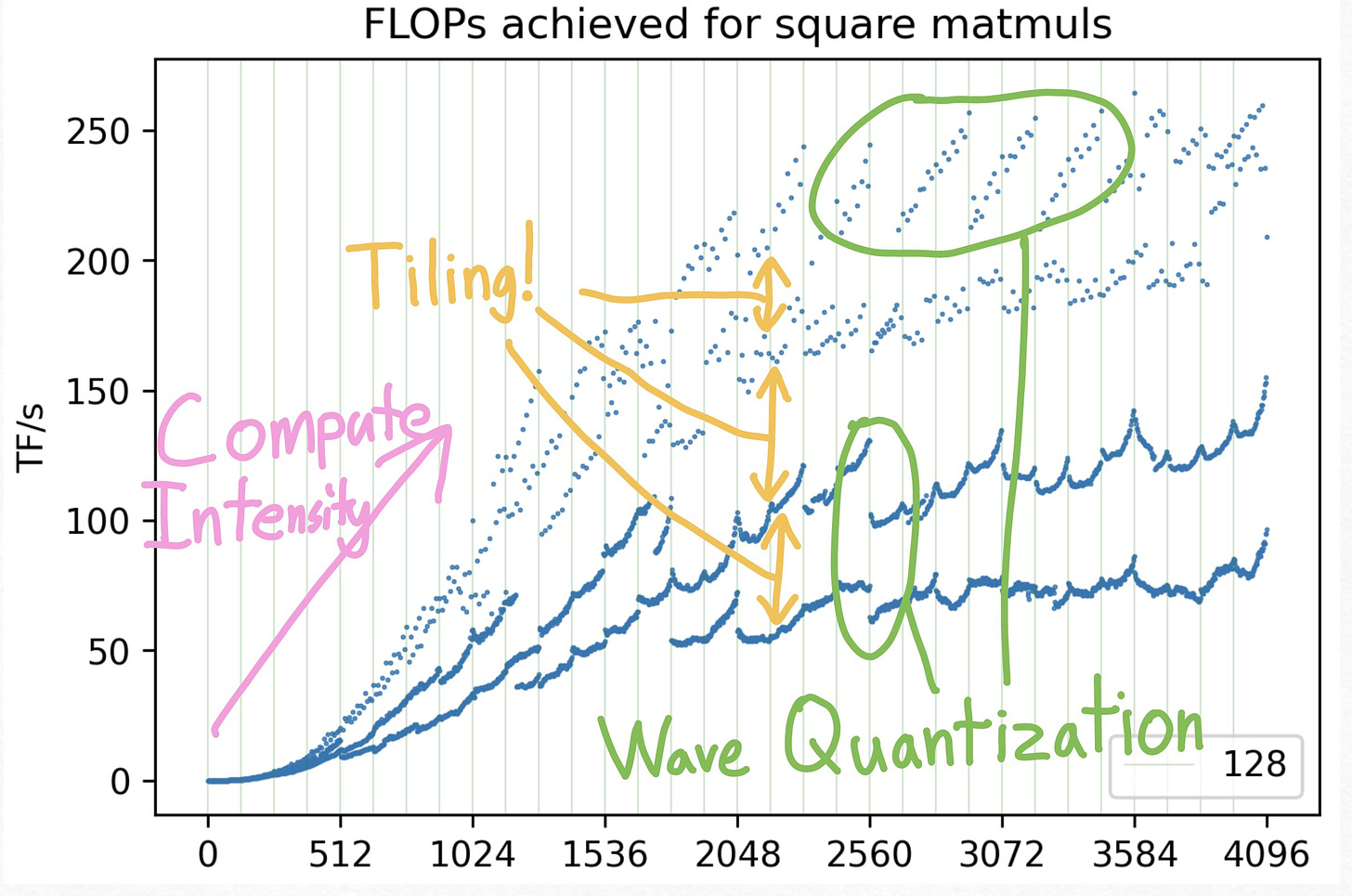

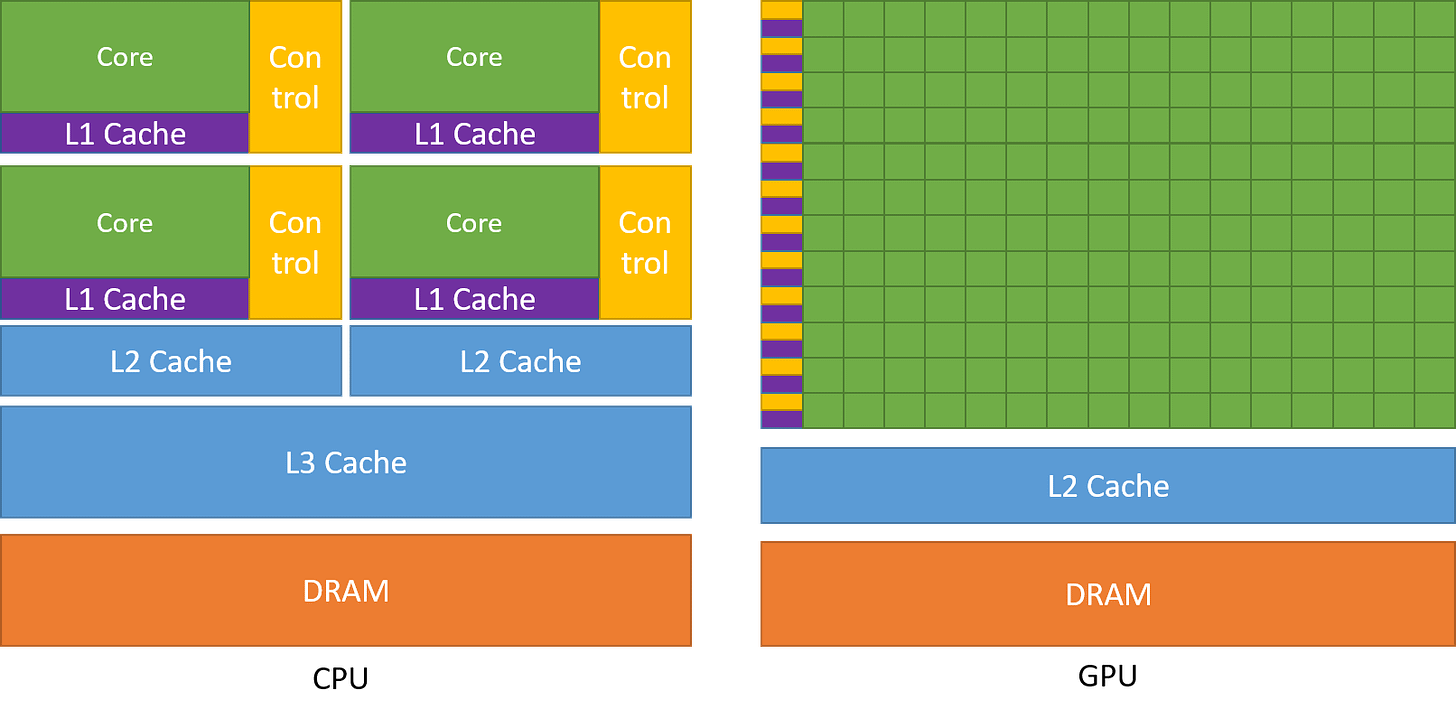
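One effect often discussed in this context is tile quantisation. GPU matrix-multiply kernels compute the output in fixed-size tiles, so a dimension just past a tile boundary pays for a whole extra row of tiles. The tile size of 128 below is an assumption, since real kernels choose different tile shapes. The sketch also ignores other effects, such as memory alignment, that matter in practice.

```python
import math


def tile_utilisation(m, n, tile=128):
    """Fraction of the padded (m x n) output tiles that does useful work."""
    padded_m = math.ceil(m / tile) * tile
    padded_n = math.ceil(n / tile) * tile
    return (m * n) / (padded_m * padded_n)


for m in (1024, 1025, 1152):
    print(m, round(tile_utilisation(m, 1024), 3))
```

Going from 1024 to 1025 rows adds a single row of useful work but a whole extra row of tiles. That is one reason "round" sizes can run faster than slightly smaller or larger ones.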

Excellent read - What Every Developer Should Know About GPU Computing

Viva Pythonista! Octoverse: AI leads Python to top language as the number of global developers surges - and Google’s head of research still thinks it's a good idea to learn to code!

"In 2024, Python overtook JavaScript as the most popular language on GitHub, while Jupyter Notebooks skyrocketed—both of which underscore the surge in data science and machine learning on GitHub. We’re also seeing increased interest in AI agents and smaller models that require less computational power, reflecting a shift across the industry as more people focus on new use cases for AI."

Bigger picture ideas

Longer thought provoking reads - sit back and pour a drink! ...

AI companies hit a scaling wall - Casey Newton (more on this topic here)

"Over the past week, several stories sourced to people inside the big AI labs have reported that the race to build superintelligence is hitting a wall. Specifically, they say, the approach that has carried the industry from OpenAI’s first large language model to the LLMs we have today has begun to show diminishing returns."

The Present Future: AI's Impact Long Before Superintelligence - Ethan Mollick

"But these are not uncontroversial assertions, and we do not know if they are right. Yet, in many ways, we do not need super-powerful AIs for the transformation of work. We already have more capabilities inherent in today’s Gen2/GPT-4 class systems than we have fully absorbed. Even if AI development stopped today, we would have years of change ahead of us integrating these systems into our world."

The Subprime AI Crisis - Ed Zitron (hat tip Kate Land!)

"There won’t be much levity in this piece. I’m going to paint you a bleak picture — not just for the big AI players, but for tech more widely, and for the people who work at tech companies — and tell you why I think the conclusion to this sordid saga, as brutal and damaging as it will be, is coming sooner than you think."

Fun Practical Projects and Learning Opportunities

A few fun practical projects and topics to keep you occupied/distracted:

Visually stunning - method for random gradients

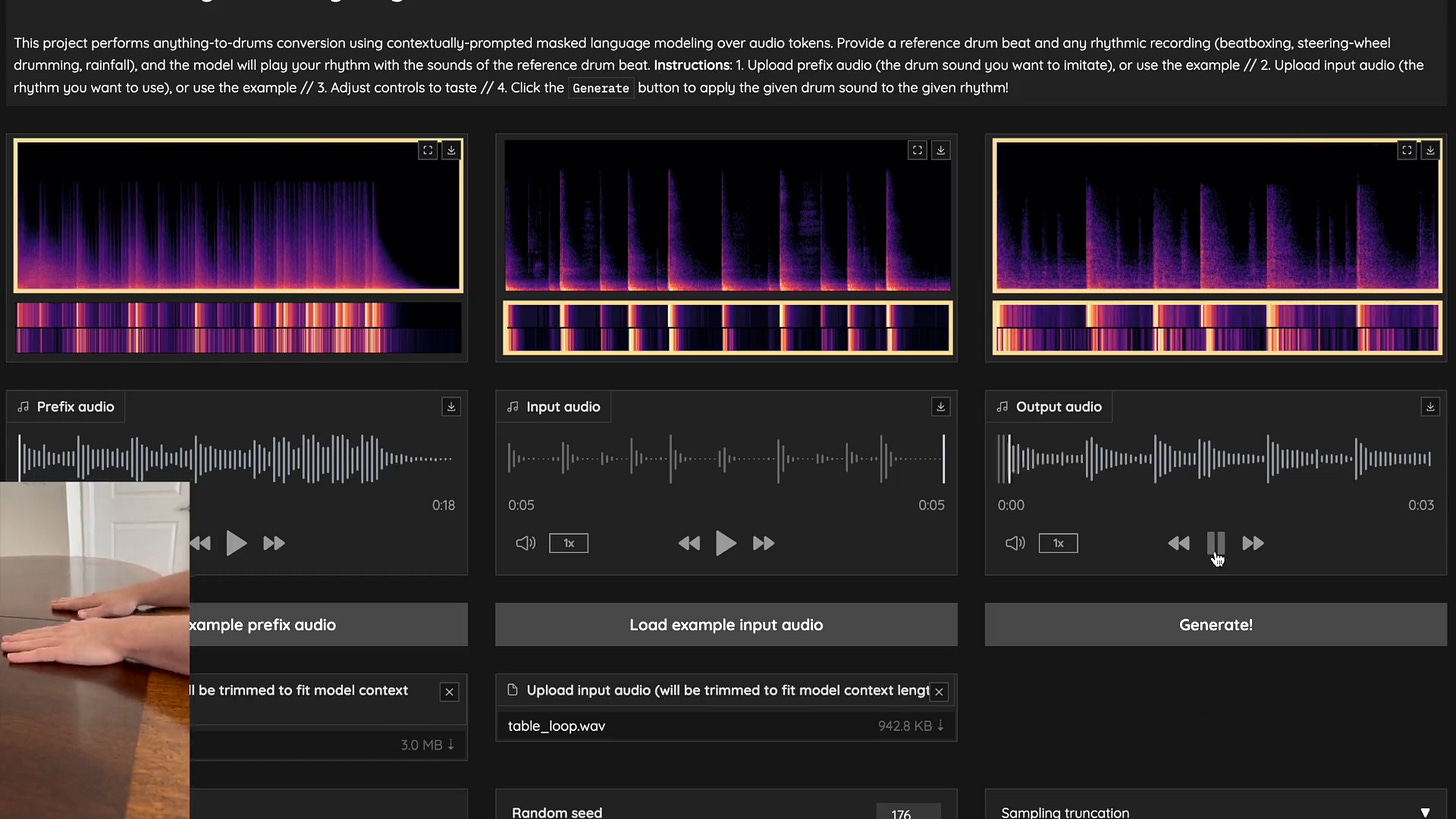

"Today, AI image generation models, which didn’t exist when Ben and I first experimented with gradients, open up a wealth of possibilities. While the process of using them can be unpredictable—more akin to a slot machine than exact science—they offer exciting opportunities, and the technology and its tools are only getting better. Below, I demonstrate three proofs of concept for generating textural gradient images, using OpenAI’s DALL·E 2 model (a favorite of mine since its release in April 2022)."Fun with music! Zero-Shot Anything-to-Drums Conversion

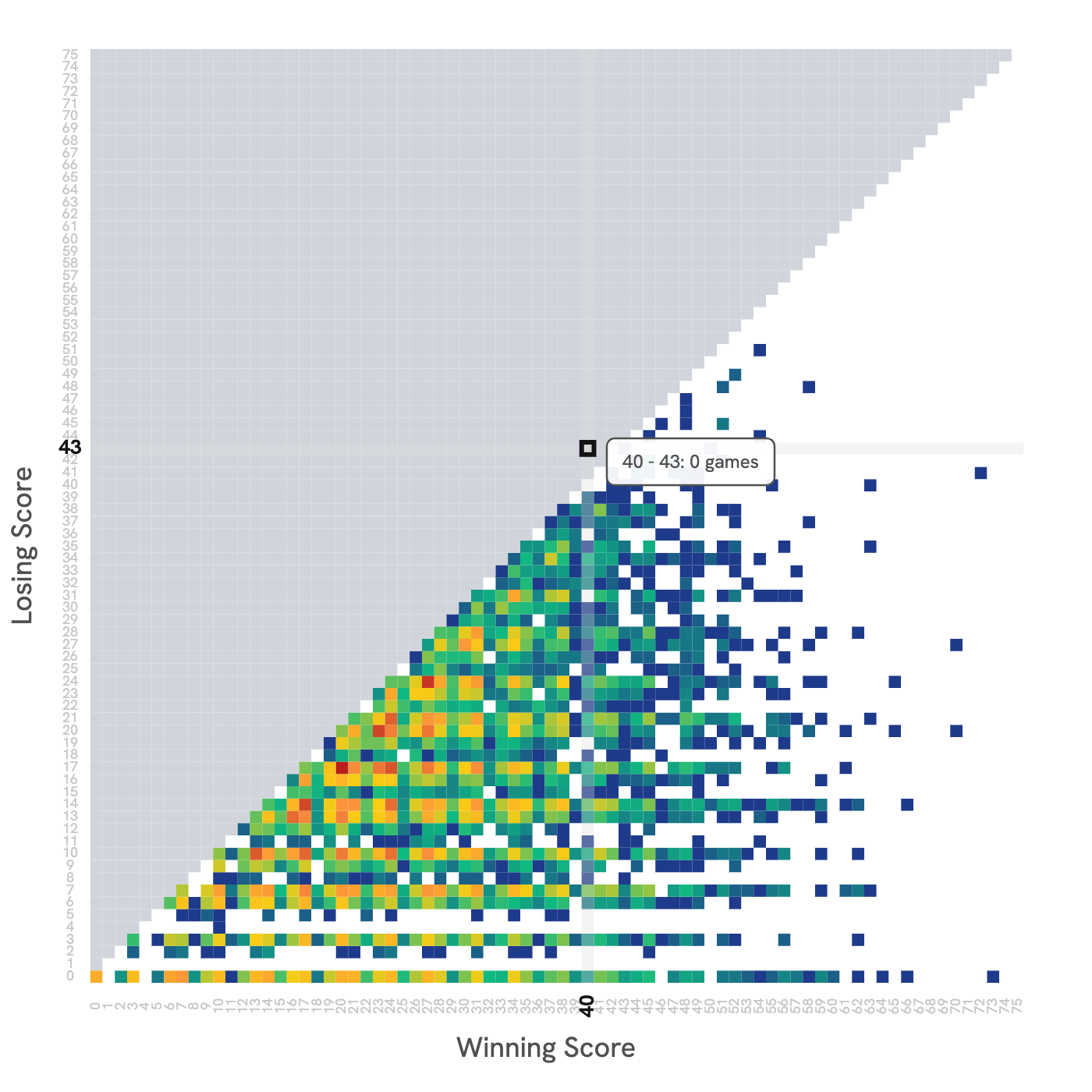

How to Tackle the Weekend Quiz Like a Bayesian
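The trick the quiz question below relies on is base rates: posterior odds = prior odds × likelihood ratio. Here is a tiny sketch with entirely made-up numbers for how many literature professors are in Frankfurt versus truck drivers in Germany, and for how well the description fits each. It shows how a very unequal prior can swamp a description that strongly favours one answer.

```python
def posterior_odds(prior_a, prior_b, lik_a, lik_b):
    """Posterior odds of A versus B: prior odds times likelihood ratio (Bayes' rule)."""
    return (prior_a / prior_b) * (lik_a / lik_b)


# All numbers below are made up purely for illustration.
n_lit_professors_frankfurt = 50
n_truck_drivers_germany = 500_000
p_desc_given_professor = 0.5     # thin, glasses, likes Mozart
p_desc_given_driver = 0.01

odds_professor = posterior_odds(n_lit_professors_frankfurt, n_truck_drivers_germany,
                                p_desc_given_professor, p_desc_given_driver)
# 0.005, i.e. under these assumptions "truck driver" is about 200 times more likely
```

Under these assumptions the description is 50 times more likely for a professor, but the 10,000-to-1 prior still wins.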

"Mark is a thin man from Germany with glasses who likes to listen to Mozart. Which is more likely? That Mark is (A) a truck driver or (B) a professor of literature in Frankfurt?"

Build a fun game from scratch - Indy world (a knock-off Wumpus world) - hat tip Simon Raper

    --------------------
    Indy is on row 1 and column 1
    nothing here
    up is blocked
    down is worth exploring
    left is blocked
    right is worth exploring
    Choosing down
    --------------------

Updates from Members and Contributors

Steve Haben at the Energy Systems Catapult draws our attention to what looks like being an interesting AI for Net Zero Conference in December (16-19) in Exeter: more details here

Harald Carlens recently published this interesting post on Machine Learning and Mathematics

Sarah Phelps, Policy Advisor at the ONS, is helping organise the next Web Intelligence Network Conference, “From Web to Data”, on 4-5th February in Gdansk, Poland. Anyone interested in generating or augmenting their statistics with web data should definitely check it out.

Fiona Spooner, senior data scientist at Our World in Data (such a great organisation!) mentions that they have just released a new chart data API, making it much easier to programmatically access the Our World in Data information - there goes my weekend! More information in the blog post here

Jobs and Internships!

The job market is a bit quiet - let us know if you have any openings you'd like to advertise

Two Lead AI Engineers in the Incubator for AI at the Cabinet Office- sounds exciting (other adjacent roles here)!

2 exciting new machine learning roles at Carbon Re- an excellent climate change AI startup

Evolution AI is looking to hire someone for applied deep learning research. Must like a challenge. Any background but needs to know how to do research properly. Remote. Apply here

Data Internships - a curated list

Again, hope you found this useful. Please do send on to your friends- we are looking to build a strong community of data science practitioners- and sign up for future updates here

- Piers

The views expressed are our own and do not necessarily represent those of the RSS