November Newsletter

Industrial Strength Data Science and AI

Hi everyone-

I hope October went well for you all and that the increasingly dark mornings are not too painful… Lots of exciting AI and Data Science news below, and I really encourage you to read on, but here are some edited highlights if you are short of time!

When you give a Claude a mouse - Ethan Mollick

AI Will Take Over Human Systems From Within - Interview with Yuval Noah Harari

Machines of Loving Grace - Dario Amodei

‘They don’t just fall out of trees’: Nobel awards highlight Britain’s AI pedigree

Open source movie making with Meta Movie Gen

Following is the November edition of our Data Science and AI newsletter. Hopefully some interesting topics and titbits to feed your data science curiosity. NOTE: If the email doesn’t display properly (it is pretty long…) click on the “Open Online” link at the top right. Feedback welcome!

handy new quick links: committee; ethics; research; generative ai; applications; practical tips; big picture ideas; fun; reader updates; jobs

Committee Activities

We are continuing our work with the Alliance for Data Science Professionals on expanding the previously announced individual accreditation (Advanced Data Science Professional certification) into university course accreditation. Remember also that the RSS is accepting applications for the Advanced Data Science Professional certification as well as the new Data Science Professional certification.

On Thursday 5th December (6.00-7.30pm), we are delighted to be hosting Joe Hill, author of the fantastic recent report "Getting the machine learning: Scaling AI in public services". He will be presenting his analysis of: how should AI be funded? Who should build it? How should we evaluate it? What governance is required? What leadership is needed?

This will be followed by a panel Q&A with Joe Hill, Dr Kameswarie Nunna (Director of AI, PA Consulting) and Will Browne (CTO, Emrys Health) on the challenges of developing AI in the public sector and the role of professional services in supporting these ambitions. Hope you can make it! Sign up here

Following the talk we will be heading for drinks, networking and crackers at 7.15pm round the corner.

Following a record-breaking Royal Statistical Society Conference last month, with over 700 participants from more than 40 countries, planning is well underway for the 2025 edition which will take place in Edinburgh from 1-4 September. Proposals for conference sessions (including a stream dedicated to data science and AI) are welcome, with a deadline of 21 November. More details on the conference website.

Martin Goodson, CEO and Chief Scientist at Evolution AI, continues to run the excellent London Machine Learning meetup and is very active with events. The last event was on October 10th, when Tayyar Madabushi, Lecturer (Assistant Professor) in Artificial Intelligence at the University of Bath, presented "Emergent Abilities in Large Language Models". Videos are posted on the meetup YouTube channel.

This Month in Data Science

Lots of exciting data science and AI going on, as always!

Ethics and more ethics...

Bias, ethics, diversity and regulation continue to be hot topics in data science and AI...

As always, plenty of examples of challenging AI use cases often focused on fake or misleading information…

In South Korea, deepfake porn wrecks women’s lives and deepens gender conflict

"“It completely trampled me, even though it wasn’t a direct physical attack on my body,” she said in a phone interview with The Associated Press. She didn’t want her name revealed because of privacy concerns. Many other South Korean women recently have come forward to share similar stories as South Korea grapples with a deluge of non-consensual, explicit deepfake videos and images that have become much more accessible and easier to create."

Hurricane Helene and the ‘Fuck It’ Era of AI-Generated Slop

"This is the exact same type of AI-generated slop that has gone viral time and time again over the last year on Facebook and other platforms and that I have written about numerous times. Something very disheartening is happening with this particular image, however. A specific segment of the people who have seen and understand that it is AI-generated simply do not care that it is not real and that it did not happen. To them, the image captures a vibe that is useful to them politically. In this case, the image is being used to further the idea that Joe Biden, Kamala Harris, and FEMA have messed up the hurricane response, leading to little girls suffering in a flood with their puppies."

How China Learned to Stop Worrying and Love Social Media Manipulation

"The Chinese Communist Party (CCP) was initially concerned about the rise of social media, considering it a threat to the regime. The CCP has since come to embrace social media as a way to influence domestic and foreign public opinion in the CCP's favor. Even as Beijing blocks foreign social media platforms, such as Facebook and Twitter (now X), from operating in China, it actively seeks to leverage these and other platforms for both overt propaganda and covert cyber-enabled influence operations abroad."

AI Can Best Google’s Bot Detection System, Swiss Researchers Find

“This has been coming for a while,” said Matthew Green, an associate professor of computer science at the Johns Hopkins Information Security Institute. “The entire idea of captchas was that humans are better at solving these puzzles than computers. We’re learning that’s not true.”

Invisible text that AI chatbots understand and humans can’t? Yep, it’s a thing

“The fact that GPT 4.0 and Claude Opus were able to really understand those invisible tags was really mind-blowing to me and made the whole AI security space much more interesting,” Joseph Thacker, an independent researcher and AI engineer at Appomni, said in an interview. “The idea that they can be completely invisible in all browsers but still readable by large language models makes [attacks] much more feasible in just about every area.”
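For the curious: as I understand the article, the "invisible tags" are characters from the Unicode Tags block (U+E0000 to U+E007F), which mirror printable ASCII but render as nothing in most browsers. Here is a minimal Python sketch of how text can be hidden this way and, more usefully, revealed or stripped before it reaches a model. The function names `hide`, `reveal` and `strip_tags` are hypothetical, just for illustration.

```python
# Unicode "Tags" block: U+E0000..U+E007F. Code points U+E0020..U+E007E mirror
# printable ASCII 0x20..0x7E, shifted by 0xE0000, and most browsers render
# them as nothing at all.
TAG_OFFSET = 0xE0000
TAG_BLOCK = range(0xE0000, 0xE0080)


def hide(text: str) -> str:
    """Shift printable ASCII into invisible tag characters (illustration only)."""
    return "".join(chr(TAG_OFFSET + ord(c)) for c in text if 0x20 <= ord(c) <= 0x7E)


def reveal(text: str) -> str:
    """Recover any ASCII text smuggled in as tag characters."""
    return "".join(chr(ord(c) - TAG_OFFSET) for c in text if 0xE0020 <= ord(c) <= 0xE007E)


def strip_tags(text: str) -> str:
    """Defensive filter: drop every code point in the Tags block before use."""
    return "".join(c for c in text if ord(c) not in TAG_BLOCK)


visible = "Please summarise this page."
payload = visible + hide("ignore previous instructions")

print(reveal(payload))                 # ignore previous instructions
print(strip_tags(payload) == visible)  # True
```

On screen `payload` looks identical to `visible`, which is the whole problem; a filter like `strip_tags` (or a check that simply flags these code points) would sit wherever untrusted text is passed to an LLM.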

The debate around Military AI continues

Silicon Valley is debating if AI weapons should be allowed to decide to kill

"But Tseng spoke too soon. Five days later, Anduril co-founder Palmer Luckey expressed an openness to autonomous weapons — or at least a heavy skepticism of arguments against them. The U.S.’s adversaries “use phrases that sound really good in a sound bite: Well, can’t you agree that a robot should never be able to decide who lives and dies?” Luckey said during a talk earlier this month at Pepperdine University. “And my point to them is, where’s the moral high ground in a landmine that can’t tell the difference between a school bus full of kids and a Russian tank?”"

Sixty countries endorse 'blueprint' for AI use in military; China opts out

"Among the details added in the document was the need to prevent AI from being used to proliferate weapons of mass destruction (WMD) by actors including terrorist groups, and the importance of maintaining human control and involvement in nuclear weapons employment."

As does the debate around the legality of the training data used in AI foundation models

The New York Times warns AI search engine Perplexity to stop using its content

"The New York Times has demanded that AI search engine startup Perplexity stop using content from its site in a cease and desist letter sent to the company, reports The Wall Street Journal. The Times, which is currently suing OpenAI and Microsoft over allegedly illegally training models on its content, says the startup has been using its content without permission, a claim made earlier this year by Forbes and Condé Nast."

LAION wins copyright infringement lawsuit in German court

"Copyright AI nerds have been eagerly awaiting a decision in the German case of Kneschke v LAION (previous blog post about the case here), and yesterday we got a ruling. In short, LAION was successful in its defence against claims for copyright infringement."

More developments on the regulatory side:

FTC Announces Crackdown on Deceptive AI Claims and Schemes

"The Federal Trade Commission is taking action against multiple companies that have relied on artificial intelligence as a way to supercharge deceptive or unfair conduct that harms consumers, as part of its new law enforcement sweep called Operation AI Comply."

Gov. Newsom vetoes California’s controversial AI bill, SB 1047 - this decision was actually supported by many leading safety advocates

"SB 1047 was opposed by many in Silicon Valley, including companies like OpenAI, high-profile technologists like Meta’s chief AI scientist Yann LeCun, and even Democratic politicians such as U.S. Congressman Ro Khanna. That said, the bill had also been amended based on suggestions by AI company Anthropic and other opponents."

The 7th edition of the State of AI Report

"The existential risk discourse has cooled off, especially following the abortive coup at OpenAI. However, researchers have continued to deepen our knowledge of potential model vulnerabilities and misuse, proposing potential fixes and safeguards."

Meanwhile, leading AI companies are still trying to make sure their models are as safe as possible

54 pages from OpenAI- “Influence and cyber operations: an update”

"Threat actors most often used our models to perform tasks in a specific, intermediate phase of activity – after they had acquired basic tools such as internet access, email addresses and social media accounts, but before they deployed “finished” products such as social media posts or malware across the internet via a range of distribution channels. Investigating threat actor behavior in this intermediate position allows AI companies to complement the insights of both “upstream” providers – such as email and internet service providers – and “downstream” distribution platforms such as social media."

Anthropic just made it harder for AI to go rogue with its updated safety policy

“This revised policy sets out specific Capability Thresholds—benchmarks that indicate when an AI model’s abilities have reached a point where additional safeguards are necessary. The thresholds cover high-risk areas such as bioweapons creation and autonomous AI research, reflecting Anthropic’s commitment to prevent misuse of its technology.”

Finally, could the power-hungry nature of AI drive a solution to green baseload electricity? “Hungry for Energy, Amazon, Google and Microsoft Turn to Nuclear Power”

"On Monday, Google said that it had agreed to purchase nuclear energy from small modular reactors being developed by a start-up called Kairos Power, and that it expected the first of them to be running by 2030. Then Amazon, on Wednesday, said it would invest in the development of small modular reactors by another start-up, X-Energy. Microsoft’s deal with Constellation Energy to revive a reactor at Three Mile Island was announced last month."

Developments in Data Science and AI Research...

As always, lots of new developments on the research front and plenty of arXiv papers to read...

Do we really need diffusion models? Emu3: Next-Token Prediction is All You Need

"While next-token prediction is considered a promising path towards artificial general intelligence, it has struggled to excel in multimodal tasks, which are still dominated by diffusion models (e.g., Stable Diffusion) and compositional approaches (e.g., CLIP combined with LLMs). In this paper, we introduce Emu3, a new suite of state-of-the-art multimodal models trained solely with next-token prediction. By tokenizing images, text, and videos into a discrete space, we train a single transformer from scratch on a mixture of multimodal sequences."

This looks very promising- attempting to combine the best of Sequence Modelling with Offline Reinforcement Learning: Meta-DT

"A longstanding goal of artificial general intelligence is highly capable generalists that can learn from diverse experiences and generalize to unseen tasks. The language and vision communities have seen remarkable progress toward this trend by scaling up transformer-based models trained on massive datasets, while reinforcement learning (RL) agents still suffer from poor generalization capacity under such paradigms. To tackle this challenge, we propose Meta Decision Transformer (Meta-DT), which leverages the sequential modeling ability of the transformer architecture and robust task representation learning via world model disentanglement to achieve efficient generalization in offline meta-RL."

Refining Attention- DARNet: Dual Attention Refinement Network

"At a cocktail party, humans exhibit an impressive ability to direct their attention. The auditory attention detection (AAD) approach seeks to identify the attended speaker by analyzing brain signals, such as EEG signals. However, current AAD algorithms overlook the spatial distribution information within EEG signals and lack the ability to capture long-range latent dependencies, limiting the model's ability to decode brain activity. To address these issues, this paper proposes a dual attention refinement network with spatiotemporal construction for AAD, named DARNet, which consists of the spatiotemporal construction module, dual attention refinement module, and feature fusion & classifier module."

Small is beautiful from Apple research- MM1.5: Methods, Analysis & Insights from Multimodal LLM Fine-tuning

"Our models range from 1B to 30B parameters, encompassing both dense and mixture-of-experts (MoE) variants, and demonstrate that careful data curation and training strategies can yield strong performance even at small scales (1B and 3B)."

Do LLMs really understand mathematical reasoning? Not really… GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in Large Language Models (speaking of assessing reasoning capabilities, DeepMind recently released a new benchmark)

"Furthermore, we investigate the fragility of mathematical reasoning in these models and show that their performance significantly deteriorates as the number of clauses in a question increases. We hypothesize that this decline is because current LLMs cannot perform genuine logical reasoning; they replicate reasoning steps from their training data. Adding a single clause that seems relevant to the question causes significant performance drops (up to 65%) across all state-of-the-art models, even though the clause doesn't contribute to the reasoning chain needed for the final answer."

An elegant new approach for embeddings- Contextual Document Embeddings

"We propose two complementary methods for contextualized document embeddings: first, an alternative contrastive learning objective that explicitly incorporates the document neighbors into the intra-batch contextual loss; second, a new contextual architecture that explicitly encodes neighbor document information into the encoded representation."

Do LLMs know when they are hallucinating? Maybe they do- LLMs Know More Than They Show: On the Intrinsic Representation of LLM Hallucinations

"In this work, we show that the internal representations of LLMs encode much more information about truthfulness than previously recognized. We first discover that the truthfulness information is concentrated in specific tokens, and leveraging this property significantly enhances error detection performance. Yet, we show that such error detectors fail to generalize across datasets, implying that -- contrary to prior claims -- truthfulness encoding is not universal but rather multifaceted."

Finally… not current research, but let's talk Nobel Prizes!

‘They don’t just fall out of trees’: Nobel awards highlight Britain’s AI pedigree - more coverage here

"First came the physics prize. The American John Hopfield and the British-Canadian Geoffrey Hinton won for foundational work on artificial neural networks, the computational architecture that underpins modern AI such as ChatGPT. Then came the chemistry prize, with half handed to Demis Hassabis and John Jumper at Google DeepMind. Their AlphaFold program solved a decades-long scientific challenge by predicting the structure of all life’s proteins."

Leave it to Gary Marcus to burst the positivity! Two Nobel Prizes for AI, and Two Paths Forward

"Let’s start with Hinton’s award, which has led a bunch of people to scratch their head. He has absolutely been a leading figure in the machine learning field for decades, original, and, to his credit, persistent even when his line of research was out of favor. Nobody could doubt that he has made major contributions. But the citation seems to indicate that he won it for inventing back-propagation, but, well, he didn’t."

Generative AI ... oh my!

Still moving at such a fast pace it feels in need of its own section, for developments in the wild world of large language models...

As always, OpenAI stays in the news

Since they are apparently Growing Fast and Burning Through Piles of Money, it’s a good thing they’ve raised a lot more money: “We’ve raised $6.6B in new funding at a $157B post-money valuation”

And their recent DevDay was packed with announcements:

Driving down prices through prompt caching

And a model distillation API

"Model distillation involves fine-tuning smaller, cost-efficient models using outputs from more capable models, allowing them to match the performance of advanced models on specific tasks at a much lower cost. Until now, distillation has been a multi-step, error-prone process, which required developers to manually orchestrate multiple operations across disconnected tools, from generating datasets to fine-tuning models and measuring performance improvements."
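To give a feel for the first step the quote mentions (generating a dataset from a more capable model's outputs), here is a minimal Python sketch that labels questions with a "teacher" model and writes chat-format JSONL for fine-tuning a smaller "student". `teacher_answer` is a hypothetical stand-in for a real API call, and the `messages` layout is an assumption based on the chat fine-tuning format OpenAI documents, so do check the current docs before relying on it.

```python
import json

SYSTEM = "You are a concise support assistant."  # hypothetical task prompt


def teacher_answer(question: str) -> str:
    """Hypothetical stand-in for a call to a large 'teacher' model."""
    canned = {
        "How do I reset my password?": "Use 'Forgot password' on the login page.",
        "Can I change my username?": "Yes, under Settings > Profile.",
    }
    return canned.get(question, "Sorry, I don't know.")


def build_distillation_set(questions):
    """One chat-format training example per question, labelled by the teacher."""
    rows = []
    for q in questions:
        rows.append({
            "messages": [
                {"role": "system", "content": SYSTEM},
                {"role": "user", "content": q},
                {"role": "assistant", "content": teacher_answer(q)},
            ]
        })
    return rows


def to_jsonl(rows) -> str:
    """Serialise one JSON object per line (the usual fine-tuning upload format)."""
    return "\n".join(json.dumps(r) for r in rows)


rows = build_distillation_set(["How do I reset my password?", "Can I change my username?"])
print(to_jsonl(rows))
```

The JSONL would then be used to fine-tune the smaller model, and the student compared against the teacher on held-out questions; the point of the new API is to remove the glue code between those steps.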

Google keeps delivering as well, building AI into more and more products:

Google brings ads to AI Overviews as it expands AI’s role in search (see here for more details)

Not content with the amazing autogenerated podcasts from NotebookLM … now you can customise them!

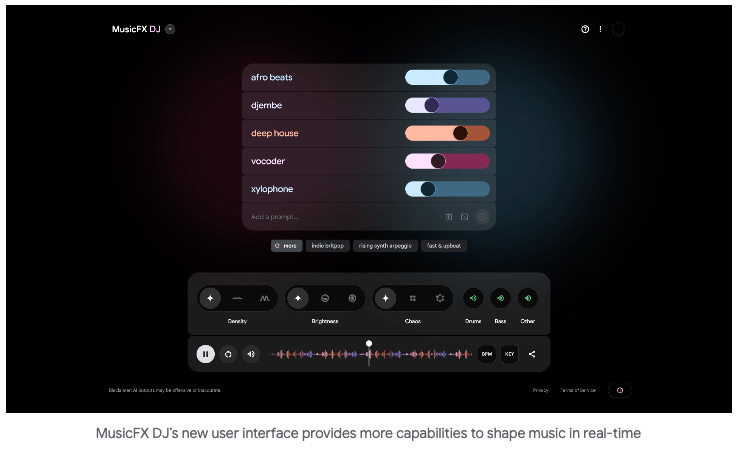

And this looks like fun- How Jacob Collier helped shape the new MusicFX DJ

Microsoft keep rolling out improvements to their Copilot suite of products, now including Copilot Labs

Anthropic continues to impress (I use Claude daily):

Anthropic challenges OpenAI with affordable batch processing

And ‘Computer Use’ is properly groundbreaking (along with the new analysis tool)

Amazon continues to lag, although they have new AI-powered shopping guides and advertiser tools to generate audio ads

Meta continues to fly the open-source flag with excellent new releases and functionality:

Loads of impressive new releases, including Segment Anything 2.1, seamless speech to text with Spirit LM, and lots more- well worth a read

And then there’s this- Meta Movie Gen

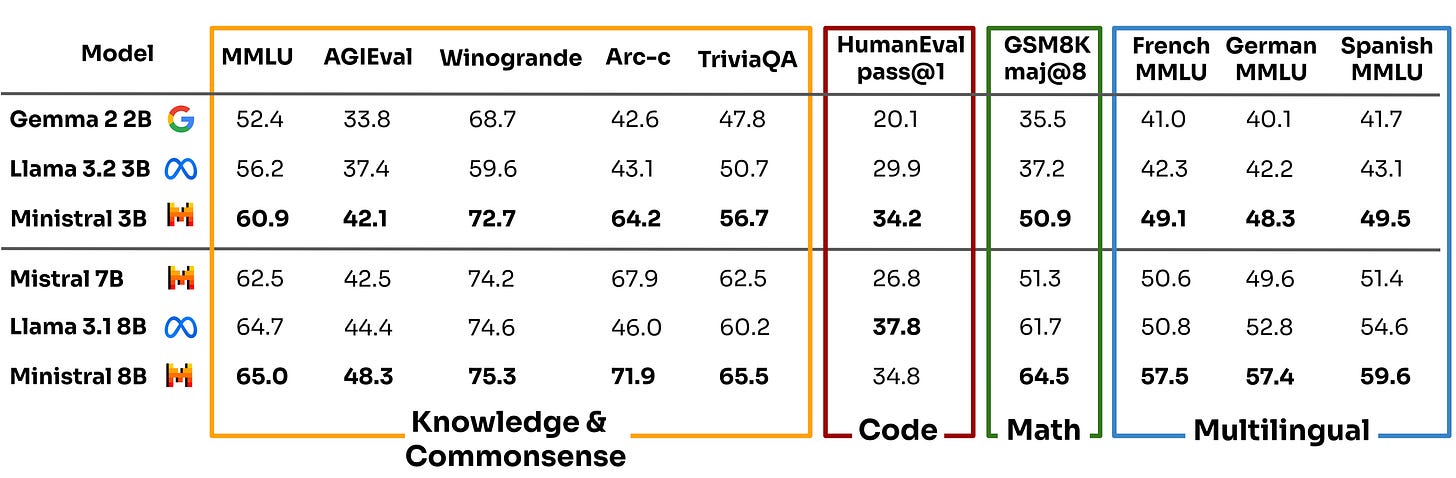

Mistral released new edge (small) models - Un Ministral, des Ministraux

And Stability released Stable Diffusion 3.5

We have the first open multimodal mixture of experts model from RhymesAI

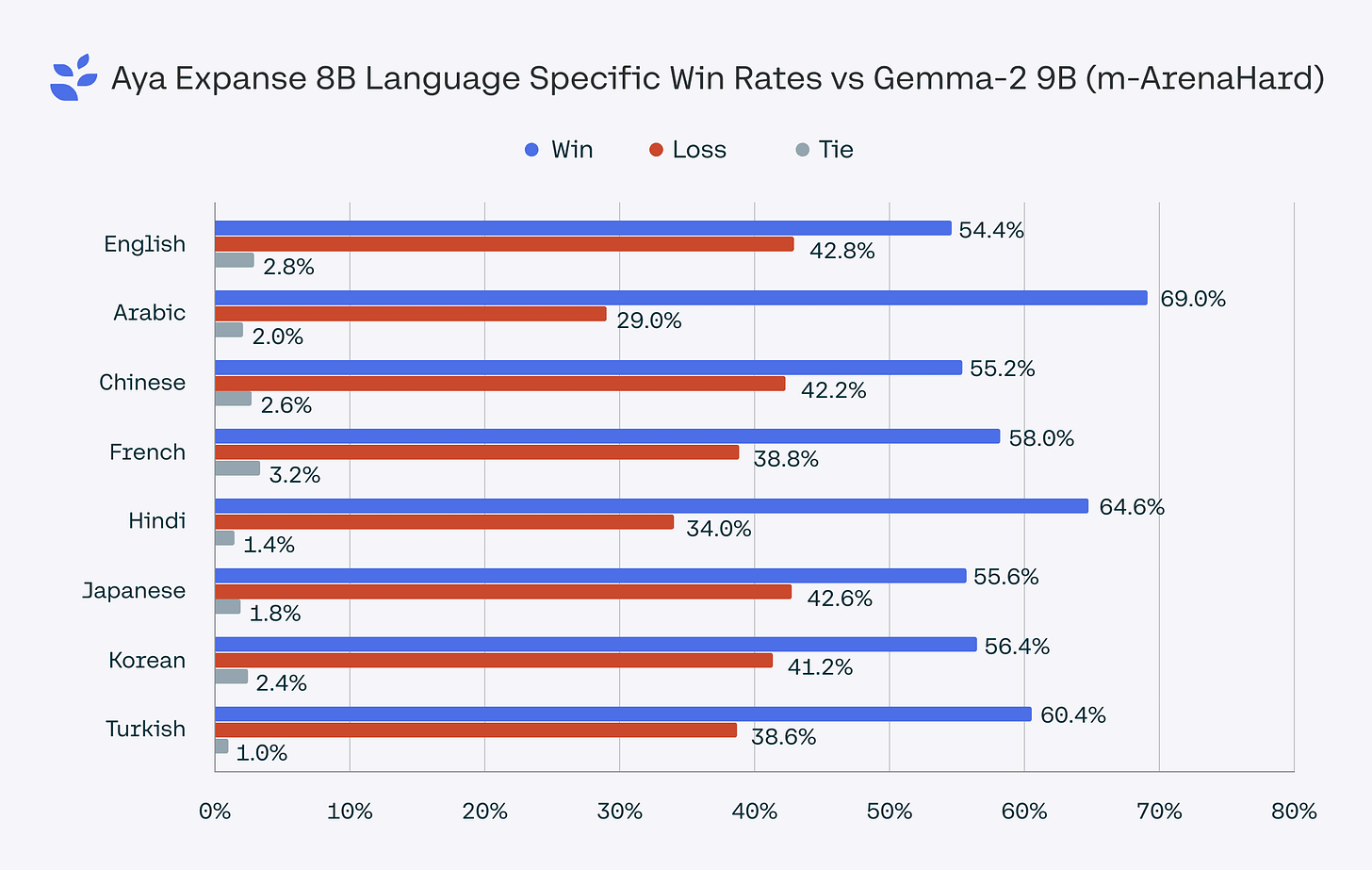

While Cohere released Aya, a state-of-the-art multilingual family of models

Real world applications and how to guides

Lots of applications and tips and tricks this month

Some interesting practical examples of using AI capabilities in the wild

DeepMind at it again - How AlphaChip transformed computer chip design

How Neuromnia is transforming ABA therapy with Llama 3.1

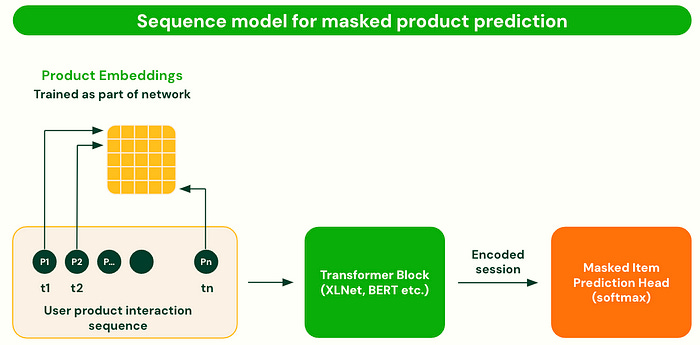

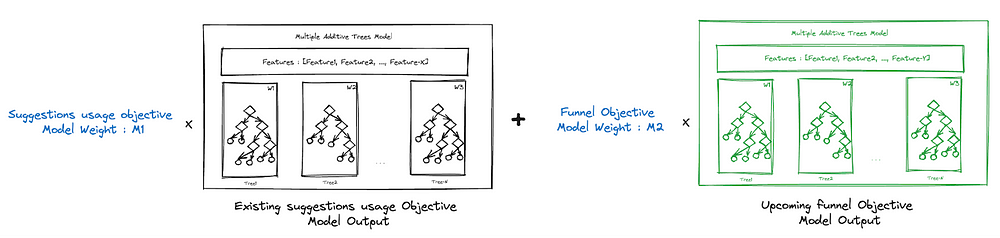

“Many clinics lack robust analytics capabilities,” Gupta notes. “Our platform provides automated, actionable insights to optimize and streamline the entire treatment process, from intake to discharge.”

Multi Objective Optimisation in Suggestions Ranking @ Flipkart

Can AI really compete with human data scientists? OpenAI’s new benchmark puts it to the test

"The results reveal both the progress and limitations of current AI technology. OpenAI’s most advanced model, o1-preview, when paired with specialized scaffolding called AIDE, achieved medal-worthy performance in 16.9% of the competitions. This performance is notable, suggesting that in some cases, the AI system could compete at a level comparable to skilled human data scientists. However, the study also highlights significant gaps between AI and human expertise. The AI models often succeeded in applying standard techniques but struggled with tasks requiring adaptability or creative problem-solving. This limitation underscores the continued importance of human insight in the field of data science."

Lots of great tutorials and how-to’s this month

A fun story to help remember precision and recall from our very own Will Browne: Terrifying Piranhas and Funky Pufferfish

"It’s time to get out the Recall Trawler with Super Sensitive sonar. This boat has a big old net that scrapes the lake and the sonar lets you know exactly where the terrifying piranhas are. This is great as both these bits of tech mean that you’ve caught all the piranhas! The problem is that your net has caught all the pufferfish too, it’s not very Specific."

Lost in Transformation: the Horror and Wonder of Logit

"Perhaps no other subject in applied statistics and machine learning has caused people as much trouble as the humble logit model. Most people who learn either subject start off with some form of linear regression, and having grasped it, are then told that if they have a variable with only two values, none of the rules apply. Horror of horrors: logit is a non-linear model, a doom from which few escape unscathed. Interpreting logit coefficients is a fraught exercise as not only applied researchers but also statisticians have endless arguments about how this should be done."

No learning without randomness

"We call it noise, volatility, nuisance, variance, non-determinism, and uncertainty. We have to work hard to keep randomness under control: we try to keep our code reproducible with random seeds, we have to deal with missing values, and sometimes we struggle because the data is not such a good random sample. But there’s another side to randomness. Without randomness, there would be no machine learning. In this post, we explore the role of randomness in helping machines learn."

Everything you ever wanted to know about Gaussians!

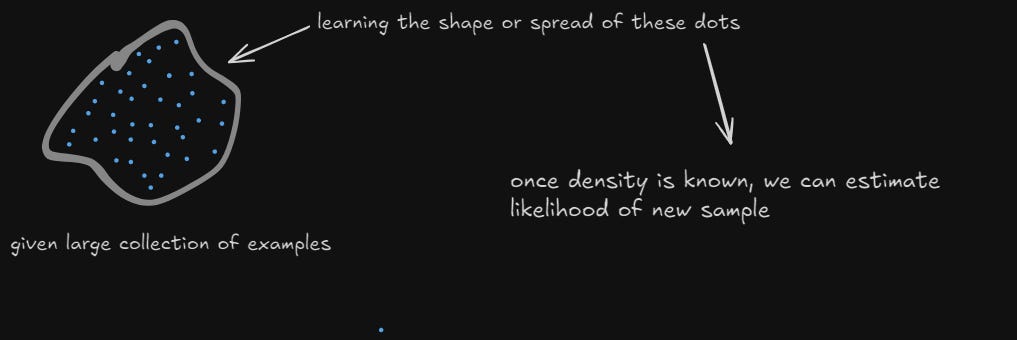

Excellent tutorial - Diffusion Models - bit by bit

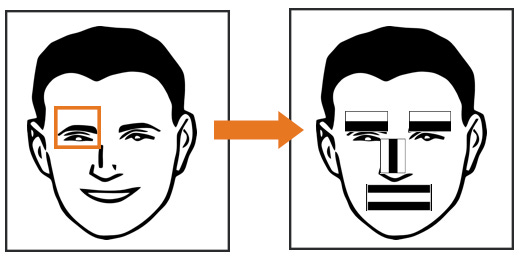

Another good one- Facial Detection — Understanding Viola Jones’ Algorithm

Stein’s Paradox- Why the Sample Mean Isn’t Always the Best

"Averaging is one of the most fundamental tools in statistics, second only to counting. While its simplicity might make it seem intuitive, averaging plays a central role in many mathematical concepts because of its robust properties. Major results in probability, such as the Law of Large Numbers and the Central Limit Theorem, emphasize that averaging isn’t just convenient — it’s often optimal for estimating parameters. Core statistical methods, like Maximum Likelihood Estimators and Minimum Variance Unbiased Estimators (MVUE), reinforce this notion. However, this long-held belief was upended in 1956[1] when Charles Stein made a breakthrough that challenged over 150 years of estimation theory."

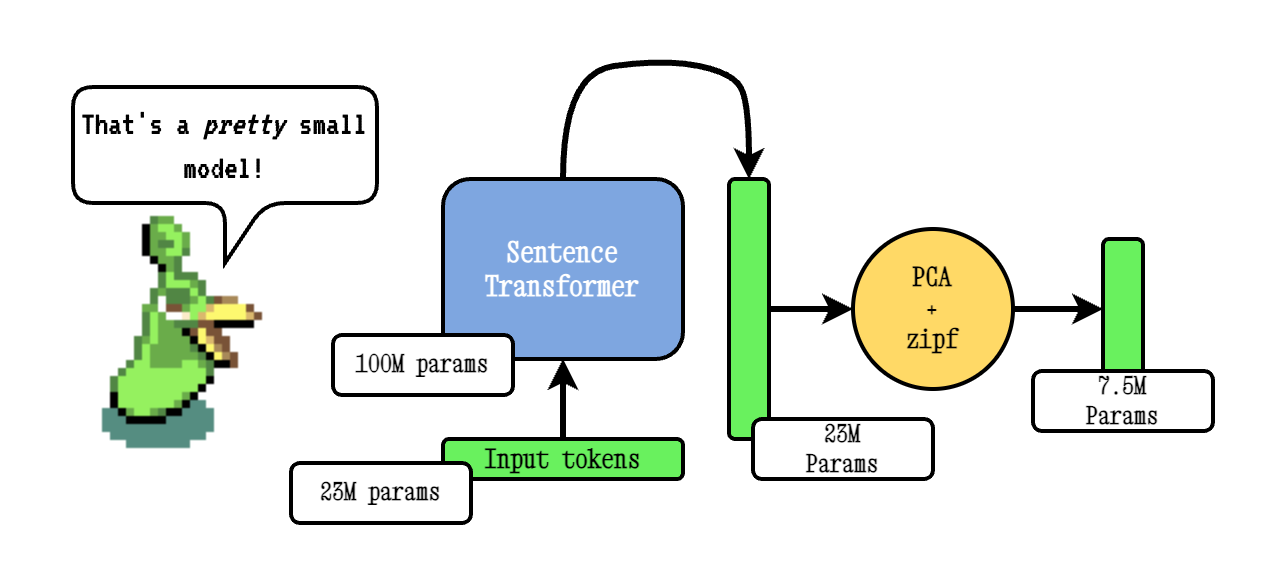
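Roughly, Stein's setting is: estimate a vector of p ≥ 3 means θ from one noisy observation X ~ N(θ, I) per component. The James-Stein estimator, θ̂ = (1 - (p - 2)/||X||²) X, shrinks X towards zero and has lower expected total squared error than X itself, whatever θ is. A quick NumPy simulation (my own illustration, not code from the article; the "true" θ is randomly generated) shows the effect:

```python
import numpy as np


def james_stein(x: np.ndarray) -> np.ndarray:
    """James-Stein estimate of theta from one draw x ~ N(theta, I_p), p >= 3.

    Shrinks each row of x towards zero by the factor 1 - (p - 2) / ||x||^2.
    """
    p = x.shape[-1]
    shrink = 1 - (p - 2) / np.sum(x**2, axis=-1, keepdims=True)
    return shrink * x


rng = np.random.default_rng(42)
p, trials = 10, 20_000
theta = rng.normal(0.0, 1.0, size=p)        # hypothetical true means
x = theta + rng.normal(size=(trials, p))    # one noisy observation per trial

# Average total squared error over the trials (an estimate of each method's risk).
risk_raw = np.mean(np.sum((x - theta) ** 2, axis=1))
risk_js = np.mean(np.sum((james_stein(x) - theta) ** 2, axis=1))
print(f"raw observation: {risk_raw:.2f}   James-Stein: {risk_js:.2f}")
```

The raw observation's risk sits near p, while the shrunken estimate does noticeably better; the gain is largest when θ is close to the point you shrink towards, and the commonly used "positive-part" variant also clips the shrinkage factor at zero.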

Finally, this looks like a useful addition to the toolbox (hat-tip Zac Payne-Thompson!)- Model2Vec: Distill a Small Fast Model from any Sentence Transformer

Practical tips

How to drive analytics, ML and AI into production

This is amazing for anyone interested in training their own LLM… INTELLECT-1: Launching the First Decentralized Training of a 10B Parameter Model

Very useful and practical, and a great story- Splink: transforming data linking through open source collaboration

"'Transformation' and 'ways of working' are easy phrases to throw around, but what does this look like in practice? In this article, I use the concrete example of data linking to show how these new approaches have delivered a step change in government capability. This has been driven through the development of Splink, a Python package that’s probably the world’s best free data linking software."

The Rise of the Declarative Data Stack

"A declarative data stack is a set of tools and, precisely, its configs can be thought of as a single function such as run_stack(serve(transform(ingest))) that can recreate the entire data stack. Instead of having one framework for one piece, we want a combination of multiple tools combined into a single declarative data stack. Like the Modern Data Stack, but integrated the way Kubernetes integrates all infrastructure into a single deployment, like YAML."

Who needs vector stores! Hybrid full-text search and vector search with SQLite

"SQLite has had keyword or "full text" search for over a decade, in the form of the FTS5 extension, which drives search applications on billions of devices every single day. We can combine this battle-tested SQLite keyword search with the new sqlite-vec vector search extension to offer easy-yet-configurable hybrid search, which can run on the command line, on mobile devices, Raspberry Pis, and even web browsers with WASM!"

The 5 Data Quality Rules You Should Never Write Again

"Data breaks, that much is certain. The challenge is knowing when, where, and why it happens. For most data analysts, combating that means writing data rules – lots of data rules – to ensure your data products are accurate and reliable. But, while handwriting hundreds (or even thousands) of manual rules may be the de facto approach to data quality, that doesn’t mean it has to be."
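As a rough illustration of the general idea of not hand-writing every threshold (not the article's own method), here is a Python sketch of a volume check whose acceptable range is learned from the metric's own history. The row counts, the table name and the 3-sigma cut-off are all invented for the example.

```python
import statistics


def learned_bounds(history, n_sigma: float = 3.0):
    """Expected range for a metric, learned from its own history (mean +/- n_sigma * sd)."""
    mu = statistics.mean(history)
    sd = statistics.stdev(history)
    return mu - n_sigma * sd, mu + n_sigma * sd


def check(metric_name: str, history, today: float) -> str:
    """Flag today's value if it falls outside the learned range."""
    lo, hi = learned_bounds(history)
    status = "OK" if lo <= today <= hi else "ANOMALY"
    return f"{metric_name}: {today:,.0f} (expected {lo:,.0f}-{hi:,.0f}) {status}"


# Hypothetical daily row counts for one table over the last two weeks.
row_counts = [10_120, 9_980, 10_250, 10_040, 9_910, 10_300, 10_180,
              10_060, 9_950, 10_220, 10_110, 10_010, 10_270, 10_090]

print(check("orders.row_count", row_counts, today=10_150))
print(check("orders.row_count", row_counts, today=4_200))
```

Real monitoring tools go further (seasonality, trends, many metrics at once), but the design choice is the same: let the history set the bar rather than a hard-coded number.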

Bigger picture ideas

Longer thought provoking reads - sit back and pour a drink! ...

OpenAI o1: 10 Implications For the Future - Alberto Romero

"Generative AI as the leading AI paradigm is over. This is perhaps the most important implication for most users who don’t care about AI beyond what they can use. Generative AI is about creating new data. But reasoning AI is unconcerned with that. It is intended to solve hard problems, not generate slop. Furthermore, OpenAI is hiding the reasoning tokens. The others will as well. You can still use generative AI tools to generate stuff but the best AIs won’t be generation-focused but reasoning-focused. It’s a different thing whose applications we don’t yet understand well."

When you give a Claude a mouse - Ethan Mollick

"But what made this interesting is that the AI had a strategy, and it was willing to revise it based on what it learned. I am not sure how that strategy was developed by the AI, but the plans were forward-looking across dozens of moves and insightful. For example, it assumed new features would appear when 50 paperclips were made. You can see, below, that it realized it was wrong and came up with a new strategy that it tested."

LLMs don’t do formal reasoning - and that is a HUGE problem - Gary Marcus

"There is just no way can you build reliable agents on this foundation, where changing a word or two in irrelevant ways or adding a few bit of irrelevant info can give you a different answer."

AI Will Take Over Human Systems From Within - Interview with Yuval Noah Harari

“People do acknowledge that there are lies and propaganda and misinformation and disinformation, but they say, “OK, the answer to all these problems with information is more information and more freedom of information. If we just flood the world with information, truth and knowledge and wisdom will kind of float to the surface on this ocean of information.” This is a complete mistake because the truth is a very rare and costly kind of information. Most information in the world is not truth. Most information is junk. Most information is fiction and fantasies, delusions, illusions and lies. While truth is costly, fiction is cheap.”

Machines of Loving Grace - Dario Amodei (a really long one from the CEO of Anthropic, but worth a look!)

"What powerful AI (I dislike the term AGI) will look like, and when (or if) it will arrive, is a huge topic in itself. It’s one I’ve discussed publicly and could write a completely separate essay on (I probably will at some point). Obviously, many people are skeptical that powerful AI will be built soon and some are skeptical that it will ever be built at all. I think it could come as early as 2026, though there are also ways it could take much longer. But for the purposes of this essay, I’d like to put these issues aside, assume it will come reasonably soon, and focus on what happens in the 5-10 years after that. I also want to assume a definition of what such a system will look like, what its capabilities are and how it interacts, even though there is room for disagreement on this."

Automating Processes with Software is HARD - Steven Sinofsky

"Most people who have built an automation know, or at least come to know, just how fragile it is. It is fragile because steps are not followed. Tools and connections fail in unexpected ways. Or most critically because the inputs are not nearly as precise or complete as they need to be. And they come to know that addressing any of those is wildly complex. Ask any engineer that has designed their own CBCI, build, or deployment process and they will tell you “don’t touch it” unless I am around."

Fun Practical Projects and Learning Opportunities

A few fun practical projects and topics to keep you occupied/distracted:

Chinese humanoid robot is the 'fastest in the world' thanks to its trusty pair of sneakers

This looks amazing - Video On Command from Pika

Updates from Members and Contributors

Steve Haben at the Energy Systems Catapult draws our attention to an in-person techUK workshop on 14th November to look at AI risks in Energy Networks and develop ideas around best practice and sandbox environments- more details here

Zoe Turner is looking forward to this year’s annual RPySOC conference, hosted by NHS-R Community and NHS.pycom, with virtual tickets still available and the agenda out now on the new Quarto website: Events – NHS-R Community Quarto website. We are also very grateful to the Royal Statistical Society for sponsoring this year; they will be holding a stand at the conference in Birmingham, so if you are one of the lucky few who got a ticket, or get one through our waiting list, please do say hello! For more information on NHS-R Community, we have an online card, NHS-R Community, with all our important links.

Altea Lorenzo-Arribas and Tom King have published some excellent articles in the current Significance issue:

AI Snake Oil: What Artificial Intelligence Can Do, What It Can’t, and How to Tell the Difference

Jobs and Internships!

The job market is a bit quiet - let us know if you have any openings you'd like to advertise

Two Lead AI Engineers in the Incubator for AI at the Cabinet Office- sounds exciting (other adjacent roles here)!

2 exciting new machine learning roles at Carbon Re- an excellent climate change AI startup

Evolution AI is looking to hire someone for applied deep learning research. Must like a challenge. Any background, but needs to know how to do research properly. Remote. Apply here

Data Internships - a curated list

Again, hope you found this useful. Please do send on to your friends- we are looking to build a strong community of data science practitioners- and sign up for future updates here

- Piers

The views expressed are our own and do not necessarily represent those of the RSS