Hi everyone-

Another month flies by, and as always there's lots going on in the world of AI and Data Science… I really encourage you to read on, but here are some edited highlights if you are short of time!

Groundbreaking - Med-Gemini from Google

Amazing demo - Be My Eyes Accessibility with GPT-4o

An inspirational quote from Andrew Ng

"A good way to get started in AI is to start with coursework, which gives a systematic way to gain knowledge, and then to work on projects. For many who hear this advice, “projects” may evoke a significant undertaking that delivers value to users. But I encourage you to set a lower bar and relish small, weekend tinkering projects that let you learn, even if they don’t result in a meaningful deliverable. "

Following is the June edition of our Data Science and AI newsletter. Hopefully some interesting topics and titbits to feed your data science curiosity. NOTE: If the email doesn’t display properly (it is pretty long…) click on the “Open Online” link at the top right. Feedback welcome!

Handy new quick links: committee; ethics; research; generative ai; applications; practical tips; big picture ideas; fun; reader updates; jobs

Committee Activities

We are continuing our work with the Alliance for Data Science professionals on expanding the previously announced individual accreditation (Advanced Data Science Professional certification) into university course accreditation. Remember also that the RSS is accepting applications for the Advanced Data Science Professional certification as well as the new Data Science Professional certification.

Martin Goodson, CEO and Chief Scientist at Evolution AI, continues to run the excellent London Machine Learning meetup and is very active with events. The last event was on March 27th, when Meng Fang, Assistant Professor in AI at the University of Liverpool, presented “Large Language Models Are Neurosymbolic Reasoners”. Videos are posted on the meetup YouTube channel, and future events will be posted here.

This Month in Data Science

Lots of exciting data science and AI going on, as always!

Ethics and more ethics...

Bias, ethics, diversity and regulation continue to be hot topics in data science and AI...

More tales of AI applications misbehaving or being used for questionable purposes:

CEO of world’s biggest ad firm targeted by deepfake scam

"Fraudsters created a WhatsApp account with a publicly available image of Read and used it to set up a Microsoft Teams meeting that appeared to be with him and another senior WPP executive, according to the email obtained by the Guardian. During the meeting, the impostors deployed a voice clone of the executive as well as YouTube footage of them. The scammers impersonated Read off-camera using the meeting’s chat window."

AI usage in the Indian Election is increasingly pervasive

"AI content makers like Polymath are sought after by national and regional politicians in India amid what is being touted as the biggest election in the world. Four AI content agencies told Rest of World they are seeing more demand than they can manage, with political parties in the country projected to spend over $50 million on AI-generated campaign material this year. Even as they look to maximize their earnings, however, the AI content companies are filtering out “unethical” requests for fake content that could propagate misinformation."

How China is using AI news anchors to deliver its propaganda

The proliferation of fakes on Etsy

"But I'd never seen this collection of his work. I was shocked and delighted to discover Morris was somehow also a fine-art illustrator?? No one had ever told me. And a way better illustrator than I would have imagined. These prints echoed his other work, but with far more detail, rich colours, and compositional beauty. Perhaps he did these later in life? Perhaps the other work I'd seen was by a younger, less skilled Morris?"

And Google still hasn’t fixed Gemini’s biased image generator

"The datasets used to train image generators like Gemini’s generally contain more images of white people than people of other races and ethnicities, and the images of non-white people in those datasets reinforce negative stereotypes. Google, in an apparent effort to correct for these biases, implemented clumsy hardcoding under the hood. And now it’s struggling to suss out some reasonable middle path that avoids repeating history."

Attempts to automatically identify fakes and AI generated content in general are still pretty mixed:

Certainly teachers seem overconfident in their ability to identify AI text in student work

"Here we show in two experimental studies that novice (N = 89) and experienced teachers (N = 200) could not identify texts generated by ChatGPT among student-written texts."

Although OpenAI are apparently making progress

"OpenAI said internal testing of an early version of its classifier showed high accuracy for detecting differences between non-AI generated images and content created by DALL-E 3. The tool correctly detected 98% of DALL-E 3 generated images, while less than 0.5% of non-AI generated images were incorrectly identified as AI generated."

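Those two headline numbers hide a classic base-rate trap. A quick back-of-the-envelope sketch using Bayes' rule shows how the share of flagged images that really are DALL-E 3 output depends on how common such images are in the pool being screened. It assumes the quoted "less than 0.5%" false-positive rate is exactly 0.5%, and the prevalence values are purely illustrative:

```python
def precision(tpr: float, fpr: float, prevalence: float) -> float:
    """Share of images flagged as AI-generated that really are AI-generated (Bayes' rule)."""
    true_pos = tpr * prevalence            # AI images correctly flagged
    false_pos = fpr * (1.0 - prevalence)   # real images wrongly flagged
    return true_pos / (true_pos + false_pos)

# Rates quoted by OpenAI; the "<0.5%" false-positive rate is treated as exactly 0.5% (assumption).
TPR, FPR = 0.98, 0.005

# Hypothetical prevalences of DALL-E 3 images among the images being screened.
for prevalence in (0.5, 0.1, 0.01, 0.001):
    print(f"prevalence {prevalence:>6.1%}: precision {precision(TPR, FPR, prevalence):.1%}")
```

On these assumptions, if only 1% of screened images were DALL-E 3 output, roughly a third of the flags would be false alarms, so a detector like this is most informative where AI images are already reasonably common.
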
Then again… OpenAI seems to be stepping back from their stance on safety in AI

What happened to OpenAI’s long-term AI risk team? (with Anthropic the beneficiary) … with last minute news about a new safety board

"Leike posted a thread on X on Friday explaining that his decision came from a disagreement over the company’s priorities and how much resources his team was being allocated. “I have been disagreeing with OpenAI leadership about the company's core priorities for quite some time, until we finally reached a breaking point,” Leike wrote. “Over the past few months my team has been sailing against the wind. Sometimes we were struggling for compute and it was getting harder and harder to get this crucial research done.”"

This is an excellent read and promising from an explainability perspective- research from Anthropic: Mapping the Mind of a Large Language Model

"Whereas the features we found in the toy language model were rather superficial, the features we found in Sonnet have a depth, breadth, and abstraction reflecting Sonnet's advanced capabilities. We see features corresponding to a vast range of entities like cities (San Francisco), people (Rosalind Franklin), atomic elements (Lithium), scientific fields (immunology), and programming syntax (function calls). These features are multimodal and multilingual, responding to images of a given entity as well as its name or description in many languages."

Lots of regulatory developments going on, as always!

In the US, the Bipartisan Senate AI Working Group has been formed

“No technology offers more promise to our modern world than artificial intelligence. But AI also presents a host of new policy challenges. Harnessing the potential of AI demands an all-hands-on-deck approach and that’s exactly what our bipartisan AI working group has been leading,” said Leader Schumer. “After talking to advocates, critics, academics, labor groups, civil rights leaders, stakeholders, developers, and more, our working group was able to identify key areas of policy that have bipartisan consensus. Now, the work continues with our Committees, Chairmen, and Ranking Members to develop and advance legislation with urgency and humility.”

While there is all sorts of regulation being attempted at the state level

"State lawmakers are on their way to creating the equivalent of 50 different computational control commissions across the nation—a move that could severely undermine artificial intelligence (AI) innovation, investment, and competition in the United States."

There are more best practice guides and governance frameworks emerging from academia and industry

Stanford University’s Human-Centered AI group updated its Foundation Model Transparency Index

While DeepMind introduced their Frontier Safety Framework

"Today, we are introducing our Frontier Safety Framework - a set of protocols for proactively identifying future AI capabilities that could cause severe harm and putting in place mechanisms to detect and mitigate them. Our Framework focuses on severe risks resulting from powerful capabilities at the model level, such as exceptional agency or sophisticated cyber capabilities. It is designed to complement our alignment research, which trains models to act in accordance with human values and societal goals, and Google’s existing suite of AI responsibility and safety practices."

The New York Times published its Principles for Using Generative AI in the Newsroom

And Microsoft banned US police departments from using its enterprise AI tool for facial recognition

"Language added Wednesday to the terms of service for Azure OpenAI Service more clearly prohibits integrations with Azure OpenAI Service from being used “by or for” police departments for facial recognition in the U.S., including integrations with OpenAI’s current — and possibly future — image-analyzing models."

The struggle for dominance between the big commercial players, national governments and the open source community continues:

"Everybody in tech seems to want to make friends with Saudi Arabia right now as the kingdom has trained its sights on becoming a dominant player in A.I. — and is pumping in eye-popping sums to do so."

While Hugging Face is attempting to help out the little guys (although sadly the $10m pales in comparison to the $100 billion…)

"Hugging Face, one of the biggest names in machine learning, is committing $10 million in free shared GPUs to help developers create new AI technologies. The goal is to help small developers, academics, and startups counter the centralization of AI advancements."

And the National Science Foundation in the US is launching the National Deep Inference Fabric (NDIF)

"NDIF addresses this critical need by creating a unique nationwide research computing fabric that enables scientists to perform transparent and reproducible experiments on the largest-scale open AI systems."

OpenAI seems to be on a mission to formally partner up with publishers:

OpenAI partners with Reddit to integrate unique user-generated content into ChatGPT

"As part of the partnership, OpenAI will gain access to Reddit’s Data API, granting the AI company real-time, structured, and exclusive access to the vast array of content generated by Reddit’s vibrant communities"

A landmark multi-year global partnership with News Corp

"News Corp and OpenAI today announced a historic, multi-year agreement to bring News Corp news content to OpenAI. Through this partnership, OpenAI has permission to display content from News Corp mastheads in response to user questions and to enhance its products, with the ultimate objective of providing people the ability to make informed choices based on reliable information and news sources. "

This is perhaps not surprising given the mounting number of legal challenges facing OpenAI and its competitors

Sony Music slams tech giants for unauthorised use of stars' songs

"Sony Music, which represents artists like Beyonce and Adele, is forbidding anyone from training, developing or making money from AI using its songs without permission."

Google fined €250m in France for breaching intellectual property deal

Major U.S. newspapers sue OpenAI, Microsoft for copyright infringement

"The lawsuit is being filed on behalf of some of the most prominent regional daily newspapers in the Alden portfolio: the New York Daily News, Chicago Tribune, Orlando Sentinel, South Florida Sun Sentinel, San Jose Mercury News, Denver Post, Orange County Register and St. Paul Pioneer Press."

Not to mention the controversy around OpenAI’s demo at their latest release (more on the release below) - more here

"If a guy told you his favorite sci-fi movie is Her, then released an AI chatbot with a voice that sounds uncannily like the voice from Her, then tweeted the single word “her” moments after the release… what would you conclude?"

Finally, an interesting quandary in Hollywood- “Everyone Is Using AI, But They Are Scared to Admit It”

"For horror fans, Late Night With the Devil marked one of the year’s most anticipated releases. Embracing an analog film filter, the found-footage flick starring David Dastmalchian reaped praise for its top-notch production design by leaning into a ’70s-era grindhouse aesthetic reminiscent of Dawn of the Dead or Death Race 2000. Following a late-night talk show host airing a Halloween special in 1977, it had all the makings of a cult hit. But the movie may be remembered more for the controversy surrounding its use of cutaway graphics created by generative artificial intelligence tools. One image of a dancing skeleton in particular incensed some theatergoers. Leading up to its theatrical debut in March, it faced the prospect of a boycott, though that never materialized."

Developments in Data Science and AI Research...

As always, lots of new developments on the research front and plenty of arXiv papers to read...

Plenty of research is going into understanding how to improve and enhance LLMs

The pros and cons of a well-known fine-tuning method: LoRA Learns Less and Forgets Less

"We show that LoRA provides stronger regularization compared to common techniques such as weight decay and dropout; it also helps maintain more diverse generations."

An interesting question: given the new larger context windows, what is the best use of the additional context? Many-Shot In-Context Learning

"Large language models (LLMs) excel at few-shot in-context learning (ICL) -- learning from a few examples provided in context at inference, without any weight updates. Newly expanded context windows allow us to investigate ICL with hundreds or thousands of examples -- the many-shot regime. Going from few-shot to many-shot, we observe significant performance gains across a wide variety of generative and discriminative tasks."

Cool idea, encouraging LLMs to reason through visualisation: Visualization-of-Thought Elicits Spatial Reasoning in Large Language Models

"VoT aims to elicit spatial reasoning of LLMs by visualizing their reasoning traces, thereby guiding subsequent reasoning steps. We employed VoT for multi-hop spatial reasoning tasks, including natural language navigation, visual navigation, and visual tiling in 2D grid worlds. Experimental results demonstrated that VoT significantly enhances the spatial reasoning abilities of LLMs."

And interesting new research on approaches to reducing hallucinations (hat tip Ben): Effective large language model adaptation for improved grounding

"Our new framework, AGREE, takes a holistic approach to adapt LLMs for better grounding and citation generation, combining both learning-based adaptation and test-time adaptation (TTA). Different from prior prompting-based approaches, AGREE fine-tunes LLMs, enabling them to self-ground the claims in their responses and provide accurate citations"

Lots of great applications of foundational models:

Smart Expert System: Large Language Models as Text Classifiers

"The system simplifies the traditional text classification workflow, eliminating the need for extensive preprocessing and domain expertise. The performance of several LLMs, machine learning (ML) algorithms, and neural network (NN) based structures is evaluated on four datasets. Results demonstrate that certain LLMs surpass traditional methods in sentiment analysis, spam SMS detection and multi-label classification. Furthermore, it is shown that the system's performance can be further enhanced through few-shot or fine-tuning strategies, making the fine-tuned model the top performer across all datasets"

Applying deep generative models to chemical space and molecular design! Navigating Chemical Space with Latent Flows

"In this paper, we propose a new framework, ChemFlow, to traverse chemical space through navigating the latent space learned by molecule generative models through flows. We introduce a dynamical system perspective that formulates the problem as learning a vector field that transports the mass of the molecular distribution to the region with desired molecular properties or structure diversity."

Awesome: weather prediction! - Aurora: A Foundation Model of the Atmosphere

"Here we introduce Aurora, a large-scale foundation model of the atmosphere trained on over a million hours of diverse weather and climate data. Aurora leverages the strengths of the foundation modelling approach to produce operational forecasts for a wide variety of atmospheric prediction problems, including those with limited training data, heterogeneous variables, and extreme events. In under a minute, Aurora produces 5-day global air pollution predictions and 10-day high-resolution weather forecasts that outperform state-of-the-art classical simulation tools and the best specialized deep learning models."

Simulating a hospital with LLMs: amazing that knowledge acquired in the simulation seems to help with real-world tasks… Agent Hospital: A Simulacrum of Hospital with Evolvable Medical Agents

"To do so, we propose a method called MedAgent-Zero. As the simulacrum can simulate disease onset and progression based on knowledge bases and LLMs, doctor agents can keep accumulating experience from both successful and unsuccessful cases. Simulation experiments show that the treatment performance of doctor agents consistently improves on various tasks. More interestingly, the knowledge the doctor agents have acquired in Agent Hospital is applicable to real-world medicare benchmarks"

Finally, this feels pretty groundbreaking - Med-Gemini from Google

"Med-Gemini's performance suggests real-world utility by surpassing human experts on tasks such as medical text summarization, alongside demonstrations of promising potential for multimodal medical dialogue, medical research and education."

Some interesting work in safety and governance

Can you stop LLMs being fine-tuned for unethical or illegal tasks? SOPHON: Non-Fine-Tunable Learning to Restrain Task Transferability For Pre-trained Models

"To fulfill this goal, we propose SOPHON, a protection framework that reinforces a given pre-trained model to be resistant to being fine-tuned in pre-defined restricted domains. Nonetheless, this is challenging due to a diversity of complicated fine-tuning strategies that may be adopted by adversaries. Inspired by model-agnostic meta-learning, we overcome this difficulty by designing sophisticated fine-tuning simulation and fine-tuning evaluation algorithms."

Seems too good to be true, but you never know… Towards Guaranteed Safe AI: A Framework for Ensuring Robust and Reliable AI Systems

"The core feature of these approaches is that they aim to produce AI systems which are equipped with high-assurance quantitative safety guarantees. This is achieved by the interplay of three core components: a world model (which provides a mathematical description of how the AI system affects the outside world), a safety specification (which is a mathematical description of what effects are acceptable), and a verifier (which provides an auditable proof certificate that the AI satisfies the safety specification relative to the world model)."

An elegant approach to adjusting models for bias through post-processing: Optimal Group Fair Classifiers from Linear Post-Processing

"It achieves fairness by re-calibrating the output score of the given base model with a "fairness cost" -- a linear combination of the (predicted) group memberships. Our algorithm is based on a representation result showing that the optimal fair classifier can be expressed as a linear post-processing of the loss function and the group predictor, derived via using these as sufficient statistics to reformulate the fair classification problem as a linear program"

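To make the idea concrete, here is a toy sketch of linear post-processing on synthetic data. Everything in it is hypothetical (the data, the size of the bias, and the `predict` and `parity_gap` helpers): a deliberately biased base score is adjusted by subtracting a fairness cost that is linear in the predicted group membership, and the result is thresholded. The paper derives its optimal cost weights from a linear program; for simplicity this sketch just grid-searches a single weight to close the demographic-parity gap.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical synthetic data: a sensitive attribute `a` and a biased base model
# whose scores run systematically higher for group 1.
n = 10_000
a = rng.integers(0, 2, size=n)                                     # true group (0 or 1)
score = np.clip(rng.normal(0.45 + 0.15 * a, 0.15), 0.0, 1.0)       # base-model score in [0, 1]
group_prob = np.clip(a + rng.normal(0.0, 0.2, size=n), 0.0, 1.0)   # noisy predictor of P(a=1 | x)

def predict(lam: float) -> np.ndarray:
    """Threshold the base score at 0.5 after subtracting a fairness cost linear in predicted group membership."""
    # Positive for likely group-1 members, negative for likely group-0 members.
    fairness_cost = lam * (group_prob - (1.0 - group_prob))
    return (score - 0.5 - fairness_cost > 0).astype(int)

def parity_gap(y_hat: np.ndarray) -> float:
    """Demographic-parity gap: absolute difference in positive-prediction rates between the groups."""
    return float(abs(y_hat[a == 1].mean() - y_hat[a == 0].mean()))

# Grid-search the single cost weight (the paper obtains its weights from a linear program instead).
grid = np.linspace(0.0, 0.3, 61)
gaps = [parity_gap(predict(lam)) for lam in grid]
best_lam = float(grid[int(np.argmin(gaps))])

print(f"parity gap before: {parity_gap(predict(0.0)):.3f}")
print(f"parity gap after (lam={best_lam:.3f}): {min(gaps):.3f}")
```

Part of the appeal of this family of methods is that the base model is never retrained: only its output is shifted, so the adjustment can sit on top of an existing scoring pipeline.
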
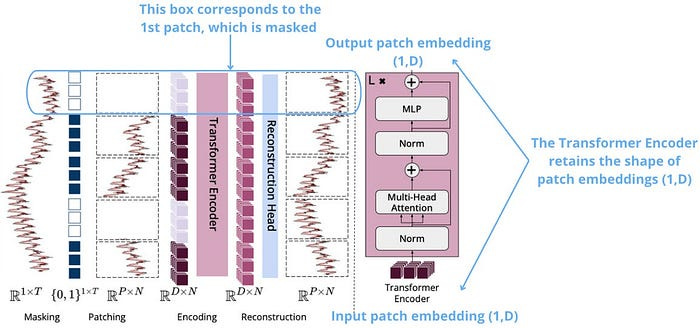

Lots of fun new time series approaches to play around with!

First up, good survey papers on approaches for spatio-temporal data, and Time Series Foundation Models

TimesFM from Google- A decoder-only foundation model for time-series forecasting

"TimesFM is a forecasting model, pre-trained on a large time-series corpus of 100 billion real world time-points, that displays impressive zero-shot performance on a variety of public benchmarks from different domains and granularities."

Taking the new Mamba architecture for a time series spin... Integrating Mamba and Transformer for Long-Short Range Time Series Forecasting

"Recent progress on state space models (SSMs) have shown impressive performance on modeling long range dependency due to their subquadratic complexity. Mamba, as a representative SSM, enjoys linear time complexity and has achieved strong scalability on tasks that requires scaling to long sequences, such as language, audio, and genomics. In this paper, we propose to leverage a hybrid framework Mambaformer that internally combines Mamba for long-range dependency, and Transformer for short range dependency, for long-short range forecasting."

A ‘tiny’ pre-trained model open-sourced by IBM - TinyTimeMixers (TTMs)

"TTMs are lightweight forecasters, pre-trained on publicly available time series data with various augmentations. TTM provides state-of-the-art zero-shot forecasts and can easily be fine-tuned for multi-variate forecasts with just 5% of the training data to be competitive"

Finally- how cool is this? Learning Robot Soccer from Egocentric Vision with Deep Reinforcement Learning

Generative AI ... oh my!

Still moving at such a fast pace that it feels in need of its own section, for developments in the wild world of large language models...

If last month was all about open source, this month is very much ‘Empire Strikes Back’!

First up, we had major new releases from OpenAI on May 13th:

A new flagship model, GPT-4o, as well as improvements to data analysis, a detailed model spec, free access to custom GPTs and some pretty amazing demos (Be My Eyes Accessibility with GPT-4o, voice assistant - all well worth watching and pretty astonishing... although see the previously highlighted controversy)

"GPT-4o (“o” for “omni”) is a step towards much more natural human-computer interaction—it accepts as input any combination of text, audio, image, and video and generates any combination of text, audio, and image outputs. It can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation. It matches GPT-4 Turbo performance on text in English and code, with significant improvement on text in non-English languages, while also being much faster and 50% cheaper in the API. GPT-4o is especially better at vision and audio understanding compared to existing models."

Lots of ‘first takes’, all generally pretty impressed

And then, 2 days later, we had Google unleash an avalanche of new things at Google I/O 2024

Here’s everything Google just announced, AI at Google I/O 2024

Update of the Gemini models, including Gemini Flash, a lightweight model optimized for speed and efficiency (along with a 150-page model card)

Update of Gemma (open source model), as well as PaliGemma (open vision language model)

And some impressive demos of Project Astra “the future of AI assistants” and Project IDX (next gen IDE)

Even a change to Google Search

"But there was one particular announcement at I/O that’s sending shockwaves around the web: Google is rolling out what it calls AI Overviews in Search to everyone in the United States by this week and around the world to more than a billion users by the end of the year. That means when you search for something on Google, you’ll get AI-powered results at the top of the page for a number of queries. The company literally describes this as “letting Google do the Googling for you.”"

Although there have been some mixed results, highlighting how hard it is to go live with generative AI products

"In one baffling example, a reporter Googling whether they could use gasoline to cook spaghetti faster was told, "no... but you can use gasoline to make a spicy spaghetti dish" and given a recipe."

Meanwhile Anthropic is expanding in Europe

Microsoft is rumoured to be building a 500-billion-parameter model, MAI-1, and is rolling out new Copilot AI features

Apple has announced ‘Eye Tracking, Music Haptics, and Vocal Shortcuts’, and is supposedly finalising a deal with OpenAI

And Amazon still appears to be struggling- although they released Amazon Q, an AI assistant, and Bedrock Studio for managing AI application development

Still lots of open source news though

Meta released Chameleon: Mixed-Modal Early-Fusion Foundation Models which looks like an impressive architectural innovation

Elon Musk’s x.ai released the weights and architecture of Grok-1

New entrants in China from Alibaba

Stability AI released Stable Artisan: Media Generation and Editing on Discord

We have DeepSeek-V2: A Strong, Economical, and Efficient Mixture-of-Experts Language Model

New kid on the block this month is Refuel LLM-2

Real world applications and how to guides

Lots of applications and tips and tricks this month

Some interesting practical examples of using AI capabilities in the wild

So great to see AI being put to good use: identifying landmines in Ukraine

"Across the 25-hectare field—that’s about the size of 62 American football fields—the U.N. workers had scattered 50 to 100 inert mines and other ordnance. Our task was to fly our drone over the area and use our machine learning software to detect as many as possible. And we had to turn in our results within 72 hours."

Impressive - Realtime Video Stream Analysis with Computer Vision

AI transforming the recruiting industry? Braintrust.

And if you are looking for ideas… 101 real-world gen AI use cases from the world's leading organizations

This next gen AI powered IDE looks impressive - cursor.sh

Large language models (e.g., ChatGPT) as research assistants - I’m sure all academics would love help automating grant applications!

And here’s an open source version anyone can use: GPT Researcher

"GPT Researcher is an autonomous agent designed for comprehensive online research on a variety of tasks. The agent can produce detailed, factual and unbiased research reports, with customization options for focusing on relevant resources, outlines, and lessons."

Excellent medical use case: AI-enhanced integration of genetic and medical imaging data for risk assessment of Type 2 diabetes

"Drawing upon comprehensive genetic and medical imaging datasets from 68,911 individuals in the Taiwan Biobank, our models integrate Polygenic Risk Scores (PRS), Multi-image Risk Scores (MRS), and demographic variables, such as age, sex, and T2D family history. Here, we show that our model achieves an Area Under the Receiver Operating Curve (AUC) of 0.94, effectively identifying high-risk T2D subgroups"

Amazing stuff from Google

Very impressive- Startups Say India Is Ideal for Testing Self-Driving Cars

"Former Uber CEO Travis Kalanick famously said, after experiencing New Delhi’s chaotic roads, that India will be the last place in the world to get self-driving cars. But a handful of startups think the country could be the perfect testbed for creating autonomous vehicles that can handle anything."

Lots of great tutorials and how-to’s this month

Some good posts on time series

Interpretable time-series modelling using Gaussian processes - great tutorial with code
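For a flavour of why GPs lend themselves to interpretable time-series models, here is a minimal NumPy sketch. It is not the tutorial's code; the kernels, hyperparameters and synthetic series are all assumptions. With an additive kernel (a smooth trend kernel plus a periodic kernel), the posterior mean splits into a trend part and a seasonal part that can be inspected separately.

```python
import numpy as np

def rbf(x1, x2, length=2.0, var=1.0):
    """Squared-exponential kernel: captures a smooth, slowly varying trend."""
    d = x1[:, None] - x2[None, :]
    return var * np.exp(-0.5 * (d / length) ** 2)

def periodic(x1, x2, period=1.0, length=0.5, var=1.0):
    """Exp-sine-squared kernel: captures a pattern that repeats every `period`."""
    d = np.abs(x1[:, None] - x2[None, :])
    return var * np.exp(-2.0 * np.sin(np.pi * d / period) ** 2 / length**2)

def gp_components(x_train, y_train, x_test, noise=0.1):
    """Posterior mean of a zero-mean GP with kernel rbf + periodic, split into its two parts."""
    K = rbf(x_train, x_train) + periodic(x_train, x_train) + noise**2 * np.eye(len(x_train))
    alpha = np.linalg.solve(K, y_train)            # (K + noise^2 I)^-1 y
    trend = rbf(x_test, x_train) @ alpha           # contribution of the trend kernel
    seasonal = periodic(x_test, x_train) @ alpha   # contribution of the seasonal kernel
    return trend, seasonal

# Hypothetical series: linear trend plus a unit-period seasonal cycle plus noise.
rng = np.random.default_rng(1)
x = np.linspace(0, 6, 60)
y = 0.3 * x + np.sin(2 * np.pi * x) + rng.normal(0, 0.1, size=x.size)

x_new = np.linspace(6.0, 7.0, 5)                   # forecast one period ahead
trend, seasonal = gp_components(x, y, x_new)
print("trend:   ", np.round(trend, 2))
print("seasonal:", np.round(seasonal, 2))
print("forecast:", np.round(trend + seasonal, 2))
```

In practice you would fit the kernel hyperparameters by maximising the marginal likelihood rather than fixing them by hand as done here.
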

Good tutorial on vanishing gradients
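The core of the problem fits in a few lines. This hypothetical NumPy sketch pushes a gradient backwards through a deep random network: because the sigmoid's derivative never exceeds 0.25, the gradient shrinks at every layer, whereas ReLU with He-style initialisation keeps it roughly the same size. Depth, width and the initialisation scales are illustrative choices.

```python
import numpy as np

def input_grad_norm(activation: str, depth: int = 30, width: int = 64, seed: int = 0) -> float:
    """Backpropagate a vector of ones from the output of a deep random MLP; return its norm at the input."""
    rng = np.random.default_rng(seed)
    if activation == "sigmoid":
        scale = np.sqrt(1.0 / width)   # Xavier/Glorot-style scale for square layers
    elif activation == "relu":
        scale = np.sqrt(2.0 / width)   # He-style scale
    else:
        raise ValueError(f"unknown activation: {activation}")
    weights = [rng.normal(0.0, scale, size=(width, width)) for _ in range(depth)]

    # Forward pass, storing each layer's local derivative f'(z).
    h = rng.normal(size=width)
    derivs = []
    for W in weights:
        z = W @ h
        if activation == "sigmoid":
            h = 1.0 / (1.0 + np.exp(-z))
            derivs.append(h * (1.0 - h))          # sigmoid'(z) <= 0.25
        else:
            h = np.maximum(z, 0.0)
            derivs.append((z > 0).astype(float))  # ReLU'(z) is 0 or 1

    # Backward pass (chain rule): grad wrt layer input = W^T (grad wrt output * f'(z)).
    g = np.ones(width)
    for W, d in zip(reversed(weights), reversed(derivs)):
        g = W.T @ (g * d)
    return float(np.linalg.norm(g))

for act in ("sigmoid", "relu"):
    print(f"{act:>7}: gradient norm at input = {input_grad_norm(act):.3e}")
```

With 30 sigmoid layers the gradient reaching the first layer is many orders of magnitude smaller than with ReLU, which is why early layers of deep sigmoid networks barely learn.
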

Useful tutorial for anyone working on geospatial data- Spatial ML: Predicting on out-of-sample data

Ever wondered how Singular Value Decomposition works? Wonder no more!
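A minimal NumPy sketch (with a random matrix purely for illustration) of the two facts that make SVD so useful: any matrix factorises as U·diag(S)·Vᵀ, and truncating to the k largest singular values gives the best rank-k approximation, with a Frobenius-norm error equal to the norm of the discarded singular values (the Eckart-Young theorem).

```python
import numpy as np

rng = np.random.default_rng(42)
A = rng.normal(size=(6, 4))   # illustrative random matrix

# Thin SVD: A = U @ diag(S) @ Vt, with singular values S sorted in descending order.
U, S, Vt = np.linalg.svd(A, full_matrices=False)

def low_rank(k: int) -> np.ndarray:
    """Best rank-k approximation (Eckart-Young): keep only the k largest singular values."""
    return U[:, :k] @ np.diag(S[:k]) @ Vt[:k, :]

for k in range(1, 5):
    err = np.linalg.norm(A - low_rank(k))     # Frobenius norm (default for 2-D arrays)
    predicted = np.sqrt(np.sum(S[k:] ** 2))   # norm of the discarded singular values
    print(f"rank {k}: error {err:.4f} (predicted {predicted:.4f})")
```

The same truncation idea underpins PCA, latent semantic analysis and low-rank compression of model weights.
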

And the hottest new technique on the block- Understanding Kolmogorov–Arnold Networks (KAN)

"Inspired by the Kolmogorov-Arnold representation theorem, KANs diverge from traditional Multi-Layer Perceptrons (MLPs) by replacing fixed activation functions with learnable functions, effectively eliminating the need for linear weight matrices."

Excellent insight into when fine-tuning LLMs is still useful

"It’s impossible to fine-tune effectively without an eval system which can lead to writing off fine-tuning if you haven’t completed this prerequisite. It’s also impossible to improve your product without a good eval system in the long term, fine-tuning or not."

If you are a LangChain user, DSPy might be an interesting alternative

Finally, end-to-end voice transcription and diarization

"We'll solve this challenge using a custom inference handler, which will implement the Automatic Speech Recogniton (ASR) and Diarization pipeline on Inference Endpoints, as well as supporting speculative decoding. The implementation of the diarization pipeline is inspired by the famous Insanely Fast Whisper, and it uses a Pyannote model for diarization."

Practical tips

How to drive analytics and ML into production

I’ve been burned by this before- Common Causes of Data Leakage and how to Spot Them

"One of a Data Scientist's worst nightmares is data leakage. And why is data leakage so harmful? Because the ways your models may suffer from it are designed to convince you that your model is excellent and that you should be expecting awesome results in the future."

Useful insight from Stripe on their ML feature engineering process

Get pandas running on GPUs with RAPIDS - hands-on Colab tutorial

This looks very useful for anyone attempting to get LLM applications into production: Langtrace, Open Source Observability for LLM applications

The ever changing MLOps landscape- 7 Best Machine Learning Workflow and Pipeline Orchestration Tools

And a relatively simple and practical alternative: MLflow + GitHub Actions

Bigger picture ideas

Longer thought provoking reads - sit back and pour a drink! ...

The teens making friends with AI chatbots- Jessica Lucas

“Aaron is one of many young users who have discovered the double-edged sword of AI companions. Many users like Aaron describe finding the chatbots helpful, entertaining, and even supportive. But they also describe feeling addicted to chatbots, a complication which researchers and experts have been sounding the alarm on.”

A couple of great pieces from Ethan Mollick

"Likely the biggest impact of GPT-4o is not technical, but a business decision: soon everyone, whether they are paying or not, will get access to GPT-4o. I think this is a big deal. When I talk with groups and ask people to raise their hands if they use ChatGPT, almost every hand goes up. When I ask if they used GPT-4, only 5% of hands remain up, at most. GPT-4 is so, so much better than free ChatGPT-3.5, it is like having a PhD student work with you instead of a high school sophomore. But that $20 a month barrier kept many people from understanding how impressive AI can be, and for gaining any benefit from AI. That is no longer true."

"No matter what happens next, today, as anyone who uses AI knows, we do not have an AI that does every task better than a human, or even most tasks. But that doesn’t mean that AI hasn’t achieved superhuman levels of performance in some surprisingly complex jobs, at least if we define superhuman as better than most humans, or even most experts. What makes these areas of superhuman performance interesting is that they are often for very “human” tasks that seem to require empathy and judgement."

And two from the excellent Benedict Evans

"Generative AI means things that were always possible at a small scale now become practical to automate at a massive scale. Sometimes a change in scale is a change in principle."

"They don’t know, either way, because we don’t have a coherent theoretical model of what general intelligence really is, nor why people seem to be better at it than dogs, nor how exactly people or dogs are different to crows or indeed octopuses. Equally, we don’t know why LLMs seem to work so well, and we don’t know how much they can improve."

AI Copilots Are Changing How Coding Is Taught- Rina Diane Caballar

"Another vital expertise is problem decomposition. “This is a skill to know early on because you need to break a large problem into smaller pieces that an LLM can solve,” says Leo Porter, an associate teaching professor of computer science at the University of California, San Diego. “It’s hard to find where in the curriculum that’s taught—maybe in an algorithms or software engineering class, but those are advanced classes. Now, it becomes a priority in introductory classes.”"

Ninety-five theses on AI- Samuel Hammond

"... 6. The main regulatory barriers to the commercial adoption of AI are within legacy laws and regulations, mostly not prospective AI-specific laws. 7. The shorter the timeline to AGI, the sooner policymaker and organizations should switch focus to “bracing for impact.” 8. The most robust forms of AI governance will involve the infrastructure and hardware layers. ..."

Fun Practical Projects and Learning Opportunities

A few fun practical projects and topics to keep you occupied/distracted:

Excellent visualisation and tutorial on the data sets that drive foundation models (hat tip Luke)

Feel like a challenge?- how about learning how to use AlphaFold?

AI film making with Donald Glover

Infinite Wonderland - Alice in Wonderland re-imagined through different AI artists

Updates from Members and Contributors

NHS Data Scientist Luke Shaw published a great post on a forecasting approach used in NHS Bristol

Jona Shehu, Strategy and Research Consultant at Helix Data, draws our attention to an upcoming industry symposium on the future of AI assistants, hosted by SAIS (Secure AI assistantS) Project, at King's College London on June 28, 2024. The symposium brings together experts from academia, industry, and regulatory agencies to discuss the issues surrounding the integration of AI assistants into everyday life, with a focus on security, ethics, and privacy.

Register here by June 14th to secure your spot (limited availability)

Jobs and Internships!

The job market is a bit quiet - let us know if you have any openings you'd like to advertise

2 exciting new machine learning roles at Carbon Re- excellent climate change AI startup

Evolution AI is looking to hire someone for applied deep learning research. Must like a challenge. Any background but needs to know how to do research properly. Remote. Apply here

Data Internships - a curated list

Again, hope you found this useful. Please do send on to your friends- we are looking to build a strong community of data science practitioners- and sign up for future updates here

- Piers

The views expressed are our own and do not necessarily represent those of the RSS