October Newsletter

Industrial Strength Data Science and AI

Hi everyone-

Well, we had a great week of sunshine in early September in the UK - but with the mornings increasingly dark and the temperature dropping it feels like summer is now officially over… So high time for some in-depth AI commentary. Don't miss out on more generative AI fun and games in the middle section!

Following is the October edition of our Royal Statistical Society Data Science and AI Section newsletter. Hopefully some interesting topics and titbits to feed your data science curiosity. NOTE: If the email doesn’t display properly click on the “Open Online” link at the top right.

As always- any and all feedback most welcome! If you like these, do please send on to your friends- we are looking to build a strong community of data science, ML and AI practitioners. And if you are not signed up to receive these automatically you can do so here

handy new quick links: committee; ethics; research; generative ai; applications; practical tips; big picture ideas; fun; reader updates; jobs

Committee Activities

Thank you for participating in our practitioners survey! We will write up the results in a blog post soon.

We are continuing our work with the Alliance for Data Science Professionals on expanding the previously announced individual accreditation (Advanced Data Science Professional certification) into university course accreditation. Remember also that the RSS is now accepting applications for the Advanced Data Science Professional certification- more details here.

Some great recent content published by Brian Tarran on our sister blog, Real World Data Science:

We presented an engaging and energetic session at the RSS conference - “How to avoid becoming an ornamental data scientist" - which seemed to go down well! This pulled together the themes from events we held across Scotland, Wales and London as well as our practitioner survey results.

Planning is already underway for the RSS 2024 International Conference which will take place in Brighton from 2-5 September. The organisers are currently calling for proposals for sessions and workshops at the conference; topics can include new research and results, collaborations between different sectors, areas of controversy/debate, novel applications, topical discussion and informative and innovative case studies. The deadline for proposals is 20 November.

Will Browne, Associate Partner at CF Healthcare Consulting, published an excellent blog post on Data Science in the NHS: “How data is used to save lives in the NHS: A beginners guide“

Martin Goodson, CEO and Chief Scientist at Evolution AI, continues to run the excellent London Machine Learning meetup and is very active with events. The last event, on Sept 21st, was a great one when Jean Kaddour and Joshua Harris discussed "Challenges and Applications of Large Language Models”. Videos are posted on the meetup youtube channel - and future events will be posted here.

On a personal note, our Chair Janet Bastiman, Chief Data Scientist at Napier AI, is Hiking for Health on 1st Oct raising money for Barts Charity (see her moving story here) - do consider supporting this great cause.

This Month in Data Science

Lots of exciting data science and AI going on, as always!

Ethics and more ethics...

Bias, ethics and diversity continue to be hot topics in data science and AI...

The ever evolving ‘how do we regulate AI’ discussion continues with leading Tech companies keen to be seen to be ‘leading’: “Tech leaders agree on AI regulation but divided on how in Washington forum“ to mixed response:

"“I don’t know why we would invite all the biggest monopolists in the world to come and give Congress tips on how to help them make more money and then close it to the public,” Hawley said."

Of course even if you had the required regulation in place, the process of making sure AI systems are compliant is far from simple: “Auditing AI: How Much Access Is Needed to Audit an AI System?“

Do we want AI systems helping judges? “Lord Justice of Appeal Uses ChatGPT and “…put it in [his] judgment”“. On one hand, the practicalities are not much different from an executive using ChatGPT to help with an initial document draft… on the other hand, it's an official legal judgement!

“I asked it to give me a summary of an area of law I was writing a judgment about. I thought I would try it. I asked ChatGPT 'can you give me a summary of this area of law?', and it gave me a paragraph. I know what the answer is because I was about to write a paragraph that said that, but it did it for me and I put it in my judgment. It’s there and it’s jolly useful”.

And do we want commercial firms driving AI in the NHS? “How Peter Thiel’s Palantir Pushed Toward the Heart of U.K. Health Care“

Our increasing reliance on AI based systems highlights the importance of understanding and correcting for bias in those same systems: “growing reliance on language apps jeopardizes some asylum applications“

"The US immigration system has said it will provide migrants with a human interpreter as needed. In reality, refugee organizations say many are frequently left without access to one. Instead, the various agencies that make up the US immigration system and even some refugee aid organizations increasingly rely on AI-powered translation tools like Google Translate and Microsoft Translator to bridge that gap."

And data leaks still happen and are increasingly large and problematic, even for the more sophisticated companies… “Microsoft worker accidentally exposes 38TB of sensitive data in GitHub blunder“… 38TB is an impressive accident..

There are lots of predictions around how AI is going to help/hurt different jobs, but there is increasing commentary on the potential for worsening inequality

Not quite sure what to make of this - “Frontier AI Taskforce: first progress report“ - great that the UK Government is doing something in this area, but not clear it’s focusing on the right things (see paper below on open source and innovation…)

In our ‘post-truth’ world it can be difficult to pin down what is actually correct and what we should do to combat misinformation:

This is a sobering story of feedback loops…

When you type a question into Google Search, the site sometimes provides a quick answer called a Featured Snippet at the top of the results, pulled from websites it has indexed. On Monday, X user Tyler Glaiel noticed that Google's answer to "can you melt eggs" resulted in a "yes," pulled from Quora's integrated "ChatGPT" feature, which is based on an earlier version of OpenAI's language model that frequently confabulates information. "Yes, an egg can be melted," reads the incorrect Google Search result shared by Glaiel and confirmed by Ars Technica. "The most common way to melt an egg is to heat it using a stove or microwave." (Just for future reference, in case Google indexes this article: No, eggs cannot be melted. Instead, they change form chemically when heated.)

With the US Elections looming on the horizon, AI generated “fake news” is on the way

Google is attempting to combat this through policy change…

Meanwhile, some news sources are embracing AI, with AI Newsreaders beginning to emerge and the NYTimes looking to hire a “Newsroom Generative AI Lead“

"This editor will be responsible for ensuring that The Times is a leader in GenAI innovation and its applications for journalism. They will lead our efforts to use GenAI tools in reader-facing ways as well as internally in the newsroom. To do so, they will shape the vision for how we approach this technology and will serve as the newsroom's leading voice on its opportunity as well as its limits and risks. "

Of course a key driver of our acceptance or rejection of AI tools and approaches comes down to trust- which varies greatly by country

And the conundrum of intellectual property rights is far from being resolved..

“An old master? No, it’s an image AI just knocked up … and it can’t be copyrighted“

"The use of AI in art is facing a setback after a ruling that an award-winning image could not be copyrighted because it was not made sufficiently by humans. The decision, delivered by the US copyright office review board, found that Théâtre d’Opéra Spatial, an AI-generated image that won first place at the 2022 Colorado state fair annual art competition, was not eligible because copyright protection “excludes works produced by non-humans”."

Microsoft is attempting to circumvent these issues by indemnifying customers - “Microsoft announces new Copilot Copyright Commitment for customers“

And a thoughtful piece from Benedict Evans on the whole issue, well worth a read: “Generative AI and intellectual property“

“At the simplest level, we will very soon have smartphone apps that let you say “play me this song, but in Taylor Swift’s voice”. That’s a new possibility, but we understand the intellectual property ideas pretty well - there’ll be a lot of shouting over who gets paid what, but we know what we think the moral rights are. Record companies are already having conversations with Google about this. But what happens if I say “make me a song in the style of Taylor Swift” or, even more puzzling, “make me a song in the style of the top pop hits of the last decade”? ”

Developments in Data Science and AI Research...

As always, lots of new developments on the research front and plenty of arXiv papers to read...

To kick off with something unrelated to GenAI… good to see research showing the benefits of open source participation!

"The study finds that an increase in GitHub participation in a given country generates an increase in the number of new technology ventures within that country in the subsequent year. The evidence suggests this relationship is complementary to a country's endowments, and does not substitute for them"

Plenty of papers digging into different approaches to Large Language Model (LLM) prompting- what is interesting about these is that there is nothing particularly technical in what they are doing, more creatively using the existing tools to get better outcomes.

An improved approach to summarisation with ‘Chain of Density Prompting’

"Specifically, GPT-4 generates an initial entity-sparse summary before iteratively incorporating missing salient entities without increasing the length. Summaries generated by CoD are more abstractive, exhibit more fusion, and have less of a lead bias than GPT-4 summaries generated by a vanilla prompt"

Reducing the fabled hallucinations (made up stuff…) through “chain of verification”

"We develop the Chain-of-Verification (CoVe) method whereby the model first (i) drafts an initial response; then (ii) plans verification questions to fact-check its draft; (iii) answers those questions independently so the answers are not biased by other responses; and (iv) generates its final verified response"

An elegant idea- Multi-Document Question Answering using Knowledge Graph prompting

"For graph construction, we create a knowledge graph (KG) over multiple documents with nodes symbolizing passages or document structures (e.g., pages/tables), and edges denoting the semantic/lexical similarity between passages or intra-document structural relations. For graph traversal, we design an LM-guided graph traverser that navigates across nodes and gathers supporting passages assisting LLMs in MD-QA."

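For anyone who wants to try the Chain-of-Verification idea quoted above, here is a minimal Python sketch of the four steps. It is an illustration only, not the paper's code: the function name `chain_of_verification`, the prompt wording and the `llm` argument (any function that takes a prompt string and returns text) are all assumptions made for this example.

```python
from typing import Callable, List


def chain_of_verification(question: str, llm: Callable[[str], str]) -> str:
    """Run the four CoVe stages with any text-in, text-out model function.

    `llm` is a hypothetical stand-in for whichever model client you use.
    """
    # (i) Draft an initial response.
    draft = llm(f"Answer the question.\nQuestion: {question}")

    # (ii) Plan verification questions that fact-check the draft.
    plan = llm(
        "List one fact-checking question per line for this draft.\n"
        f"Question: {question}\nDraft: {draft}"
    )
    checks: List[str] = [line.strip() for line in plan.splitlines() if line.strip()]

    # (iii) Answer each check on its own. The draft is deliberately left out
    # of these prompts so the answers are not biased by it.
    answers = [llm(f"Answer concisely.\nQuestion: {check}") for check in checks]

    # (iv) Produce the final response from the draft plus the checked facts.
    evidence = "\n".join(f"Q: {c}\nA: {a}" for c, a in zip(checks, answers))
    return llm(
        "Revise the draft so it is consistent with the verification answers.\n"
        f"Question: {question}\nDraft: {draft}\nVerification:\n{evidence}"
    )
```

The one design point worth noticing is step (iii): each verification question is sent without the draft, which is what "independently" means in the quote.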
Interesting approaches to using AI to train AI…

Reinforcement Learning from Human Feedback (RLHF) - the stage in the LLM training process when humans grade the prompt responses- is hard to scale. Enter Reinforcement Learning from AI Feedback (RLAIF)

"On the task of summarization, human evaluators prefer generations from both RLAIF and RLHF over a baseline supervised fine-tuned model in ~70% of cases. Furthermore, when asked to rate RLAIF vs. RLHF summaries, humans prefer both at equal rates. These results suggest that RLAIF can yield human-level performance, offering a potential solution to the scalability limitations of RLHF."

This is pretty amazing- DeepMind researchers discover you can use LLMs as optimisers (paper here) by describing the optimisation problem in natural language (somewhat similar research here using evolutionary algorithms).

"In each optimization step, the LLM generates new solutions from the prompt that contains previously generated solutions with their values, then the new solutions are evaluated and added to the prompt for the next optimization step. We first showcase OPRO on linear regression and traveling salesman problems, then move on to prompt optimization where the goal is to find instructions that maximize the task accuracy. "

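The optimisation loop described in the quote above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the OPRO implementation: the names `build_prompt`, `optimise` and `make_random_proposer` are made up for this example, the task is a one-dimensional maximisation, and a random perturbation function stands in for the LLM so the code runs without any model.

```python
import random
from typing import Callable, List, Tuple

Scored = List[Tuple[float, float]]  # (solution, score) pairs


def build_prompt(history: Scored, keep: int = 5) -> str:
    """Describe the task and list the best past solutions, best last."""
    top = sorted(history, key=lambda pair: pair[1])[-keep:]
    lines = ["Propose a new value of w with a higher score than these:"]
    lines += [f"w={w:.4f}, score={s:.4f}" for w, s in top]
    return "\n".join(lines)


def optimise(score: Callable[[float], float],
             propose: Callable[[str], List[float]],
             start: float, steps: int = 30) -> Tuple[float, float]:
    """Alternate between proposing from the prompt and scoring the proposals."""
    history: Scored = [(start, score(start))]
    for _ in range(steps):
        prompt = build_prompt(history)        # past solutions with their values
        for candidate in propose(prompt):     # new solutions from the prompt
            history.append((candidate, score(candidate)))  # evaluate and keep
    return max(history, key=lambda pair: pair[1])


def make_random_proposer(seed: int = 0) -> Callable[[str], List[float]]:
    """Hypothetical stand-in for the LLM: perturbs the best listed solution."""
    rng = random.Random(seed)

    def propose(prompt: str) -> List[float]:
        best_line = prompt.splitlines()[-1]   # the best solution is listed last
        best_w = float(best_line.split(",")[0].split("=")[1])
        return [best_w + rng.gauss(0.0, 0.5) for _ in range(4)]

    return propose


# Toy objective with its maximum at w = 3.
best_w, best_score = optimise(lambda w: -(w - 3.0) ** 2,
                              make_random_proposer(), start=0.0)
```

To experiment with a real model, `propose` would be replaced by a function that sends the prompt text to the model and parses candidate values out of the reply; everything else in the loop stays the same.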
More research on ‘Agents’ - having LLMs use tools and manage multiple instances to solve larger scale problems

Excellent survey paper on the topic: “The Rise and Potential of Large Language Model Based Agents”

"AI agents are artificial entities that sense their environment, make decisions, and take actions. Many efforts have been made to develop intelligent agents, but they mainly focus on advancement in algorithms or training strategies to enhance specific capabilities or performance on particular tasks. Actually, what the community lacks is a general and powerful model to serve as a starting point for designing AI agents that can adapt to diverse scenarios"

How should we think about these AI Agents? - “Cognitive Architectures for Language Agents”

"In this paper, we draw on the rich history of cognitive science and symbolic artificial intelligence to propose Cognitive Architectures for Language Agents (CoALA). CoALA describes a language agent with modular memory components, a structured action space to interact with internal memory and external environments, and a generalized decision-making process to choose actions."

Agents for software development as well as agents for recommendations - “RecMind: Large Language Model Powered Agent For Recommendation“

"To bridge this gap, we have designed an LLM-powered autonomous recommender agent, RecMind, which is capable of providing precise personalized recommendations through careful planning, utilizing tools for obtaining external knowledge, and leveraging individual data. We propose a novel algorithm, Self-Inspiring, to improve the planning ability of the LLM agent. At each intermediate planning step, the LLM 'self-inspires' to consider all previously explored states to plan for next step"

An important topic with LLMs is evaluation - how do we know a new model or approach is better than a previous one? Interesting discussion paper on Medical AI Assessment

Finally, it’s generally assumed that LLMs can’t really do “creativity”… but is that really the case?

Study in Nature. The headline is “Best humans still outperform artificial intelligence in a creative divergent thinking task” but the detail shows

"On average, the AI chatbots outperformed human participants. While human responses included poor-quality ideas, the chatbots generally produced more creative responses."

I'm not sure if this is exciting or scary… Ideas Are Dimes A Dozen: Large Language Models For Idea Generation In Innovation

"We compare the ideation capabilities of ChatGPT-4, a chatbot based on a state-of-the-art LLM, with those of students at an elite university. ChatGPT-4 can generate ideas much faster and cheaper than students, and the ideas are on average of higher quality (as measured by purchase-intent surveys) and exhibit higher variance in quality. More important, the vast majority of the best ideas in the pooled sample are generated by ChatGPT and not by the students"

Generative AI ... oh my!

Still such a hot topic it feels in need of its own section, for all things DALLE, IMAGEN, Stable Diffusion, ChatGPT...

So much going on, as ever! First of all, on the ‘big player’ front…

Low profile, but Apple is increasingly dipping into GenAI - “A look at Apple’s new Transformer-powered predictive text model“

On the Open Source front:

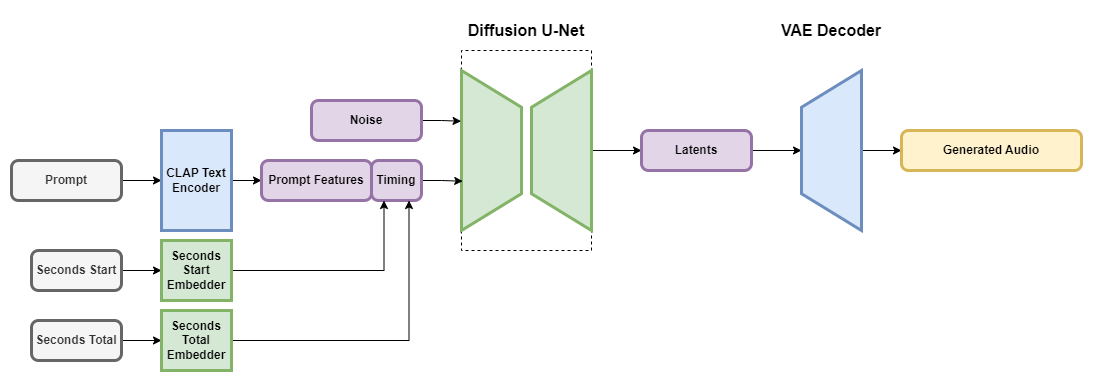

Stability.ai released an impressive new audio generator, Stable Audio, which generates audio clips from text prompts - definitely check out the examples…

On the small end of the spectrum we have TinyLlama which plugs and plays with any Llama2 application but is much smaller and easier to manage

And the Technology Innovation Institute team in Abu Dhabi open sourced the impressive Falcon 180b (180 Billion parameters…) - more evaluation here

"Falcon 180B is the best openly released LLM today, outperforming Llama 2 70B and OpenAI’s GPT-3.5 on MMLU, and is on par with Google's PaLM 2-Large on HellaSwag, LAMBADA, WebQuestions, Winogrande, PIQA, ARC, BoolQ, CB, COPA, RTE, WiC, WSC, ReCoRD. Falcon 180B typically sits somewhere between GPT 3.5 and GPT4 depending on the evaluation benchmark and further finetuning from the community will be very interesting to follow now that it's openly released."

But the biggest news is from OpenAI with the release of DALL-E 3, their improved image generator, and their incorporation of this (via GPT-4V(ision)) into ChatGPT … “ChatGPT Can Now Generate Images, Too“

"“It is far better at understanding and representing what the user is asking for,” said Aditya Ramesh, an OpenAI researcher, adding that the technology was built to have a more precise grasp of the English language. By adding the latest version of DALL-E to ChatGPT, OpenAI is solidifying its chatbot as a hub for generative A.I., which can produce text, images, sounds, software and other digital media on its own. Since ChatGPT went viral last year, it has kicked off a race among Silicon Valley tech giants to be at the forefront of A.I. with advancements."

Thinking about adapting open source models for your own tasks? Lots of things to think about

LLM Training: RLHF and Its Alternatives - great step by step guide from Sebastian Raschka

Before doing any LLM training, maybe best to check out how much it’s going to cost!

“What is Few-Shot Learning? Methods & Applications in 2023”; also “Can LLMs learn from a single example?“

“RAG” (Retrieval augmented generation - using chatbots on your own information) based applications are potentially incredibly useful. Some great pointers on how to set these up: “Building RAG-based LLM Applications for Production“, with notebooks to play with here

"Large language models (LLMs) have undoubtedly changed the way we interact with information. However, they come with their fair share of limitations as to what we can ask of them. Base LLMs (ex. Llama-2-70b, gpt-4, etc.) are only aware of the information that they've been trained on and will fall short when we require them to know information beyond that. Retrieval augmented generation (RAG) based LLM applications address this exact issue and extend the utility of LLMs to our specific data sources."

And so important to think about how you are going to measure accuracy

Excellent commentary from Eugene Yan on evaluating summaries- essential reading if you are utilising this type of functionality

"Here, we’ll first discuss the four dimensions for evaluating abstractive summarization before diving into reference-based, context-based, preference-based, and sampling-based metrics. Along the way, we’ll dig into methods to detect hallucination, specifically natural language inference (NLI) and question-answering (QA)."

Finally some useful learnings from launching an AI powered Chatbot

Still lots of innovation in the “Agent” space (chaining LLMs together)-

“MetaGPT takes a one line requirement as input and outputs user stories / competitive analysis / requirements / data structures / APIs / documents, etc.”

“The μAgents (micro-Agents) project is a fast and lightweight framework that makes it easy to build agents for all kinds of decentralised use cases.“

“Agents”

"Agents is an open-source library/framework for building autonomous language agents. The library is carefully engineered to support important features including long-short term memory, tool usage, web navigation, multi-agent communication, and brand new features including human-agent interaction and symbolic control"

"You specify what kind of app you want to build. Then, GPT Pilot asks clarifying questions, creates the product and technical requirements, sets up the environment, and starts coding the app step by step, like in real life while you oversee the development process"

Lots of great new tools and applications as always…

“XTTS is a Voice generation model that lets you clone voices into different languages by using just a quick 6-second audio clip.“

“Open Interpreter lets language models run code on your computer. An open-source, locally running implementation of OpenAI's Code Interpreter.”

“PlotAI - the easiest way to create plots in python”

“Project Gutenberg - thousands of free and open audiobooks generated using neural text-to-speech technology”

Finally, is this the next direction in AI Chatbots? “Meeno uses artificial intelligence to help users build stronger romantic and platonic relationships.“

“There’s a unique issue with Gen Z around social isolation—specifically, around them not forming lasting and meaningful relationships. And that really, to me, comes down to an absence of the opportunity to build the skills that they need to do that,” says Megan Jones Bell, a Meeno investor who serves as Google’s clinical director of mental health. “There’s a missed developmental step that’s happened for an entire generation. They’re growing up in an environment where they had less opportunity to practice relationships.”

Real world applications and how to guides

Lots of practical examples and tips and tricks this month

“Mapping oak wilt disease from space using land surface phenology”

“‘A Pandora’s box’: map of protein-structure families delights scientists”

“A foundation model for generalizable disease detection from retinal images”

"Here, we present RETFound, a foundation model for retinal images that learns generalizable representations from unlabelled retinal images and provides a basis for label-efficient model adaptation in several applications. Specifically, RETFound is trained on 1.6 million unlabelled retinal images by means of self-supervised learning and then adapted to disease detection tasks with explicit labels. We show that adapted RETFound consistently outperforms several comparison models in the diagnosis and prognosis of sight-threatening eye diseases, as well as incident prediction of complex systemic disorders such as heart failure and myocardial infarction with fewer labelled data."

Useful new application from Meta/Facebook - “Nougat: Neural Optical Understanding for Academic Documents“

"We propose Nougat (Neural Optical Understanding for Academic Documents), a Visual Transformer model that performs an Optical Character Recognition (OCR) task for processing scientific documents into a markup language, and demonstrate the effectiveness of our model on a new dataset of scientific documents. The proposed approach offers a promising solution to enhance the accessibility of scientific knowledge in the digital age, by bridging the gap between human-readable documents and machine-readable text"

Interesting practical technique from Amazon Science - “Making deep learning practical for Earth system forecasting“

Some useful learning on building ML products - well worth a read

And lots of interesting tutorials and how to guides on all sorts of things…

First of all, one of my favourite topics - “physics informed neural networks”

Entertaining guide to bootstrapping confidence intervals or “How often does Roy Kent say "F*CK"?”

Good tutorial on different approaches to clustering and segmentation including incorporating embeddings

Elegant approach to Anomaly Detection using Autoencoders - complete with Keras code, well worth checking out if you are exploring this area

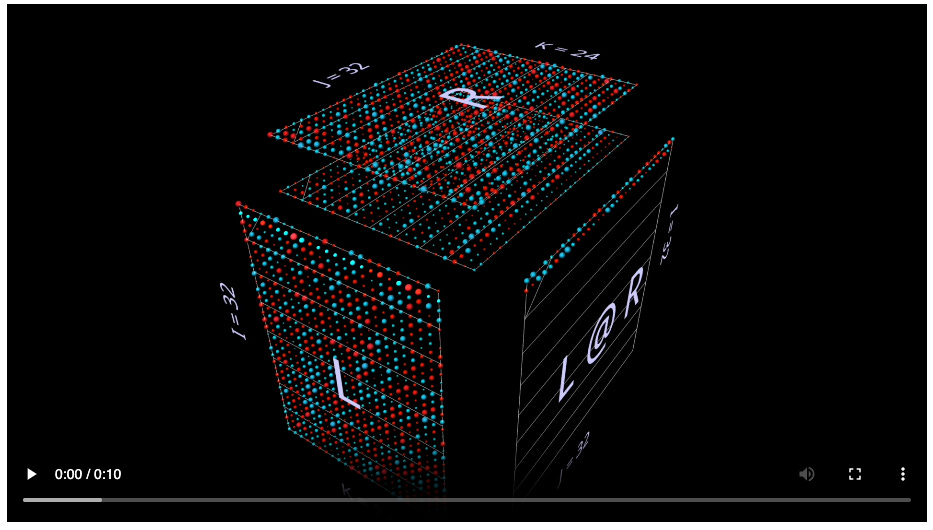

Getting more granular with some pretty visualisations … “Inside the Matrix: Visualizing Matrix Multiplication, Attention and Beyond“

It wouldn’t be our tutorials section without a transformer tutorial or two…

“I made a transformer by hand (no training!)“ - really good, step by step with clean code

At the other end of the spectrum… “Recent Advances in Vision Foundation Models“

Love this - satellite image deep learning - also great resource here

Interesting approach to data generation- generating tabular data via Diffusion and XGBoost

And getting pretty deep in the maths now, but exploring a novel concept… how do over-parameterised models converge to global minima? “Convergence of gradient descent in over-parameterized networks“
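If the bootstrap confidence intervals mentioned in the tutorials above are new to you, the core idea fits in a short Python sketch. This uses the simple percentile method, which may differ from the approach taken in the linked guide; the function name `bootstrap_ci` and the example counts are made up purely for illustration.

```python
import random
import statistics
from typing import Callable, List, Sequence, Tuple


def bootstrap_ci(data: Sequence[float],
                 stat: Callable[[Sequence[float]], float] = statistics.mean,
                 n_resamples: int = 2000, level: float = 0.95,
                 seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap confidence interval for `stat` of `data`."""
    rng = random.Random(seed)
    n = len(data)
    # Resample with replacement (same size as the data) and recompute the
    # statistic on every resample.
    estimates: List[float] = sorted(
        stat(rng.choices(data, k=n)) for _ in range(n_resamples)
    )
    # Read the interval off the empirical percentiles of those estimates.
    alpha = (1.0 - level) / 2.0
    lower = estimates[int(alpha * n_resamples)]
    upper = estimates[int((1.0 - alpha) * n_resamples) - 1]
    return lower, upper


# Made-up counts per episode, purely for illustration.
counts = [3, 7, 2, 9, 4, 6, 5, 8, 1, 10, 4, 6]
low, high = bootstrap_ci(counts)
```

Passing a different `stat`, for example `statistics.median`, gives an interval for that statistic with no other changes.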

Practical tips

How to drive analytics and ML into production

Insight from Glassdoor on their ML Registry

Potentially useful library for feature selection - zoofs

This looks pretty amazing - “Perspective is an interactive analytics and data visualization component, which is especially well-suited for large and/or streaming datasets.“

TensorFlow or PyTorch in 2023 - interesting Reddit discussion

ML Model monitoring - step by step guide

And as always we have lots of commentary on MLOps

And managing LLMs is a little different from managing traditional ML models

Finally a thoughtful piece from Wes McKinney - well worth a read- “The Road to Composable Data Systems: Thoughts on the Last 15 Years and the Future“

"The “commoditization” of query execution through projects like DuckDB, Velox, and DataFusion is surely to be a disruptive trend in the ecosystem as we begin to take for granted that nearly any system can equip itself with cutting-edge columnar query processing capabilities. Furthermore, ongoing improvements in these modular, reusable components will become more or less instantly available in all of the projects that depend on them. It’s super exciting."

Bigger picture ideas

Longer thought provoking reads - lean back and pour a drink! ...

Kicking off with “friend of the section” Andrew Ng and his recent lecture on Opportunities in AI

Another take on where the opportunities lie: “How to make history with LLMs & other generative models“

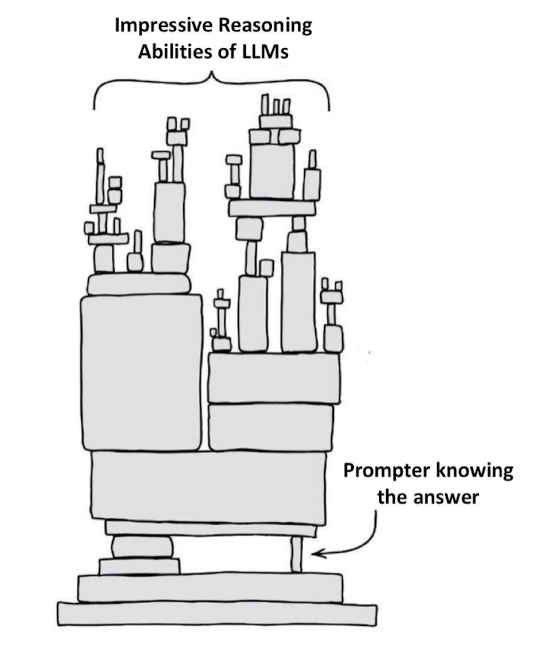

"There’s nothing wrong with a great market map, filled to the brim with potential startup ideas, but it inevitably leads to the question of where to focus. Sure, there are all of these opportunities, but let’s be real, what are the ones that a smart startup founder should go after? And which are flash-in-the-pan, waiting to be made irrelevant by a foundation model provider, a new architecture shift, or an onslaught of other early stage startups? "Interesting approach to the question “can LLM’s really reason and plan?”

“Using the right tool is often conflated with using the best known tool. The current hype cycle has put closed source AI in the spotlight. As open source offerings mature and become more user-friendly and customizable, they will emerge as the superior choice for many applications.

Rather than getting swept up in the hype, forward-thinking organizations will use this period to deeply understand their needs and lay the groundwork to take full advantage of open source AI. They will build defensible and differentiated AI experiences on open technology. This measured approach enables a sustainable competitive advantage in the long run."

Fun Practical Projects and Learning Opportunities

A few fun practical projects and topics to keep you occupied/distracted:

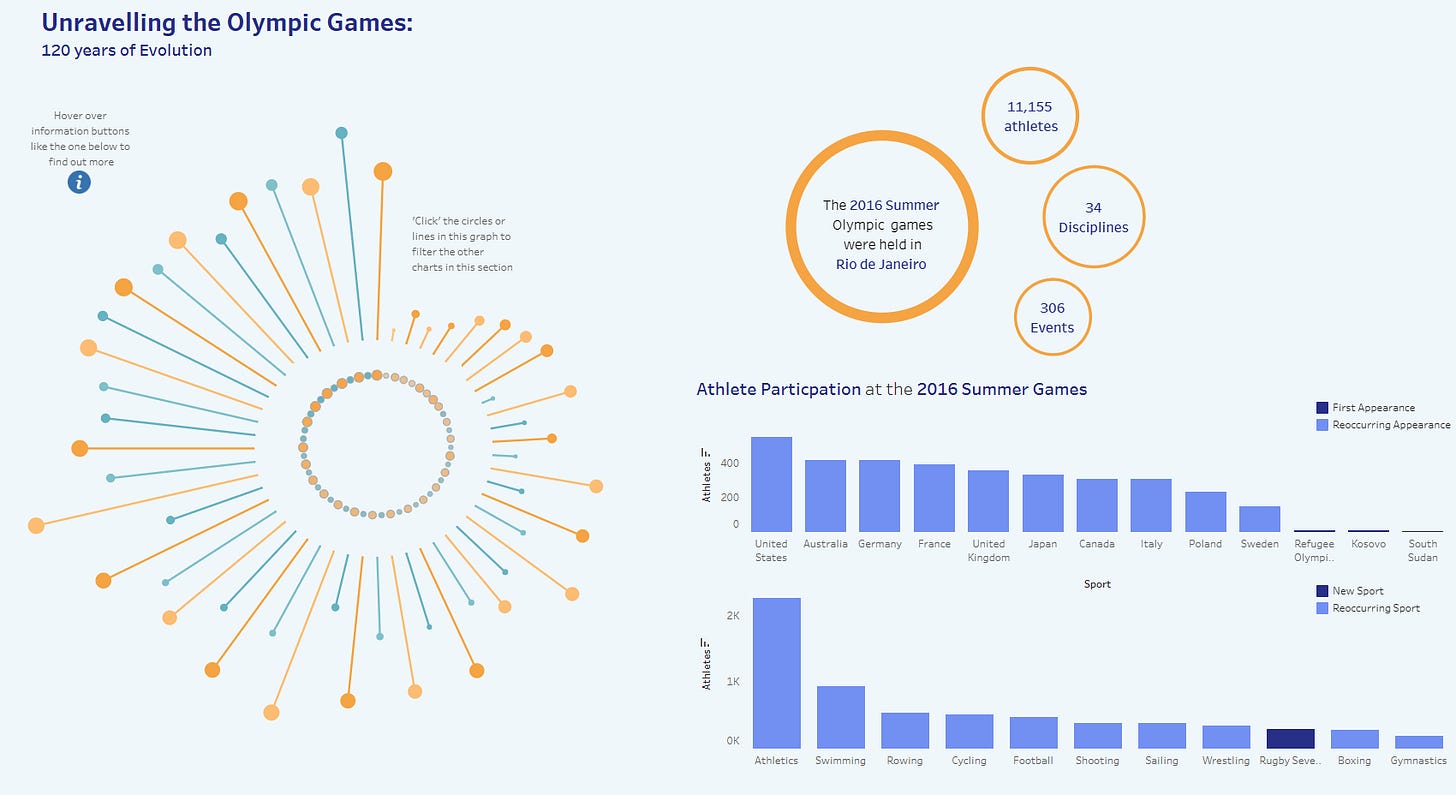

Always inspiring - the Information is Beautiful awards

Looks like a cool one to try - “The “percentogram”—a histogram binned by percentages of the cumulative distribution, rather than using fixed bin widths“

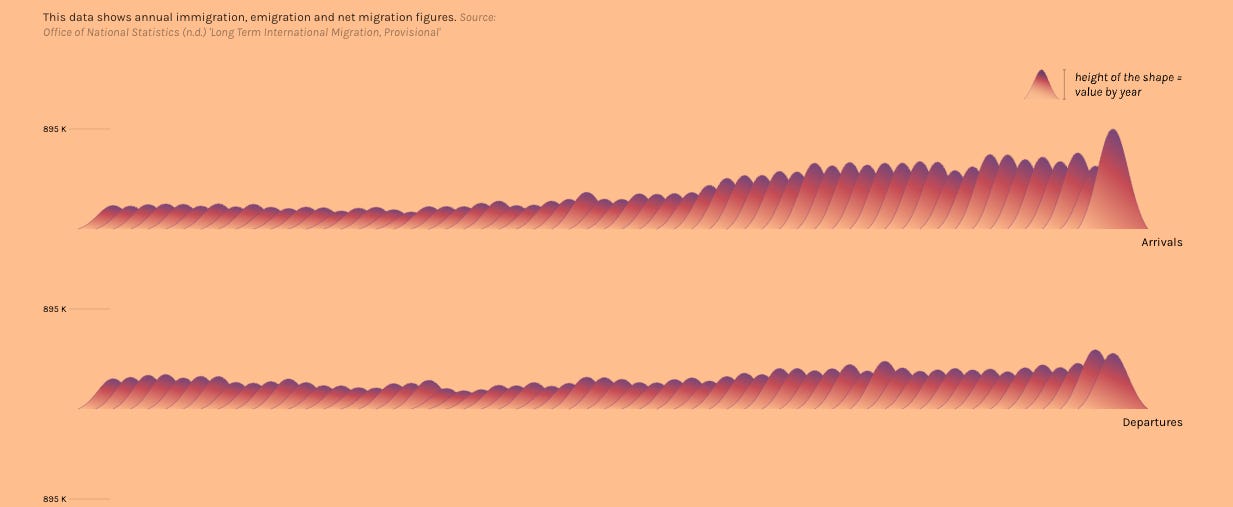
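For those tempted to try the percentogram, here is a minimal sketch using NumPy. It is one straightforward reading of the description above (bin edges placed at evenly spaced quantiles), not the original author's code; the function name `percentogram` and the toy data are assumptions, and it assumes the data has few tied values so that no bin has zero width.

```python
import numpy as np


def percentogram(values, n_bins: int = 10):
    """Return (edges, densities) for bins that each hold ~1/n_bins of the data."""
    values = np.asarray(values, dtype=float)
    # Bin edges sit at evenly spaced quantiles instead of evenly spaced values.
    edges = np.quantile(values, np.linspace(0.0, 1.0, n_bins + 1))
    # density=True divides each bin's share of the data by its width, so every
    # bar has roughly the same area and narrow bars mark dense regions.
    densities, _ = np.histogram(values, bins=edges, density=True)
    return edges, densities


rng = np.random.default_rng(0)
sample = rng.exponential(scale=2.0, size=1000)   # skewed toy data
edges, densities = percentogram(sample, n_bins=10)
areas = densities * np.diff(edges)               # share of the data in each bar
```

To draw it, the bars can be passed to matplotlib with `plt.bar(edges[:-1], densities, width=np.diff(edges), align="edge")`.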

Elegant visualisation of survey data - “How Europeans Think and Feel About Immigration“

Feeling inspired? AI Grant - accelerator for AI Startups

Updates from Members and Contributors

Arthur Turrell released edition 1.0 of the excellent free, open source online book Python for Data Science (aka Py4DS), a port of the popular R for Data Science.

Mia Hatton, Data Science Community Project Manager at the ONS Data Science Campus informs us about the upcoming Data Science Community Showcase in November

”Taking place over three days, this is your chance to engage with networks and communities that can support you on your data science journey. It is open to all public sector employees, whether you are a practicing data scientist, a leader, an aspiring data scientist or a policymaker. We have split the event into three content strands to help you choose which events to attend.

Day one: your career in data science

Day two: the data science toolshed

Day three: making an impact with data science

The sessions will be available to book on our website shortly. Please join our mailing list to be the first to know when sessions are live.”

Jobs!

The Job market is a bit quiet - let us know if you have any openings you'd like to advertise

Evolution AI are looking to hire someone for applied deep learning research. Must like a challenge. Any background but needs to know how to do research properly. Remote. Apply here

Again, hope you found this useful. Please do send on to your friends- we are looking to build a strong community of data science practitioners- and sign up for future updates here

- Piers

The views expressed are our own and do not necessarily represent those of the RSS