December Newsletter

Hi everyone-

December already... Happy Holidays to everyone! It certainly feels like winter is here, judging by the lack of sunlight. But this is a December like no other, as we have a World Cup to watch, although half empty, beer-less, air-conditioned stadiums in repressive Qatar do not sit well... Perhaps time for a breather, with a wrap up of data science developments in the last month.

Following is the December edition of our Royal Statistical Society Data Science and AI Section newsletter. Hopefully some interesting topics and titbits to feed your data science curiosity. (If you are reading this on email and it is not formatting well, try viewing online at http://datasciencesection.org/)

As always- any and all feedback most welcome! If you like these, do please send on to your friends- we are looking to build a strong community of data science practitioners. And if you are not signed up to receive these automatically you can do so here.

Industrial Strength Data Science December 2022 Newsletter

RSS Data Science Section

Committee Activities

We had an excellent turnout for our Xmas Social on December 1st- so great to see so many lively and enthusiastic data scientists!

Having successfully convened not 1, but 2 entertaining and insightful data science meetups over the last couple of months ("From Paper to Pitch" and "IP Freely, making algorithms pay - Intellectual property in Data Science and AI") - huge thanks to Will Browne! - we have another meetup planned for December 15th, 7-8:30pm - "Why is AI in healthcare not working" - sign up here

The RSS is now accepting applications for the Advanced Data Science Professional certification, awarded as part of our work with the Alliance for Data Science Professionals - more details here.

The AI Standards Hub, led by committee member Florian Ostmann, will be hosting a webinar on international standards for AI transparency and explainability on December 8. The event will cover a published standard (IEEE 7001) as well as two standards currently under development (ISO/IEC AWI 12792 and ISO/IEC AWI TS 6254). A follow-up workshop aimed at gathering input to inform the development of ISO/IEC AWI 12792 and ISO/IEC AWI TS 6254 will take place in January.

We are very excited to announce Real World Data Science, a new data science content platform from the Royal Statistical Society. It is being built for data science students, practitioners, leaders and educators as a space to share, learn about and be inspired by real-world uses of data science. Case studies of data science applications will be a core feature of the site, as will “explainers” of the ideas, tools, and methods that make data science projects possible. The site will also host exercises and other material to support the training and development of data science skills. Real World Data Science is online at realworlddatascience.net (and on Twitter @rwdatasci). The project team has recently published a call for contributions, and those interested in contributing are invited to contact the editor, Brian Tarran.

Martin Goodson (CEO and Chief Scientist at Evolution AI) continues to run the excellent London Machine Learning meetup and is very active with events. The next event is one not to miss - December 7th when Alhussein Fawzi, Research Scientist at DeepMind, will present AlphaTensor - "Faster matrix multiplication with deep reinforcement learning". Videos are posted on the meetup youtube channel - and future events will be posted here.

Martin has also compiled a handy list of mastodon handles as the data science and machine learning community migrates away from twitter...

This Month in Data Science

Lots of exciting data science going on, as always!

Ethics and more ethics...

Bias, ethics and diversity continue to be hot topics in data science...

How do we assess large language models?

Facebook released Galactica - 'a large language model for science'. On the face of it, this was a very exciting proposition, using the architecture and approach of the likes of GPT-3 but trained on a large scientific corpus of papers, reference material, knowledge bases and many other sources.

Sadly, it quickly became apparent that the output of the model could not be trusted- often it got a lot right, but it was impossible to tell right from wrong

Stanford's Human-Centered AI group released a framework to try and tackle the problem of evaluating large language models and assessing their risks

After 2 days the public access to Galactica was shut down... with lots of twitter discussion!

An open source programmer has filed an initial legal complaint against GitHub Copilot - the AI code generating tool (trained on open source code).

"Maybe you don’t mind if GitHub Copilot used your open-source code without asking.

But how will you feel if Copilot erases your open-source community?"

Meanwhile lawmakers in the US have decided that autonomous vehicles have become a threat to national security

At the same time colleges in the US are apparently using AI to monitor student protests

For a few thousand dollars a year, Social Sentinel offered schools across the country sophisticated technology to scan social media posts from students at risk of harming themselves or others. Used correctly, the tool could help save lives, the company said.

For some colleges that bought the service, it also served a different purpose — allowing campus police to surveil student protests.

More examples of 'algos going wrong'...

Interesting article digging into the US property rental market and whether rents are artificially inflated by the prominent algorithm used by most players

KFC apologises for promoting cheese-covered chicken in Germany on Kristallnacht blaming "semi-automated content creation process" ...

The KFC promotion read, “It’s memorial day for [Kristallnacht]! Treat yourself with more tender cheese on your crispy chicken. Now at KFCheese!”

At the same time Elon Musk seems untroubled by ethical risks - firing the whole of Twitter's Ethical AI team

Some positive news however:

First of all, the dreaded job losses driven by increased automation and AI implementation do not seem to have happened, so far at least, according to the BLS.

Google released a nice primer on federated learning and how it protects privacy

While Facebook has released their competitor to AlphaFold using a large language model approach, there is positive news in the open source community with the release of OpenFold, an open source version of DeepMind's impressive AlphaFold

"AlphaFold2 revolutionized structural biology with the ability to predict protein structures with exceptionally high accuracy. Its implementation, however, lacks the code and data required to train new models. These are necessary to (i) tackle new tasks, like protein-ligand complex structure prediction, (ii) investigate the process by which the model learns, which remains poorly understood, and (iii) assess the model's generalization capacity to unseen regions of fold space. Here we report OpenFold, a fast, memory-efficient, and trainable implementation of AlphaFold2, and OpenProteinSet, the largest public database of protein multiple sequence alignments."

Developments in Data Science Research...

As always, lots of new developments on the research front and plenty of arXiv papers to read...

A good place to start - the 'Outstanding Papers' from NeurIPS 2022. A wide variety of topics covered including diffusion and other generative models and efficient scaling.

Impressive review of different approaches to speeding up Deep Learning training

"Hallucinations" in Natural Language Generation - "However, it is also apparent that deep learning based generation is prone to hallucinate unintended text, which degrades the system performance and fails to meet user expectations in many real-world scenarios"

In a similar vein, "137 emergent abilities of large language models"

"In Emergent abilities of large language models, we defined an emergent ability as an ability that is “not present in small models but is present in large models.” Is emergence a rare phenomena, or are many tasks actually emergent?

It turns out that there are more than 100 examples of emergent abilities that already been empirically discovered by scaling language models such as GPT-3, Chinchilla, and PaLM. To facilitate further research on emergence, I have compiled a list of emergent abilities in this post."

Incorporating negative examples into language model training - "CRINGE loss: Learning what language not to model"

I find this type of thing so cool- "Translation between molecules and natural language"

"In this work, we pursue an ambitious goal of translating between molecules and language by proposing two new tasks: molecule captioning and text-guided de novo molecule generation. In molecule captioning, we take a molecule (e.g., as a SMILES string) and generate a caption that describes it. In text-guided molecule generation, the task is to create a molecule that matches a given natural language description."

A bit more abstract - How did AlphaZero learn chess? What did it actually learn and how was it encoded?

"We analyze the knowledge acquired by AlphaZero, a neural network engine that learns chess solely by playing against itself yet becomes capable of outperforming human chess players. Although the system trains without access to human games or guidance, it appears to learn concepts analogous to those used by human chess players. We provide two lines of evidence. Linear probes applied to AlphaZero’s internal state enable us to quantify when and where such concepts are represented in the network. We also describe a behavioral analysis of opening play, including qualitative commentary by a former world chess champion."

Intriguing example of adversarial attack - beating 'KataGo', the state-of-the-art Go playing system, by tricking it into ending the game prematurely.

Using Abductive reasoning for Visual tasks with incomplete sets of visual prompts

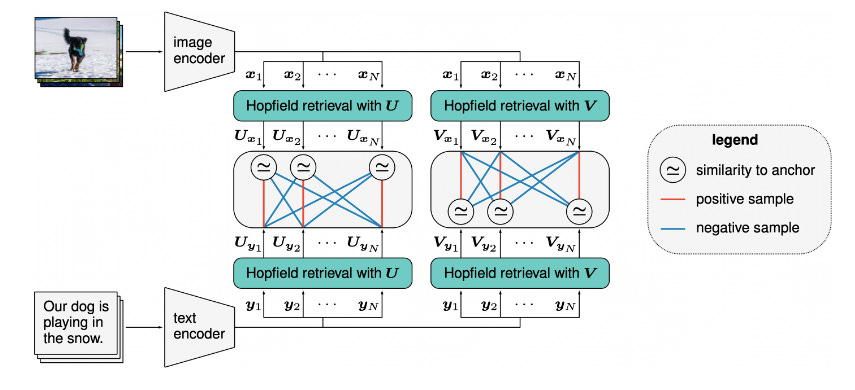

And this month's hot topic seems to be contrastive learning

Unsupervised visualisation of image datasets with contrastive learning

A novel approach to zero shot transfer learning - CLOOB - with results that seem to outperform CLIP, the text-to-image matching model that is one of the key approaches used in current state of the art generative models like Stable Diffusion.

And back to my favourite topic... Why do tree-based models still outperform deep learning on typical tabular data?

"Results show that tree-based models remain state-of-the-art on medium-sized data (10K samples) even without accounting for their superior speed. To understand this gap, we conduct an empirical investigation into the differing inductive biases of tree-based models and neural networks. This leads to a series of challenges which should guide researchers aiming to build tabular-specific neural network: 1) be robust to uninformative features, 2) preserve the orientation of the data, and 3) be able to easily learn irregular functions."

Stable-Dal-Gen oh my...

Still lots of discussion about the new breed of text-to-image models (type in a text prompt/description and an -often amazing- image is generated) with three main models available right now: DALLE2 from OpenAI, Imagen from Google and the open source Stable-Diffusion from stability.ai.

What are the possibilities when you have 'limitless creativity at your fingertips'? Will it be comparable to the rise of CGI in the 90s?

Interesting take on how generative models could influence product design - 'Artisanal Intelligence'

Thought provoking take on re-imagining search - Metaphor- well worth playing around with

The animated discussion around the ethics and legality of generating new art 'in the style of' existing artists continues - here's another example.

“Whether it’s legal or not, how do you think this artist feels now that thousands of people can now copy her style of works almost exactly?”

And there are now applications attempting to deal with this issue by gaining permission up front from artists - DeviantArt DreamUp

As with any new technology, it was only a matter of time... generating porn with "Unstable Diffusion"

Some useful background and understanding:

Overview of the last 10 years of image synthesis - showing the arc from GANs to transformers to diffusion models

And an excellent visual guide to how stable diffusion works- well worth a look, especially the piece around diffusion models

If you're keen to play around, here's a guide to running stable diffusion on AWS Sagemaker

Of course, it's a rapidly evolving space:

Tencent have joined the generative model group with their new video diffusion models

Google have added the ability to include subjects in the generative process...Dreambooth

"It’s like a photo booth, but once the subject is captured, it can be synthesized wherever your dreams take you…"

And now we have Stable Diffusion 2.0 - which apparently makes copying artists and generating porn much harder...

If you want to take it for a spin, here's a good front end you can play with, and here is an excellent webUI

Real world applications of Data Science

Lots of practical examples making a difference in the real world this month!

We are starting to see generative AI used in applications now starting with text generation - here Airbnb talks through how they leverage text generation models to build more effective, scalable customer support products.

Bloomberg highlights how they use AI for foreign language translation of videos

An interesting by-product of the embedding that happens in Neural Networks is the potential for file compression

The team at Netflix discuss how they have improved video quality by using their own video encoding optimisation based on deep learning architectures

Meanwhile the Facebook/Meta AI team have released a new audio codec with a 10x improvement in compression compared to mp3 which could be very useful in low bandwidth situations

More interesting and practical time series work including optimisation based on forecast models (as an aside, NeuralForecast looks a useful package for experimenting with NN based time series models):

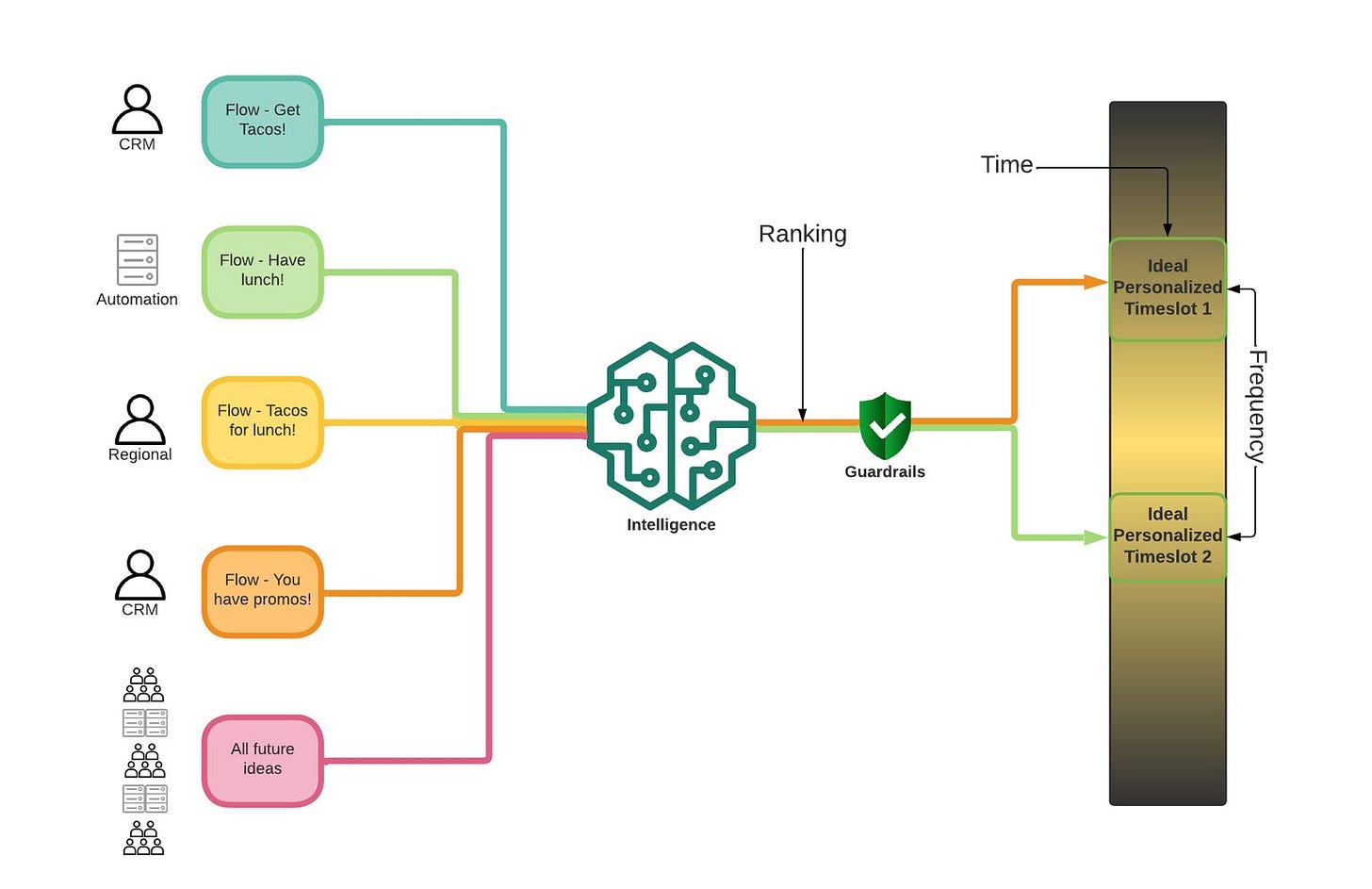

The team at Stitch Fix discuss their new recommender which incorporates temporally masked encoders - looks very powerful

Impressive work at Uber optimising the timing of their push notifications

Always fun to see updates in the robotics field:

Excellent progress at Amazon on a problem previously thought intractable - where to optimally store items - good read

Cool and a little creepy - Robot table tennis at Google!

More impressive progress at Google - generating 3D 'flythrough' videos from still photos

Improving the software engineering process with AI - GitHub explores 'AI for Pull Requests'

Bytedance have published details of Monolith, their real-time recommendation system which looks impressive

Cool to see real-world use-cases of Reinforcement Learning- this time from DeepMind on controlling commercial cooling systems

Good potential for AI to improve the efficiency of shrimp farming and other aquaculture

Useful pointers on Explainability...

Elegant application of LIME and explainable AI to Poker

If you are exploring explainability techniques like LIME and SHAP, teex looks like a useful framework for evaluating the explanations.

And 'ExplainerDashboard' looks useful...

As does WeightedSHAP

More groundbreaking releases from the team at Meta/Facebook

Teaching AI advanced mathematical reasoning - "Meta AI has built a neural theorem prover that has solved 10 International Math Olympiad (IMO) problems"

Very impressive - CICERO: An AI agent that negotiates, persuades, and cooperates with people.

"Today, we’re announcing a breakthrough toward building AI that has mastered these skills. We’ve built an agent – CICERO – that is the first AI to achieve human-level performance in the popular strategy game Diplomacy*"

"Diplomacy has been viewed for decades as a near-impossible grand challenge in AI because it requires players to master the art of understanding other people’s motivations and perspectives; make complex plans and adjust strategies; and then use natural language to reach agreements with other people, convince them to form partnerships and alliances, and more. CICERO is so effective at using natural language to negotiate with people in Diplomacy that they often favored working with CICERO over other human participants."

Finally, great summary post from Jeff Dean at Google highlighting how AI is driving worldwide progress in 3 significant areas: Supporting thousands of languages; Empowering creators and artists; Addressing climate change and health challenges - well worth a read

How does that work?

Tutorials and deep dives on different approaches and techniques

A couple more Transformer resources:

Quick and simple look at transformer embeddings and tokenisation; also a quick but thoughtful piece making the case for relative position embeddings

This is lovely - BertViz - visualising attention in transformer language models - nothing like a good picture to help explain how things work!

Transfer learning is becoming more and more popular and useful:

Good overview of Contrastive learning from the Stanford AI lab

"Contrastive learning is a powerful class of self-supervised visual representation learning methods that learn feature extractors by (1) minimizing the distance between the representations of positive pairs, or samples that are similar in some sense, and (2) maximizing the distance between representations of negative pairs, or samples that are different in some sense. Contrastive learning can be applied to unlabeled images by having positive pairs contain augmentations of the same image and negative pairs contain augmentations of different images."

Excellent python library, pythae, for comparing different AutoEncoders - definitely worth playing around with if you get the time
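For anyone who wants to see the contrastive idea from the Stanford quote above in code, here is a minimal sketch. It is not taken from the linked post: it assumes an InfoNCE-style loss (one common choice among several), cosine similarity as the distance measure, and made-up 2-d "embeddings" standing in for the output of a real feature extractor. The function names and the temperature value are illustrative only.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce_loss(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style loss for a single anchor (an assumed, illustrative choice).

    The loss is small when the anchor is much more similar to its positive
    than to any of the negatives, and large otherwise.
    """
    pos_logit = cosine_similarity(anchor, positive) / temperature
    logits = [pos_logit] + [
        cosine_similarity(anchor, neg) / temperature for neg in negatives
    ]
    # Numerically stable log-sum-exp over all candidates.
    max_logit = max(logits)
    log_sum_exp = max_logit + math.log(
        sum(math.exp(logit - max_logit) for logit in logits)
    )
    # Negative log-probability of picking the positive among all candidates.
    return log_sum_exp - pos_logit


# Toy embeddings: in practice these would come from a trained encoder.
anchor = [1.0, 0.0]
positive = [0.9, 0.1]                   # e.g. an augmentation of the same image
negatives = [[0.0, 1.0], [-1.0, 0.0]]   # e.g. augmentations of other images

good = info_nce_loss(anchor, positive, negatives)
# Swap roles: treat a dissimilar vector as the "positive".
bad = info_nce_loss(anchor, negatives[0], [positive, negatives[1]])

print("loss with a well-matched positive:", good)
print("loss with a mismatched positive:  ", bad)
```

Training an encoder to reduce this loss pulls positive pairs together and pushes negative pairs apart, which is the behaviour the quote describes.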

A couple of reinforcement learning examples:

First digging into imitation learning, again from the Stanford AI Lab

And an excellent post from Google Research talking through how to incorporate prior knowledge into reinforcement learning

Not really a tutorial but GeoTorch looks like a very useful library if you are working with geospatial models...

A detailed look at NGBoost and probabilistic prediction with gradient boost models

Finally an excellent primer from HuggingFace on all the different aspects of Document AI

"Enterprises are full of documents containing knowledge that isn't accessible by digital workflows. These documents can vary from letters, invoices, forms, reports, to receipts. With the improvements in text, vision, and multimodal AI, it's now possible to unlock that information. This post shows you how your teams can use open-source models to build custom solutions for free!"

Practical tips

How to drive analytics and ML into production

Useful insight from Shopify on reducing BigQuery costs - how they fixed their $1m query. In a similar vein this is a useful study comparing two open source table formats: Delta Lake and Apache Iceberg (spoiler alert... Delta Lake is faster)

Good post on the challenges of building realtime pipelines

"By far, the most expensive, complex, and performant method is a fully realtime ML pipeline; the model runs in realtime, the features run in realtime, and the model is trained online, so it is constantly learning. Because the time, money, and resources required by a fully realtime system are so extensive, this method is infrequently utilized, even by FAANG-type companies, but we highlight it here because it is also incredible what this type of realtime implementation is capable of."

As always, lots going on in the world of MLOps:

This looks worth exploring - simple setup for experiment tracking with Ploomber

Relevant talk from Stitch Fix on how they monitor pipelines and ML Models with whylogs

And if you are training and monitoring Deep Learning models, weightwatcher looks like a useful tool

Finally, we have featured various tutorials from Chip Huyen in the past- she now has a book on Designing Machine Learning Systems which I hear is very good (hat-tip Glen Wright Colopy)

Useful and pretty comprehensive overview of Feature Engineering and Feature Extraction techniques

This is sad to see - a data scientist leaving the field. Lots in this post that I'm sure will resonate with many- highlights the importance of good technical senior management

"The reason managers pursued these insane ideas is partly because they are hired despite not having any subject matter expertise in business or the company’s operations, and partly because VC firms had the strange idea that ballooning costs well in excess of revenue was “growth” and therefore good in all cases; the business equivalent of the Flat Earth Society."

This is impressive - the 'handbook' of the GitLab data team. I know well how hard it is to pull something like this together!

Useful -"How I learn Machine Learning"

Bigger picture ideas

Longer thought provoking reads - lean back and pour a drink! ...

Thoughtful piece about the nature of recommendations- good read

“A central goal of recommender systems is to select items according to the “preferences” of their users. “Preferences” is a complicated word that has been used across many disciplines to mean, roughly, “what people want.” In practice, most recommenders instead optimize for engagement. This has been justified by the assumption that people always choose what they want, an idea from 20th-century economics called revealed preference. However, this approach to preferences can lead to a variety of unwanted outcomes including clickbait, addiction, or algorithmic manipulation.

Doing better requires both a change in thinking and a change in approach. We’ll propose a more realistic definition of preferences, taking into account a century of interdisciplinary study, and two concrete ways to build better recommender systems: asking people what they want instead of just watching what they do, and using models that separate motives, behaviors, and outcomes.”

Interesting take on overfitting - "Too much efficiency makes everything worse"

“For instance, as an occasional computer vision researcher, my goal is sometimes to prove that my new image classification model works well. I accomplish this by measuring its accuracy, after asking it to label images (is this image a cat or a dog or a frog or a truck or a ...) from a standardized test dataset of images. I'm not allowed to train my model on the test dataset though (that would be cheating), so I instead train the model on a proxy dataset, called the training dataset. I also can't directly target prediction accuracy during training, so I instead target a proxy objective which is only related to accuracy. So rather than training my model on the goal I care about — classification accuracy on a test dataset — I instead train it using a proxy objective on a proxy dataset.”

"But the best is yet to come. The really exciting applications will be action-driven, where the model acts like an agent choosing actions. And although academics can argue all day about the true definition of AGI, an action-driven LLM is going to look a lot like AGI."

"However, I worry that many startups in this space are focusing on the wrong things early on. Specifically, after having met and looked into numerous companies in this space, it seems that UX and product design is the predominant bottleneck holding back most applied large language model startups, not data or modeling"

"We are rapidly pursuing the industrialization of biotech. Large-scale automation now powers complex bio-foundries. Many synthetic biology companies are hellbent on scaling production volumes of new materials. A major concern is the shortage of bioreactors and fermentation capacity. While these all seem like obvious bottlenecks for the Bioeconomy, what if they aren’t? What if there is another way? Here, I’ll explore a different idea: the biologization of industry."

Fun Practical Projects and Learning Opportunities

A few fun practical projects and topics to keep you occupied/distracted:

Visualisation of NY City Trees!

Exploring the relationship between climate change and violent conflict

What's not to like - machine learning with unix pipes!

Go Big! - OpenAI Startup Fund

Another cool visualisation- this time 24 hours of Global Air Traffic

Covid Corner

Apparently Covid is over - certainly there are very limited restrictions in the UK now

The latest results from the ONS tracking study estimate 1 in 60 people in England have Covid (a slight increase from last week's 1 in 65) - a lot better than last month (1 in 35)... but still a far cry from the 1 in 1000 we had in the summer of 2021.

The UK has approved the Moderna 'Dual Strain' vaccine which protects against original strains of Covid and Omicron.

Updates from Members and Contributors

Mia Hatton from the ONS Data Science campus is looking for feedback from any government and public sector employees who have an interest in data science to help shape the future of the committee- check out the survey here (you can also join the mailing list here).

Fresh from the success of their ESSnet Web Intelligence Network webinars, the ONS Data Science campus have another excellent set of webinars coming up:

24 Jan’23 – Enhancing the Quality of Statistical Business Registers with Scraped Data. This webinar will aim to inspire and equip participants keen to use web-scraped information to enhance the quality of the Statistical Business Registers. Sign up here

23 Feb’23 – Methods of Processing and Analysing of Web-Scraped Tourism Data. This webinar will discuss the issues of data sources available in tourism statistics. We will present how to search for new data sources and how to analyse them. We will review and apply methods for merging and combining the web scraped data with other sources, using various programming environments. Sign up here

Jobs!

The Job market is a bit quiet over the summer- let us know if you have any openings you'd like to advertise

Evolution AI are looking to hire someone for applied deep learning research. Must like a challenge. Any background but needs to know how to do research properly. Remote. Apply here

Napier AI are looking to hire a Senior Data Scientist (Machine Learning Engineer) and a Data Engineer

Again, hope you found this useful. Please do send on to your friends- we are looking to build a strong community of data science practitioners- and sign up for future updates here.

- Piers

The views expressed are our own and do not necessarily represent those of the RSS